Video Friday: Loco-Manipulation

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

Eurobot Open 2024: 8–11 May 2024, LA ROCHE-SUR-YON, FRANCE

ICRA 2024: 13–17 May 2024, YOKOHAMA, JAPAN

RoboCup 2024: 17–22 July 2024, EINDHOVEN, NETHERLANDS

Cybathlon 2024: 25–27 October 2024, ZURICH, SWITZERLAND

Enjoy today’s videos!

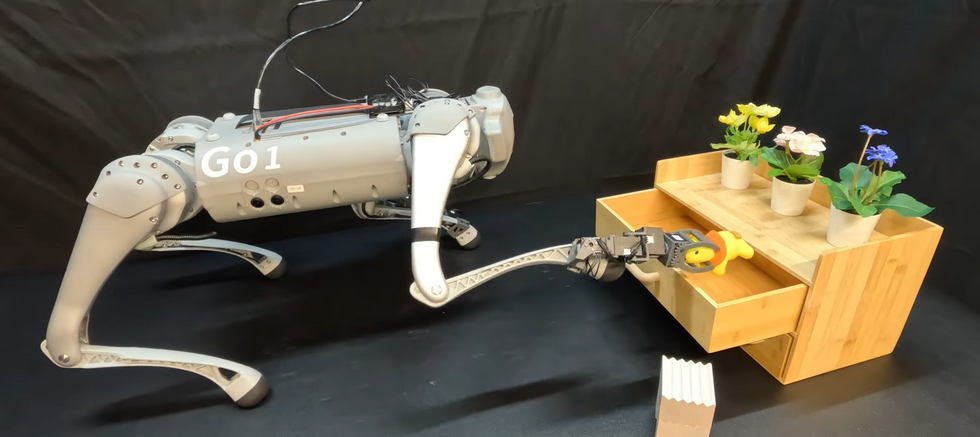

In this work, we present LocoMan, a dexterous quadrupedal robot with a novel morphology to perform versatile manipulation in diverse constrained environments. By equipping a Unitree Go1 robot with two low-cost and lightweight modular 3-DoF loco-manipulators on its front calves, LocoMan leverages the combined mobility and functionality of the legs and grippers for complex manipulation tasks that require precise 6D positioning of the end effector in a wide workspace.

[ CMU ]

Thanks, Changyi!

Object manipulation has been extensively studied in the context of fixed-base and mobile manipulators. However, the overactuated locomotion modality employed by snake robots allows for a unique blend of object manipulation through locomotion, referred to as loco-manipulation. In this paper, we present an optimization approach to solving the loco-manipulation problem based on nonimpulsive implicit-contact path planning for our snake robot COBRA.

Okay, but where that costume has eyes is not where Spot has eyes, so the Spot in the costume can’t see, right? And now I’m skeptical of the authenticity of the mutual snoot-boop.

[ Boston Dynamics ]

Here’s some video of Field AI’s robots operating in relatively complex and unstructured environments without prior maps. Make sure to read our article from this week for details!

[ Field AI ]

Is it just me, or is it kind of wild that researchers are now publishing papers comparing their humanoid controller to the “manufacturer’s” humanoid controller? It’s like humanoids are a commodity now or something.

[ OSU ]

I, too, am packing armor for ICRA.

[ Pollen Robotics ]

Honey Badger 4.0 is our latest robotic platform, created specifically for traversing hostile environments and difficult terrains. Equipped with multiple cameras and sensors, it will make sure no defect is missed during inspection.

[ MAB Robotics ]

Thanks, Jakub!

Have an automation task that calls for the precision and torque of an industrial robot arm…but you need something that is more rugged or has a nonconventional form factor? Meet the HEBI Robotics H-Series Actuator! With 9x the torque of our X-Series and seamless compatibility with the HEBI ecosystem for robot development, the H-Series opens a new world of possibilities for robots.

[ HEBI ]

Thanks, Dave!

This is how all spills happen at my house too: super passive-aggressively.

[ 1X ]

EPFL’s team, led by Ph.D. student Milad Shafiee along with coauthors Guillaume Bellegarda and BioRobotics Lab head Auke Ijspeert, has trained a four-legged robot using deep reinforcement learning to navigate challenging terrain, achieving a milestone in both robotics and biology.

[ EPFL ]

At Agility, we make robots that are made for work. Our robot Digit works alongside us in spaces designed for people. Digit handles the tedious and repetitive tasks meant for a machine, allowing companies and their people to focus on the work that requires the human element.

[ Agility ]

With a wealth of incredible figures and outstanding facts, here’s Jan Jonsson, ABB Robotics veteran, sharing his knowledge and passion for some of our robots and controllers from the past.

[ ABB ]

I have it on good authority that getting robots to mow a lawn (like, any lawn) is much harder than it looks, but Electric Sheep has built a business around it.

[ Electric Sheep ]

The AI Index, currently in its seventh year, tracks, collates, distills, and visualizes data relating to artificial intelligence. The Index provides unbiased, rigorously vetted, and globally sourced data for policymakers, researchers, journalists, executives, and the general public to develop a deeper understanding of the complex field of AI. Led by a steering committee of influential AI thought leaders, the Index is the world’s most comprehensive report on trends in AI. In this seminar, HAI Research Manager Nestor Maslej offers highlights from the 2024 report, explaining trends related to research and development, technical performance, technical AI ethics, the economy, education, policy and governance, diversity, and public opinion.

[ Stanford HAI ]

This week’s CMU Robotics Institute seminar, from Dieter Fox at Nvidia and the University of Washington, is “Where’s RobotGPT?”

In this talk, I will discuss approaches to generating large datasets for training robot-manipulation capabilities, with a focus on the role simulation can play in this context. I will show some of our prior work, where we demonstrated robust sim-to-real transfer of manipulation skills trained in simulation, and then present a path toward generating large-scale demonstration sets that could help train robust, open-world robot-manipulation models.

[ CMU ]