Last week there was a press release you might easily have missed. A Distributed Autonomous Organization (DAO) called OrangeDAO is cooperating with a small seed venture fund called Press Start Capital to establish the OrangeDAO X Press Start Cap Fellowship Program for new Web3 entrepreneurs. Successful applicants get $25,000 each plus 10 weeks of structured mentorship plus continued access to the more than 1200-member OrangeDAO network. In exchange, OrangeDAO and Press Start get to invest in the resulting companies, if any, produced by the class.

Last week there was a press release you might easily have missed. A Distributed Autonomous Organization (DAO) called OrangeDAO is cooperating with a small seed venture fund called Press Start Capital to establish the OrangeDAO X Press Start Cap Fellowship Program for new Web3 entrepreneurs. Successful applicants get $25,000 each plus 10 weeks of structured mentorship plus continued access to the more than 1200-member OrangeDAO network. In exchange, OrangeDAO and Press Start get to invest in the resulting companies, if any, produced by the class.

Big deal, it’s Y Combinator Junior, right?

Wrong. It’s Y Combinator on steroids.

This second-generation YC has been released in the wild where it will replicate and grow unconstrained. Expect to see more deals like this one.

A Distributed Autonomous Organization is a financial partnership that leverages blockchain technology to help multiple users make decisions as a single entity. There are many DAOs around and hardly anybody understands them or knows what they are good for. Mainly they have seemed to be involved in the NFT market. But OrangeDAO is different. It has 1200+ members and every one of those members is a graduate of the Y Combinator startup accelerator. They are verified Y Combinator company founders, so they’ve all had similar entrepreneurial experiences and see business much the same way as a result. OrangeDAO seems to have big plans and to make those plans happen in August the DAO, itself, raised $80 million in venture capital, with their first use of that capital being these Fellowships.

I think this will change forever venture capital and the world economy.

It represents a new stage in the evolution of venture capital. In many senses it is the democratization of VC.

It’s no surprise that OrangeDAO comes from Y Combinator alumni. YC, itself, disrupted the VC model and this Fellowship continues that disruption.

It’s turning what was a disruption into an ecosystem.

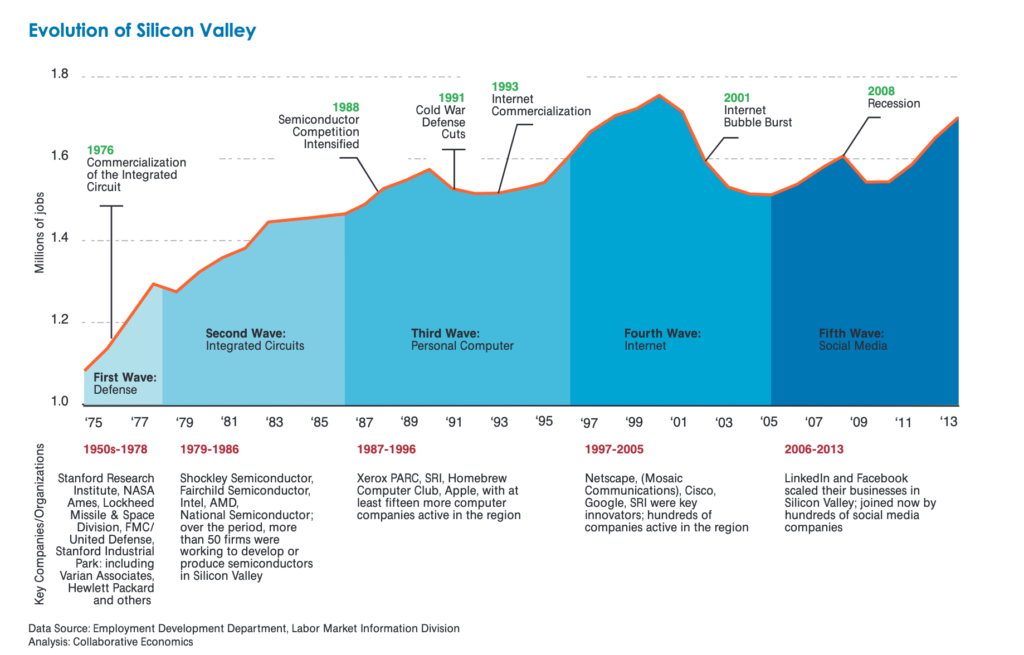

Think about the VC model. The original Silicon Valley VC wasn’t even from Silicon Valley — it was Sherman Fairchild from Fairchild Camera in Baltimore, who came to Mountain View to invest in Shockley Semiconductor in 1954.

This was the Tycoon-as-VC model, which was soon replaced by the Professional VC model where dumb institutional money was invested by VCs (generally lawyers or former CFOs) who didn’t really understand what they were investing in. But there were enough opportunities that they could “spray and pray” and succeed on the simple odds. Tim Draper’s grandpa and Arthur Rock typified this generation. Few people realize that Rock invested only $75K in Apple… ever.

Eventually there rose in Silicon Valley a technocracy with a new class of VCs who DID more or less understand their investments. Don Valentine and Tom Perkins led this charge and ultimately hired associates and partners who looked just like them, which describes every person today working on Sand Hill Road.

Typical of this glory age of VC, there were dumb institutional investors, technical or semi-technical professional VCs, and an emerging class of entrepreneurs who needed progressively LESS money as technical markets blossomed and third-party services became available.

At this point there emerged the YC/Techstars, Angel investing, and eventually crowdfunding models. YC brought with it two revolutionary ideas: 1) you didn’t have to have a VC friend to get a chance to pitch your idea, and; 2) there was a VC role for educating entrepreneurs.

Prior to YC (and to this day in most places) VCs like to keep their entrepreneurs ignorant, so they can be more easily controlled. YC worked to subvert that control.

Angel investing was something parallel to YC — experienced (generally self-taught — the hard way) entrepreneurs playing VC together over dinner for smaller deals. Remember The Band-of-Angels had Gordon Moore at those dinners. But total deal sizes were limited because it wasn’t professional — not full-time work for anyone.

Crowdfunding was also parallel and totally wacky because it was truly democratic: nobody knows anything. In crowdfunding EVERYONE is stupid. Neither the investors, managers, nor entrepreneurs know what they are doing, which is why crowdfunding hasn’t been a big success to date.

I had an Indian friend who worked at Intel and lived in Roseville in a neighborhood filled with Indian engineers who worked at Intel and lived in Roseville. The average first-generation Indian engineer in California keeps in his/her checking account $100,000 “just in case.” My friend used to argue that he could walk around the block with a good pitch deck and get seed funding for his next venture by the time he made it back to his own doorstep. It was a brilliant observation.

These OrangeDAO Fellowships are like that Indian neighborhood in Roseville. The DAO members all have similar backgrounds, similar values, and similar risk tolerances. THERE ARE MORE OF THEM, so they can do bigger deals. And — here’s the important bit — THEY ARE ALL YC-EDUCATED and connected globally through the blockchain. They not only know many of the same things, they have a sense of where this knowledge comes from and why it is useful. That’s Paul Graham’s legacy at YC.

But this is a second-through-Nth-generation movement, at the very center of which is not just education, but FURTHER education — the very concept that education, itself, is a legacy to be nurtured and extended. Think land-grant American universities of the 19th century, In the YC-based DAO we have people who want the next generation of entrepreneurs to be even better-educated. It’s not some egalitarian goal, either: they see it as key to success for the whole thing.

Smart people with good ideas will self-identify, be funded at a subsistence level to allow them to develop those ideas and prove their worth, then they can participate on a truly level playing field for the first time.

YC and Techstars and their copycat cousins did this too, BUT NEVER AT SCALE.

Gone is the Tycoon, gone is the professional VC who doesn’t understand his tech, gone soon will be the angels (subsumed into the DAO model), and gone for the most part are the asshole VCs whom entrepreneurs grow to hate (not all of them, but a lot).

Done correctly, this model is essentially Meritocratic VC. If the idea is good, the market is ready, and the people know what they are doing, the capital will be there. Everything has the prospect of being better under this evolved system, or at least that’s the way I see it. And it all comes down to the centrality of education combined with scale.

Now here is the $100 TRILlION question: can this Middle Class VC model be exported to Topeka and Timbuktu?

I think it can be.

The problem with all the Silicon Glens and Silicon Prairies, and Silicon Forests and Silicon Gulags that failed repeatedly over the last 30 years is they were copying the wrong parts of the successful model. They didn’t have the people and the institutional knowledge of Silicon Valley and Sand Hill Road, but this OrangeDAO DOES.

It’s a containerized copy of successful Silicon Valley culture that carries with it all dependencies, even money. .

The post

Paul Graham’s Legacy first appeared on

I, Cringely.

Digital Branding

Digital Branding

Web Design

Marketing

I’ve been following the press and social media coverage of Apple’s pricey new Vision Pro Augmented Reality headset, which now totals hundreds of stories and thousands of comments and I’ve noticed one idea missing from all of them: what would Steve (Jobs) say? Steve would call the Vision Pro a “hobby,” just as he did with the original Apple TV.

I’ve been following the press and social media coverage of Apple’s pricey new Vision Pro Augmented Reality headset, which now totals hundreds of stories and thousands of comments and I’ve noticed one idea missing from all of them: what would Steve (Jobs) say? Steve would call the Vision Pro a “hobby,” just as he did with the original Apple TV.