Engineering the First Fitbit: The Inside Story

It was December 2006. Twenty-nine-year-old entrepreneur James Park had just purchased a Wii game system. It included the Wii Nunchuk, a US $29 handheld controller with motion sensors that let game players interact by moving their bodies—swinging at a baseball, say, or boxing with a virtual partner.

Park became obsessed with his Wii.

“I was a tech-gadget geek,” he says. “Anyone holding that nunchuk was fascinated by how it worked. It was the first time that I had seen a compelling consumer use for accelerometers.”

After a while, though, Park spotted a flaw in the Wii: It got you moving, sure, but it trapped you in your living room. What if, he thought, you could take what was cool about the Wii and use it in a gadget that got you out of the house?

The first generation of Fitbit trackers shipped in this package in 2009. NewDealDesign

“That,” says Park, “was the aha moment.” His idea became Fitbit, an activity tracker that has racked up sales of more than 136 million units since its first iteration hit the market in late 2009.

But back to that “aha moment.” Park quickly called his friend and colleague Eric Friedman. In 2002, the two, both computer scientists by training, had started a photo-sharing company called HeyPix, which they sold to CNET in 2005. They were still working for CNET in 2006, but it wasn’t a bad time to think about doing something different.

Friedman loved Park’s idea.

“My mother was an active walker,” Friedman says. “She had a walking group and always had a pedometer with her. And my father worked with augmentative engineering [assistive technology] for the elderly and handicapped. We’d played with accelerometer tech before. So it immediately made sense. We just had to refine it.”

The two left CNET, and in April 2007 they incorporated the startup, with Park as CEO and Friedman as chief technology officer. Park and Friedman weren’t trying to build the first step counter: mechanical pedometers date back to the 1700s. Nor were they inventing the first smart activity tracker; in 1999, BodyMedia, a medical device manufacturer, had combined accelerometers with other sensors in an armband designed to measure calories burned. And Park and Friedman didn’t get a smart consumer tracker to market first. In 2006, Nike had worked with Apple to launch the Nike+ for runners, a motion-tracking system that required a special shoe and a receiver that plugged into an iPod.

Fitbit’s founders James Park [left] and Eric Friedman released their first product in 2009, when this photo was taken. Peter DaSilva/The New York Times/Redux

Park wasn’t aware of any of this when he thought about getting fitness out of the living room, but the two quickly did their research and figured out what they did and didn’t want to do.

“We didn’t want to create something expensive, targeted at athletes,” he says. “Or something that was dumb and not connected to software. And we wanted something that could provide social connection, like photo sharing did.”

That something had to be comfortable to wear all day, be easy to use, upload its data seamlessly so the data could be tracked and shared with friends, and rarely need charging. Not an easy combination of requirements.

“It’s one of those things where the simpler you get, the harder it becomes to design something well,” Park says.

The first Fitbit was designed for women

The first design decision was the biggest one. Where on the body did they expect people to put this wearable? They weren’t going to ask people to buy special shoes, like the Nike+, or wear a thick band on their upper arms, like BodyMedia’s tracker.

They hired NewDealDesign to figure out some of these details.

“In our first two weeks, after multiple discussions with Eric and James, we decided that the project was going to be geared to women,” says Gadi Amit, president and principal designer of NewDealDesign. “That decision was the driver of the form factor.”

“We wanted to start with something familiar to people,” Park says, “and people tended to clip pedometers to their belts.” So a clip-on device made sense. But women generally don’t wear belts.

To do what it needed to do, the clip-on gadget would have to contain a roughly 2.5-by-2.5-centimeter (1-by-1-inch) printed circuit board, Amit recalls. The big breakthrough came when the team decided to separate the electronics and the battery, which in most devices are stacked. “By doing that, and elongating it a bit, we found that women could put it anywhere,” Amit says. “Many would put it in their bras, so we targeted the design to fit a bra in the center front, purchasing dozens of bras for testing.”

The decision to design for women also drove the overall look, to “subdue the user interface,” as Amit puts it. They hid a low-resolution monochrome OLED display behind a continuous plastic cover, with the display lighting up only when you asked it to. This choice helped give the device an impressive battery life.

The earliest Fitbit devices used an animated flower as a progress indicator. NewDealDesign

They also came up with the idea of a flower as a progress indicator—inspired, Park says, by the Tamagotchi, one of the biggest toy fads of the late 1990s. “So we had a little animated flower that would shrink or grow based on how active you were,” Park explains.

And after much discussion over controls, the group gave the original Fitbit just one button.

Hiring an EE—from Dad—to design Fitbit’s circuitry

Park and Friedman knew enough about electronics to build a crude prototype, “stuffing electronics into a box made of cut-up balsa wood,” Park says. But they also knew that they needed to bring in a real electrical engineer to develop the hardware.

Fortunately, they knew just whom to call. Friedman’s father, Mark, had for years been working to develop a device for use in nursing homes, to remotely monitor the position of bed-bound patients. Mark’s partner in this effort was Randy Casciola, an electronics engineer and currently president of Morewood Design Labs.

Eric called his dad, told him about the gadget he and Park envisioned, and asked if he and Casciola could build a prototype.

“Mark and I thought we’d build a quick-and-dirty prototype, something they could get sensor data from and use for developing software. And then they’d go off to Asia and get it miniaturized there,” Casciola recalls. “But one revision led to another.” Casciola ended up working on circuit designs for Fitbits virtually full time until the sale of the company to Google, announced in 2019 and completed in early 2021.

“We were just two little guys in a little office in Pittsburgh,” Casciola says. “Before Fitbit came along, we had realized that our nursing-home thing wasn’t likely to ever be a product and had started taking on some consulting work. I had no idea Fitbit would become a household name. I just like working on anything, whether I think it’s a good idea or not, or even whether someone is paying me or not.”

The earliest prototypes were pretty large, about 10 by 15 cm, Casciola says. They were big enough to easily hook up to test equipment, yet small enough to strap on to a willing test subject.

After that, Park and Eric Friedman—along with Casciola, two contracted software engineers, and a mechanical design firm—struggled with turning the bulky prototype into a small and sleek device that counted steps, stored data until it could be uploaded and then transmitted it seamlessly, had a simple user interface, and didn’t need daily charging.

“Figuring out the right balance of battery life, size, and capability kept us occupied for about a year,” Park says.

The Fitbit prototype, sitting on its charger, booted up for the first time in December 2008. James Park

After deciding to include a radio transmitter, they made a big move: They turned away from the Bluetooth standard for wireless communications in favor of the ANT protocol, a technology from Garmin subsidiary Dynastream Innovations that used far less power. That meant the Fitbit wouldn’t be able to upload to computers directly. Instead, the team designed their own base station, which could be left plugged into a computer and would grab data anytime the Fitbit wearer passed within range.

Casciola didn’t have expertise in radio-frequency engineering, so he relied on the supplier of the ANT radio chips: Nordic Semiconductor, in Trondheim, Norway.

“They would do a design review of the circuit board layout,” he explains. “Then we would send our hardware to Norway. They would do RF measurements on it and tell me how to tweak the values of the capacitors and inductors in the RF chain, and I would update the schematic. It’s half engineering and half black magic to get this RF stuff working.”

Another standard they didn’t use was the ubiquitous USB charging connection.

“We couldn’t use USB,” Park says. “It just took up too much volume. Somebody actually said to us, ‘Whatever you do, don’t design a custom charging system because it’ll be a pain, it’ll be super expensive.’ But we went ahead and built one. And it was a pain and super expensive, but I think it added a level of magic. You just plopped your device on [the charger]. It looked beautiful, and it worked consistently.”

Most of the electronics they used were off the shelf, including a 16-bit Texas Instruments MSP430 microcontroller, along with 92 kilobytes of flash memory and 4 kilobytes of RAM that had to hold the operating system, the rest of the code, all the graphics, and at least seven days’ worth of collected data.
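
That memory budget was tight. As a rough, hypothetical illustration (the article doesn’t describe Fitbit’s actual data format), suppose the tracker logged one 16-bit step count per minute and kept unsynced minutes in a fixed-size circular log that the base station emptied whenever the wearer walked by. The Python sketch below computes what seven days of such a log would cost and models the overwrite-oldest behavior. The per-minute sample size, the reading of “92 kilobytes” as 92 KiB, and the `MinuteLog` class are all assumptions.

```python
from collections import deque

MINUTES_PER_DAY = 24 * 60
DAYS_RETAINED = 7          # "at least seven days' worth," per the article
BYTES_PER_SAMPLE = 2       # assumption: one unsigned 16-bit step count per minute
FLASH_BYTES = 92 * 1024    # assumption: "92 kilobytes" read as 92 KiB, shared with code and graphics


def log_bytes(days=DAYS_RETAINED, bytes_per_sample=BYTES_PER_SAMPLE):
    """Bytes needed to hold `days` of per-minute samples."""
    return days * MINUTES_PER_DAY * bytes_per_sample


class MinuteLog:
    """Hypothetical fixed-capacity step log: once full, each new minute evicts the oldest."""

    def __init__(self, capacity=DAYS_RETAINED * MINUTES_PER_DAY):
        self._buf = deque(maxlen=capacity)

    def record(self, steps):
        # Clamp to the range an unsigned 16-bit field can hold.
        self._buf.append(min(max(steps, 0), 0xFFFF))

    def drain(self):
        """Hand every stored minute (oldest first) to the uploader and empty the log."""
        data = list(self._buf)
        self._buf.clear()
        return data


if __name__ == "__main__":
    need = log_bytes()
    print(f"{need} bytes for {DAYS_RETAINED} days = {need / FLASH_BYTES:.0%} of the flash")
```

Under those assumptions the log alone needs 7 × 1,440 × 2 = 20,160 bytes: about a fifth of the flash and nearly five times the 4 KB of RAM, so it would have to live in flash alongside the code and graphics.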

The Fitbit was designed to resist sweat, and the devices generally survived showers and quick dips, says Friedman. “But hot tubs were the bane of our existence. People clipped it to their swimsuits and forgot they had it on when they jumped into the hot tub.”

Fitbit’s demo or die moment

Up to this point, the company was surviving on $400,000 invested by Park, Friedman, and a few people who had backed their previous company. But more money would be needed to ramp up manufacturing. And so a critical next step would be a live public demo, which they scheduled for the TechCrunch conference in San Francisco in September 2008.

Live demonstrations of new technologies are always risky, and this one walked right up to the edge of disaster. The plan was to ask an audience member to call out a number, and then Park, wearing the prototype in its balsa-wood box, would walk that number of steps. The count would sync wirelessly to a laptop projecting to a screen on stage. When Friedman hit refresh on the browser, the step count would appear on the screen. What could go wrong?

A lot. Friedman explains: “You think counting steps is easy, but let’s say you do three steps. One, two, three. When you bring your feet together, is that a step or is that the end? It’s much easier to count 1,000 steps than it is to do 10 steps. If I walk 10 steps and am off by one, that’s a glaring error. With 1,000, that variance becomes noise.”
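
Friedman’s point is a matter of relative error. If n is the true number of steps and n̂ is the device’s count, being off by a single step is a 10 percent error on a 10-step demo but only a 0.1 percent error over 1,000 steps:

```latex
\varepsilon = \frac{\lvert \hat{n} - n \rvert}{n},
\qquad
\varepsilon\big|_{n=10,\ \hat{n}=11} = \frac{1}{10} = 10\%,
\qquad
\varepsilon\big|_{n=1000,\ \hat{n}=1001} = \frac{1}{1000} = 0.1\%
```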

The first semi-assembled Fitbit records its inaugural step count. James Park

After a lot of practice, the two thought they could pull it off. Then came the demo. “While I was walking, the laptop crashed,” Park says. “I wasn’t aware of that. I was just walking happily. Eric had to reboot everything while I was still walking. But the numbers showed up; I don’t think anyone except Eric realized what had happened.”

That day, some 2,000 preorders poured in. And Fitbit closed a $2 million round of venture investment the next month.

Though Park and Friedman had hoped to get Fitbits into users’ hands—or clipped onto their bras—by Christmas of 2008, they missed that deadline by a year.

The algorithms that determine Fitbit’s count

Part of Fitbit’s challenge of getting from prototype to shippable product was software development. They couldn’t expect users to walk as precisely as Park did for the demo. Instead, the device’s algorithms needed to determine what a step was and what was a different kind of motion—say, someone scratching their nose.

“Data collection was difficult,” Park says. “Initially, it was a lot of us wearing prototype devices doing a variety of different activities. Our head of research, Shelten Yuen, would follow, videotaping so we could go back and count the exact number of steps taken. We would wear multiple devices simultaneously, to compare the data collects against each other.”

Friedman remembers one such outing. “James was tethered to the computer, and he was pretending to walk his dog around the Haight [in San Francisco], narrating this little play that he’s putting on: ‘OK, I’m going to stop. The dog is going to pee on this tree. And now he’s going over there.’ The great thing about San Francisco is that nobody looks strangely at two guys tethered together walking around talking to themselves.”

“Pushing baby strollers was an issue,” because the wearer’s arms aren’t swinging, Park says. “So one of our guys put an ET doll in a baby stroller and walked all over the city with it.”

Road noise was another big issue. “Yuen, who was working on the algorithms, was based in Cambridge, Mass.,” Park says. “They have more potholes than we do. When he took the bus, the bus would hit the potholes and [the device would] be bouncing along, registering steps.” They couldn’t just fix the issue by looking for a regular cadence to count steps, he adds, because not everyone has a regular cadence. “Older people tend to have an irregular cadence—to the device, older people look a lot like buses going over potholes.”
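
The article doesn’t describe Fitbit’s actual step-counting algorithms, but a textbook approach shows why potholes and irregular gaits are hard to tell apart. The hypothetical Python sketch below counts a step each time the acceleration magnitude rises above a threshold, with a short refractory period to avoid double counting. It then applies a cadence-regularity check based on the spread of step-to-step intervals. The 50 Hz sample rate, the 1.2 g threshold, the 0.25-second minimum gap, and the 0.25 coefficient-of-variation cutoff are illustrative assumptions, not Fitbit parameters.

```python
import math
import statistics


def count_steps(samples, rate_hz=50, threshold_g=1.2, min_gap_s=0.25):
    """Return sample indices of detected steps (hypothetical threshold detector).

    samples: sequence of (x, y, z) accelerations in g.
    A step is an upward crossing of `threshold_g` by the acceleration magnitude,
    ignored if it comes less than `min_gap_s` after the previous step
    (a refractory period that suppresses double counts).
    """
    min_gap = int(min_gap_s * rate_hz)
    steps = []
    prev_above = False
    for i, (x, y, z) in enumerate(samples):
        above = math.sqrt(x * x + y * y + z * z) > threshold_g
        if above and not prev_above and (not steps or i - steps[-1] >= min_gap):
            steps.append(i)
        prev_above = above
    return steps


def cadence_is_regular(step_indices, max_cv=0.25):
    """Accept a run of steps only if step-to-step intervals are steady.

    Uses the coefficient of variation (population stdev / mean) of the gaps.
    Pothole jolts tend to fail this test, but so can an irregular human gait.
    """
    gaps = [b - a for a, b in zip(step_indices, step_indices[1:])]
    if len(gaps) < 2:
        return False
    return statistics.pstdev(gaps) / statistics.mean(gaps) <= max_cv


def synthetic(peak_indices, length, peak_g=1.5):
    """Resting 1 g signal with single-sample jolts at the given indices."""
    peaks = set(peak_indices)
    return [(0.0, 0.0, peak_g if i in peaks else 1.0) for i in range(length)]


if __name__ == "__main__":
    walk = synthetic(range(0, 500, 25), 500)             # steady 2 steps/s at 50 Hz
    bus = synthetic([10, 40, 160, 175, 330, 480], 500)   # irregular pothole jolts
    for name, sig in (("walk", walk), ("bus", bus)):
        idx = count_steps(sig)
        print(name, len(idx), "peaks, regular cadence:", cadence_is_regular(idx))
```

On these synthetic signals the naive counter reports 20 steps for the steady walk and 6 for the bus ride; the regularity check accepts the walk and rejects the bus. The same check would also reject a genuine walker whose steps are unevenly spaced, which is the trade-off Park describes.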

Fitbit’s founders enter the world of manufacturing

A consumer gadget means mass manufacturing, potentially in huge quantities. They talked to a lot of contract-manufacturing firms, Park recalls. They realized that as a startup with an unclear future market, they wouldn’t be of interest to the top tier of manufacturers. But they couldn’t go with the lowest-budget operations, because they needed a reasonable level of quality.

“We saw some pretty scary manufacturers,” Park said. “Dirty facilities, flash marks on their injection-molded plastics [a sign of a bad seal or other errors], very low precision.” They eventually found a small manufacturer that was “pretty good but still hungry for business.” The manufacturer was headquartered in Singapore, while their surface-mount supplier, which put components directly onto printed circuit boards, was in Batam, Indonesia.

Workers assemble Fitbits by hand in October of 2008. James Park

Working with that manufacturer, Park and Friedman made some tweaks in the design of the circuitry and the shape of the case. They struggled over how to keep water—and sweat—out of the device, settling on ultrasonic welding for the case and adding a spray-on coating for the circuitry after some devices were returned with corrosion on the electronics. That required tweaking the layout to make sure the coating would get between the chips. The coating on each circuit board had to be checked and touched up by hand. When they realized that the coating increased the height of the chips, they had to tweak the layout some more.

In December 2009, just a week before the ship date, Fitbits began rolling off the production line.

“I was in a hotel room in Singapore testing one of the first fully integrated devices,” Park says. “And it wasn’t syncing to my computer. Then I put the device right next to the base station, and it started to sync. Okay, that’s good, but what was the maximum distance it could sync? And that turned out to be literally just a few inches. In every other test we had done, it was fine. It could sync from 15 or 20 feet [5 or 6 meters] away.”

The problem, Park eventually figured out, occurred when the two halves of the Fitbit case were ultrasonically welded together. In previous syncing tests, the cases had been left unsealed. The sealing process pushed the halves closer together, so that the cable for the display touched or nearly touched the antenna printed on the circuit board, which affected the radio signal. Park tried squeezing the halves together on an unsealed unit and reproduced the problem.

Getting the first generation of Fitbits into mass production required some last-minute troubleshooting. Fitbit cofounder James Park [top, standing in center] helps debug a device at the manufacturer shortly before the product’s 2009 launch. Early units from the production line are shown partially assembled [bottom]. James Park

“I thought, if we could just push that cable away from the antenna, we’d be okay,” Park said. “The only thing I could find in my hotel room to do that was toilet paper. So I rolled up some toilet paper really tight and shoved it in between the cable and the antenna. That seemed to work, though I wasn’t really confident.”

Park went to the factory the next day to discuss the problem—and his solution—with the manufacturing team. They refined his fix—replacing the toilet paper with a tiny slice of foam—and that’s how the first generation of Fitbits shipped.

Fitbit’s fast evolution

The company sold about 5,000 of those $99 first-generation units in 2009, and more than 10 times that number in 2010. The rollout wasn’t entirely smooth. Casciola recalls that Fitbit’s logistics center was sending him a surprising number of corroded devices that had been returned by customers. Casciola’s task was to tear them down and diagnose the problem.

“One of the contacts on the device, over time, was growing a green corrosion,” Casciola says. “But the other two contacts were not.” It turned out the problem came from Casciola’s design of the system-reset trigger, which allowed users to reset the device without a reset button or a removable battery. “Inevitably,” Casciola says, “firmware is going to crash. When you can’t take the battery out, you have to have another way of forcing a reset; you don’t want to have someone waiting six days for the battery to run out before restarting.”

The reset that Casciola designed was “a button on the charging station that you could poke with a paper clip. If you did this with the tracker sitting on the charger, it would reset. Of course, we had to have a way for the tracker to see that signal. When I designed the circuit to allow for that, I ended up with a nominal voltage on one pin.” This low voltage was causing the corrosion.

“If you clipped the tracker onto sweaty clothing—remember, sweat has a high salt content—a very tiny current would flow,” says Casciola. “It was just fractions of a microamp, not enough to cause a reset, but enough, over time, to cause greenish corrosion.”

Cofounders Eric Friedman [left] and James Park visit Fitbit’s manufacturer in December of 2008. James Park

On the 2012 generation of the Fitbit, called the Fitbit One, Casciola added a new type of chip, one that hadn’t been available when he was working on the original design. It allowed the single button to trigger a reset when it was held down for some seconds while the device was sitting on the charger. That eliminated the need for the active pin.
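
The long-press reset rule can be modeled as a small state machine. In the Fitbit One this behavior came from a dedicated chip, which matters because crashed firmware can’t be trusted to run it; the Python sketch below only models the rule as the article describes it. The `ResetMonitor` class is hypothetical, and the hold time is an assumed parameter, since the article says only “some seconds.”

```python
class ResetMonitor:
    """Hypothetical model of a long-press reset: the button must be held for
    `hold_s` seconds while the tracker sits on its charger."""

    def __init__(self, hold_s=10.0):  # assumed hold time; the article says only "some seconds"
        self.hold_s = hold_s
        self._pressed_since = None  # time the current qualifying hold began
        self._fired = False         # whether this hold has already triggered a reset

    def update(self, now, button_down, on_charger):
        """Feed the current inputs (time in seconds); return True when a reset should fire."""
        if not (button_down and on_charger):
            # Releasing the button or lifting the tracker off the charger cancels the hold.
            self._pressed_since = None
            self._fired = False
            return False
        if self._pressed_since is None:
            self._pressed_since = now
        if not self._fired and now - self._pressed_since >= self.hold_s:
            self._fired = True  # fire only once per continuous hold
            return True
        return False


if __name__ == "__main__":
    monitor = ResetMonitor(hold_s=5.0)
    # Hold the button for 6 seconds with the tracker on its charger.
    for t in range(7):
        if monitor.update(t, True, True):
            print("reset at t =", t)
```

Requiring both conditions at once is what lets a single button double as a reset control without everyday presses, made off the charger, ever triggering a reset.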

The charging interface was the source of another early problem. In the initial design, the trim of the Fitbit’s plastic casing was painted with chrome. “We originally wanted an actual metal trim,” Friedman says, “but that interfered with the radio signal.”

Chrome wasn’t a great choice either. “It caused problems with the charger interface,” Park adds. “We had to do a lot of work to prevent shorting there.”

They dropped the chrome after some tens of thousands of units were shipped—and then got compliments from purchasers about the new, chrome-less look.

Evolution happened quickly, particularly in the way the device transmitted data. In 2012, when Bluetooth LE became widely available as a new low-power communications standard, the base station was replaced by a small Bluetooth communications dongle. And eventually the dongles disappeared altogether.

“We had a huge debate about whether or not to keep shipping that dongle,” Park says. “Its cost was significant, and if you had a recent iPhone, you didn’t need it. But we didn’t want someone buying the device and then returning it because their cellphone couldn’t connect.” The team closely tracked the penetration rate of Bluetooth LE in cellphones; when they felt that number was high enough, they killed off the dongle.

Fitbit’s wrist-ward migration

After several iterations of the original Fitbit design, sometimes called the “clip” for its shape, the fitness tracker moved to the wrist. This wasn’t simply a matter of redesigning how the device attached to the body; it required rethinking the algorithms.

The impetus came from some users’ desire to better track their sleep. The Fitbit’s algorithms allowed it to identify sleep patterns, a design choice that, Park says, “was pivotal, because it changed the device from being just an activity tracker to an all-day wellness tracker.” But nightclothes didn’t offer obvious spots for attachment. So the Fitbit shipped with a thin fabric wristband intended for use just at night. Users began asking customer support if they could keep the wristband on around the clock. The answer was no; Fitbit’s step-counting algorithms at the time didn’t support that.

Meanwhile, a cultural phenomenon was underway. In the mid-2000s, yellow Livestrong bracelets, made out of silicone and sold to support cancer research, were suddenly everywhere. Other causes and movements jumped on the trend with their own brightly colored wristbands. By early 2013, Fitbit and its competitors Nike and Jawbone had launched wrist-worn fitness trackers in roughly the same style as those trendy bracelets. Fitbit’s version was called the Flex, once again designed by NewDealDesign.

A no-button user interface for the Fitbit Flex

The Flex’s interface was even simpler than the original Fitbit’s one button and OLED screen: It had no buttons and no screen, just five LEDs arranged in a row and a vibrating motor. To change modes, you tapped on the surface.

“We didn’t want to replace people’s watches,” Park says. The technology wasn’t yet ready to “build a compelling device—one that had a big screen and the compute power to drive really amazing interactions on the wrist that would be worthy of that screen. The technology trends didn’t converge to make that possible until 2014 or 2015.”

The Fitbit Flex [right], the first Fitbit designed to be worn on the wrist, was released in 2013. It had no buttons and no screen. Users controlled it by tapping; five LEDs indicated progress toward a step count selected via an app [left]. iStock

“The amount of stuff the team was able to convey with just the LEDs was amazing,” Friedman recalls. “The status of where you are towards reaching your [step] goal, that’s obvious. But [also] the lights cycling to show that it’s searching for something, the vibrating when you hit your step goal, things like that.”
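
As a rough illustration of the Flex’s progress display, here is a hypothetical mapping from steps to lit LEDs. It assumes each of the five LEDs stands for one-fifth of the daily goal and that the row stays fully lit once the goal is reached; the article doesn’t specify the exact mapping Fitbit used.

```python
NUM_LEDS = 5


def leds_lit(steps, goal):
    """How many of the five LEDs to light for `steps` taken toward a daily `goal`.

    Hypothetical mapping: each LED stands for one-fifth of the goal (rounded
    down), and the row stays fully lit once the goal is met or exceeded.
    """
    if goal <= 0:
        raise ValueError("goal must be positive")
    return min(NUM_LEDS, max(steps, 0) * NUM_LEDS // goal)


def led_row(steps, goal):
    """Render the LED row as text: '#' for a lit LED, '-' for a dark one."""
    lit = leds_lit(steps, goal)
    return "#" * lit + "-" * (NUM_LEDS - lit)


if __name__ == "__main__":
    for steps in (0, 2_500, 6_000, 10_000, 14_000):
        print(f"{steps:>6} steps: {led_row(steps, 10_000)}")
```

Integer division keeps the arithmetic cheap enough for a small microcontroller, and rounding down means an LED lights only once its full fifth of the goal has been earned.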

The tap part of the interface, though, was “possibly something we didn’t get entirely right,” Park concedes. It took much fine-tuning of algorithms after the launch to better sort out what was not tapping—like applauding. Even more important, some users couldn’t quite intuit the right way to tap.
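
One simple way to separate a deliberate tap from applause, sketched here as an assumption rather than Fitbit’s actual method, is to accept a sharp jolt as a tap only if no other jolt occurs within a short quiet window around it. Clapping produces a dense train of jolts, so none of them qualify. The `isolated_taps` function and the 0.3-second window are hypothetical.

```python
def isolated_taps(spike_times, quiet_s=0.3):
    """Return the jolts that count as taps under a hypothetical isolation rule.

    spike_times: sorted times (seconds) at which the accelerometer registered
    a sharp jolt. A jolt is a tap only if no other jolt falls within
    `quiet_s` seconds before or after it. Applause produces a dense train
    of jolts, so none of its jolts pass.
    """
    taps = []
    for i, t in enumerate(spike_times):
        quiet_before = i == 0 or t - spike_times[i - 1] > quiet_s
        quiet_after = i == len(spike_times) - 1 or spike_times[i + 1] - t > quiet_s
        if quiet_before and quiet_after:
            taps.append(t)
    return taps


if __name__ == "__main__":
    # One tap, a burst of clapping, then another tap.
    print(isolated_taps([1.0, 5.0, 5.15, 5.3, 5.45, 9.0]))
```

A rule like this also illustrates the tuning problem: widen the quiet window and fewer claps slip through, but users who tap twice in quick succession start getting ignored.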

“If it works for 98 percent of your users, but you’re growing to millions of users, 2 percent really starts adding up,” Park says. They brought the button back for the next generation of Fitbit devices.

And the rest is history

In 2010, its first full year on the market, the Fitbit sold some 50,000 units. Fitbit sales peaked in 2015, with almost 23 million devices sold that year, according to Statista. Since then, there’s been a bit of a drop-off, as multifunctional smartwatches have come down in price and grown in popularity, and as Fitbit knockoffs have entered the market. In 2021, Fitbit still boasted more than 31 million active users, according to Market.us.Media. And Fitbit may now be riding the trend back to simplicity, as people find themselves wanting to get rid of distractions and move back to simpler devices. I see this happening in my own family: My smartwatch-wearing daughter traded in that wearable for a Fitbit Charge 6 earlier this year.

Fitbit went public in 2015 at a valuation of $4.1 billion. In 2021 Google completed its $2.1 billion purchase of the company and absorbed it into its hardware division. In April of this year, Park and Friedman left Google. Early retirement? Hardly. The two, now age 47, have started a new company that’s currently in stealth mode.

The idea of encouraging people to be active by electronically tracking steps has had staying power.

“My father, who turned 80 on July 5, is fixated on his step count,” Friedman says. “From 11 at night until midnight, he’s in the parking garage, going up flights of stairs. And he is in better shape than I ever remember him.”

What could be a better reward than that?

This article appears in the September 2024 print issue.

This early version of the Hover flying camera generated a lot of initial excitement, but never fully took off.Zero Zero Robotics

This early version of the Hover flying camera generated a lot of initial excitement, but never fully took off.Zero Zero Robotics

To help a user fall asleep more quickly [top], bursts of pink noise are timed to generate a brain response that is out of phase with alpha waves and so suppresses them. To enhance deep sleep [bottom], the pink noise is timed to generate a brain response that is in phase with delta waves.Source: Elemind

To help a user fall asleep more quickly [top], bursts of pink noise are timed to generate a brain response that is out of phase with alpha waves and so suppresses them. To enhance deep sleep [bottom], the pink noise is timed to generate a brain response that is in phase with delta waves.Source: Elemind

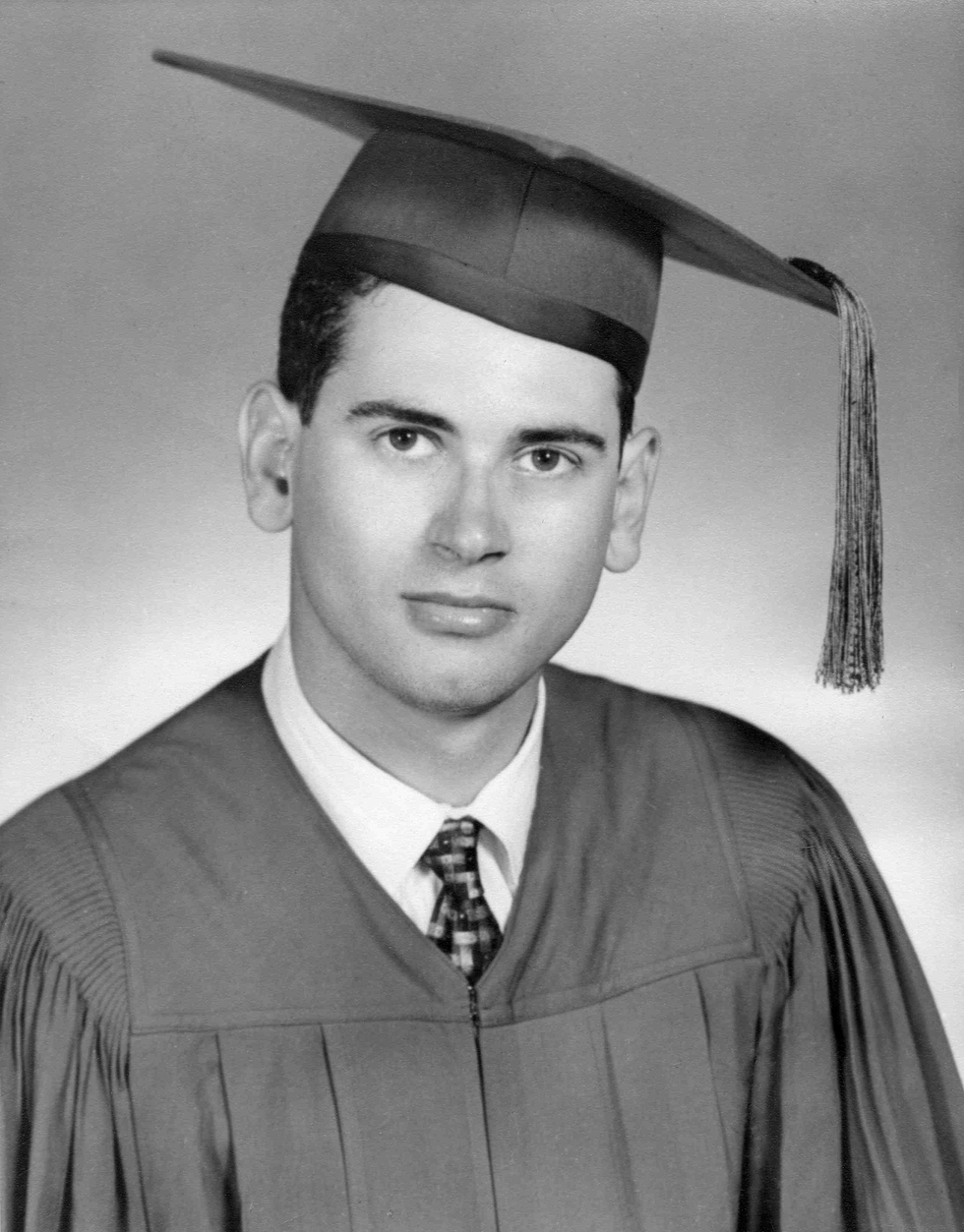

Bob Kahn graduated from high school in 1955.Bob Kahn

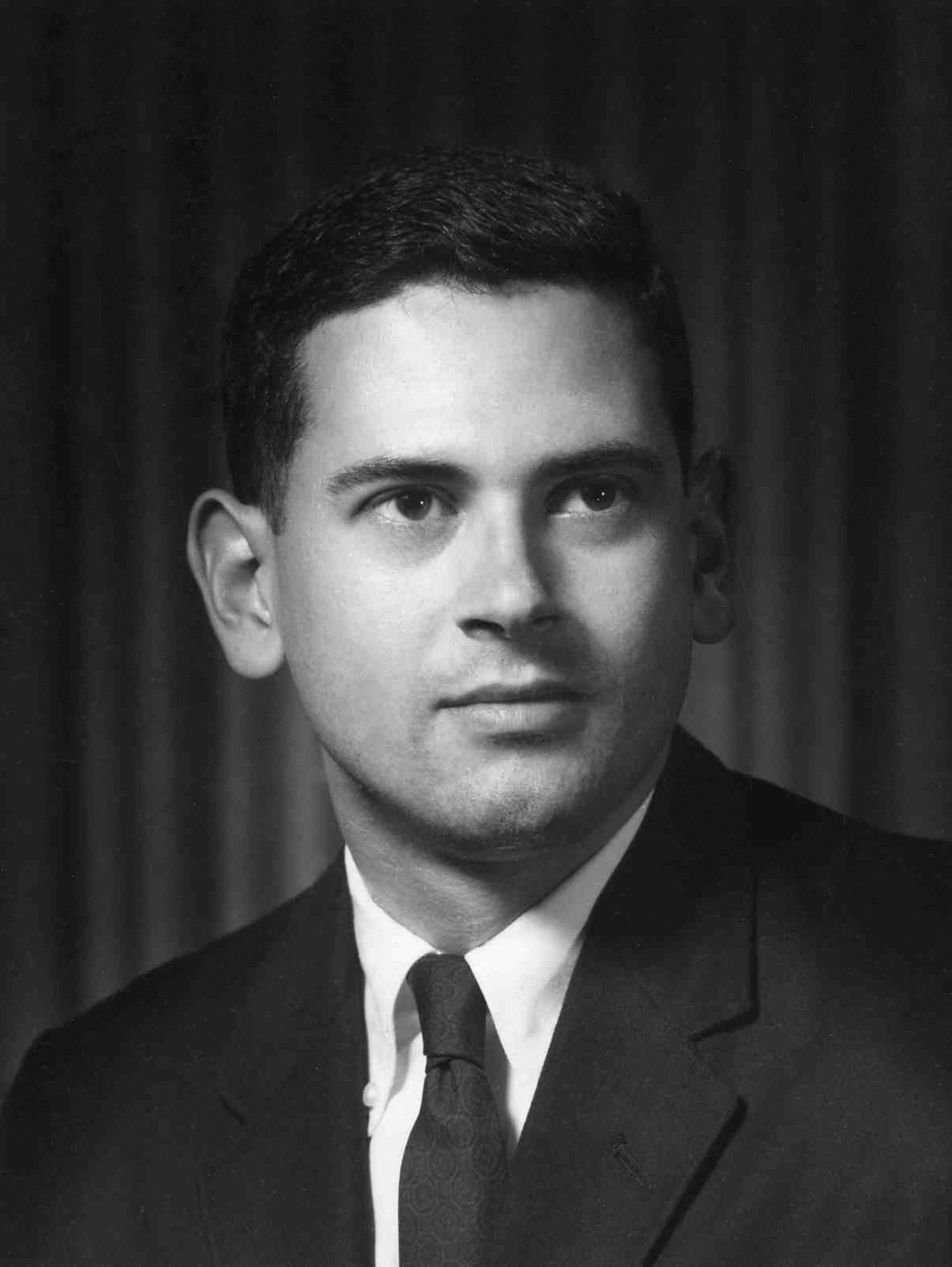

Bob Kahn graduated from high school in 1955.Bob Kahn Bob Kahn served on the MIT faculty from 1964 to 1966.Bob Kahn

Bob Kahn served on the MIT faculty from 1964 to 1966.Bob Kahn In 1997, President Bill Clinton [right] presented the National Medal of Technology to Bob Kahn [center] and Vint Cerf [left].Bob Kahn

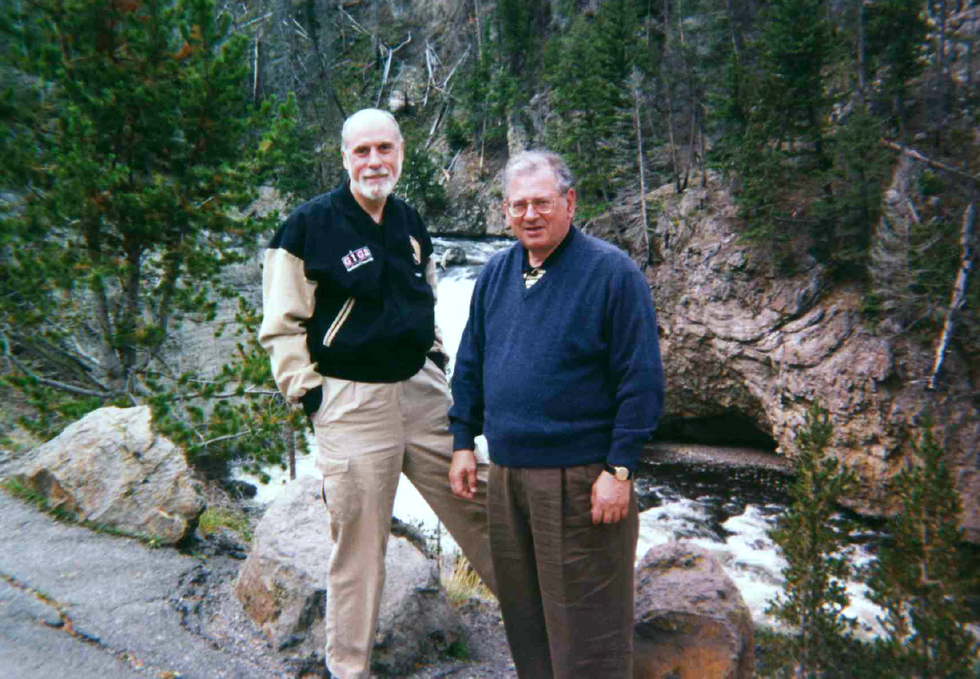

In 1997, President Bill Clinton [right] presented the National Medal of Technology to Bob Kahn [center] and Vint Cerf [left].Bob Kahn Bob Kahn and Vint Cerf visited Yellowstone National Park together in the early 2000s.Bob Kahn

Bob Kahn and Vint Cerf visited Yellowstone National Park together in the early 2000s.Bob Kahn A plaque commemorating the ARPANET now stands in front of the Arlington, Va., headquarters of the Defense Advanced Research Projects Agency (DARPA). Bob Kahn

A plaque commemorating the ARPANET now stands in front of the Arlington, Va., headquarters of the Defense Advanced Research Projects Agency (DARPA). Bob Kahn