MemryX MX3 edge AI accelerator delivers up to 5 TOPS, is offered in die, package, and M.2 and mPCIe modules

Jean-Luc noted the MemryX MX3 edge AI accelerator module while covering the DeGirum ORCA M.2 and USB Edge AI accelerators last month, so today we'll take a closer look at this AI chip and the corresponding modules, which run computer vision neural networks built with common frameworks such as TensorFlow, TensorFlow Lite, ONNX, PyTorch, and Keras.

MemryX MX3 Specifications

MemryX hasn't disclosed many performance figures for this chip; all we know is that it offers more than 5 TFLOPS. The listed specifications include:

- Bfloat16 activations

- Batch size = 1

- Weights: 4, 8, and 16-bit

- ~10M parameters stored on-die

- Host interfaces – PCIe Gen 3 I/O and/or USB 2.0/3.x

- Power consumption – ~1.0W

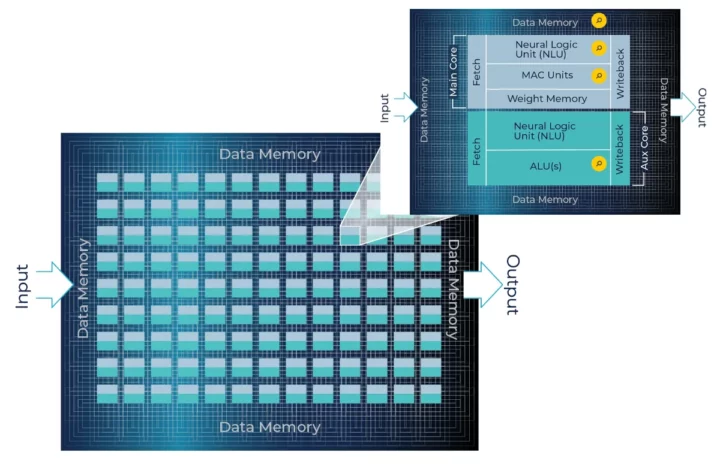

- 1-click compilation with the MX-SDK for mapping multi-layer neural networks
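To make the number formats in the list above a little more concrete, here is a minimal Python sketch (not MemryX code; all function names are hypothetical). It shows how a float32 activation is rounded to bfloat16, which keeps float32's sign bit and 8-bit exponent but only the top 7 mantissa bits, and how much storage ~10M weights need at 4, 8, and 16 bits. Round-to-nearest-even is an assumption here; the source does not say how the MX3 rounds.

```python
import struct

def float32_to_bfloat16_bits(x: float) -> int:
    """Round x (as float32) to bfloat16 using round-to-nearest-even; return the 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # raw IEEE-754 float32 bits
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        # NaN: truncate and force a quiet-NaN mantissa bit so the result stays NaN
        return (bits >> 16) | 0x0040
    # Add 0x7FFF, plus 1 if the kept low bit is odd, so exact halfway cases round to even
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def bfloat16_bits_to_float32(b: int) -> float:
    """Widen a bfloat16 pattern to float32 by zero-filling the 16 dropped mantissa bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

def weight_footprint_mb(num_params: int, bits_per_weight: int) -> float:
    """Storage for num_params weights at the given bit width, in megabytes (1 MB = 1e6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6

if __name__ == "__main__":
    bf = float32_to_bfloat16_bits(3.14159265)
    print(hex(bf), bfloat16_bits_to_float32(bf))  # 0x4049 3.140625
    for bits in (4, 8, 16):
        # 5.0, 10.0, and 20.0 MB respectively for ~10M weights
        print(f"{bits}-bit weights:", weight_footprint_mb(10_000_000, bits), "MB")
```

The pi example shows the trade-off: bfloat16 keeps float32's dynamic range but only about two to three significant decimal digits, which is generally considered acceptable for activations.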

Under the hood, the MX3 features MemryX Compute Engines (MCE) tightly coupled with at-memory computing. According to MemryX, the resulting proprietary dataflow architecture achieves up to 70% chip utilization with one-click compilation, compared to 15-30% for traditional CPUs, GPUs, and DSPs, which rely on legacy instruction sets and control-flow architectures, even after software tuning.
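Utilization matters because sustained throughput is roughly peak throughput multiplied by the fraction of compute units kept busy. The Python sketch below applies that simple model to the utilization figures quoted above, assuming (hypothetically) that both architectures share the same 5 TOPS peak so that only utilization differs. It is back-of-the-envelope arithmetic, not a benchmark, and the utilization numbers are MemryX's own claims.

```python
def effective_tops(peak_tops: float, utilization: float) -> float:
    """Sustained throughput = peak throughput x fraction of compute units kept busy."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_tops * utilization

if __name__ == "__main__":
    PEAK = 5.0  # hypothetical: same peak for both architectures, to isolate utilization
    dataflow = effective_tops(PEAK, 0.70)  # MemryX's claimed utilization
    for legacy_util in (0.15, 0.30):       # claimed range for CPUs/GPUs/DSPs after tuning
        legacy = effective_tops(PEAK, legacy_util)
        print(f"{legacy_util:.0%}: {legacy:.2f} TOPS vs {dataflow:.2f} TOPS "
              f"({dataflow / legacy:.2f}x)")
```

Under these assumptions, the claimed utilization gap alone translates into roughly 2.3x to 4.7x more usable throughput for the same peak rating.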

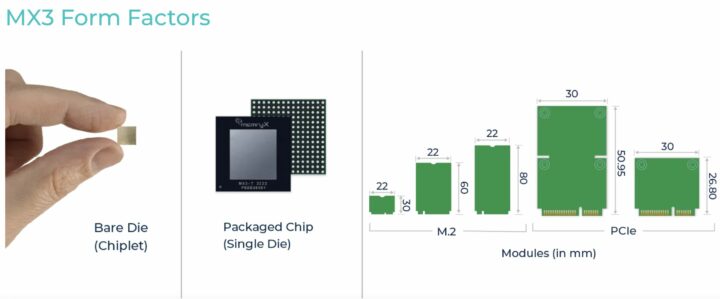

Form Factor

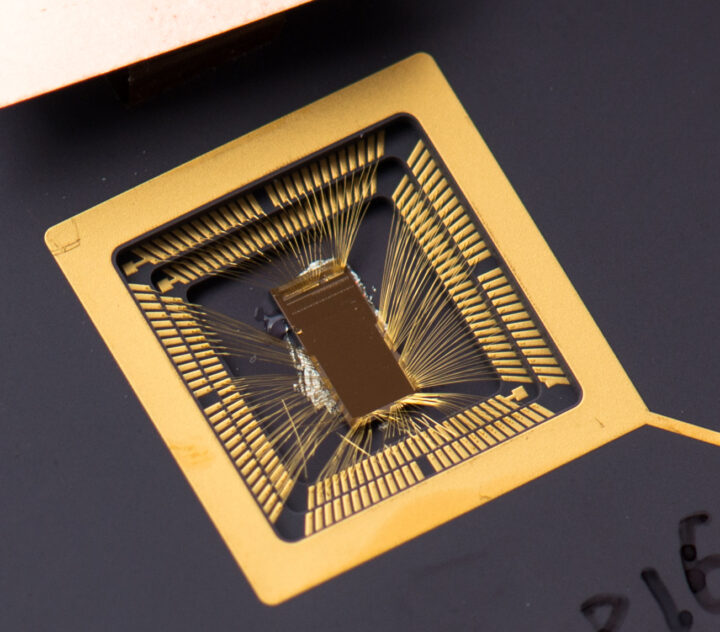

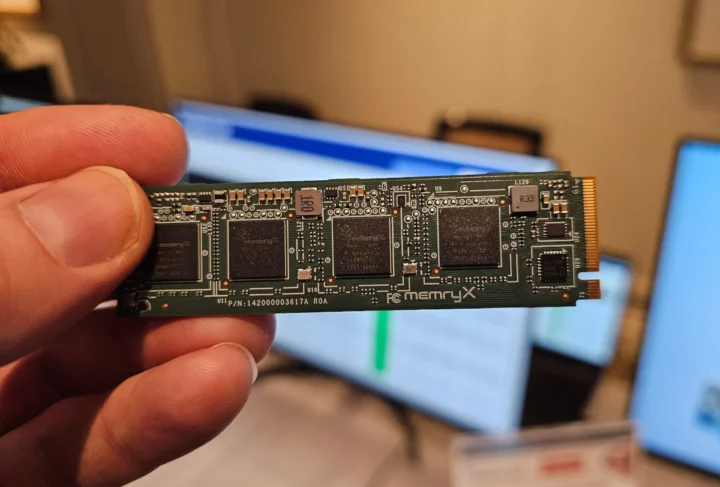

Form-factor-wise, this edge AI processor is offered either as a bare die, a single-die package, or in modules (mini PCIe or M.2) with one or more MemryX MX3 chips.

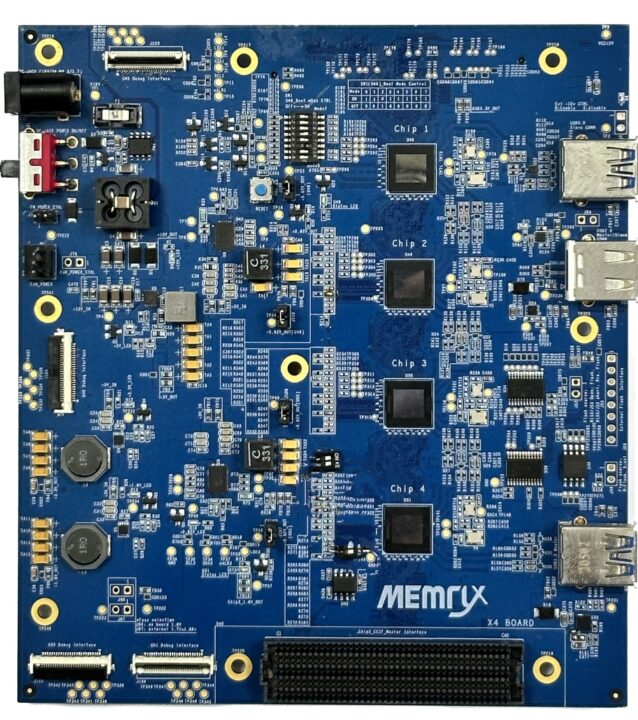

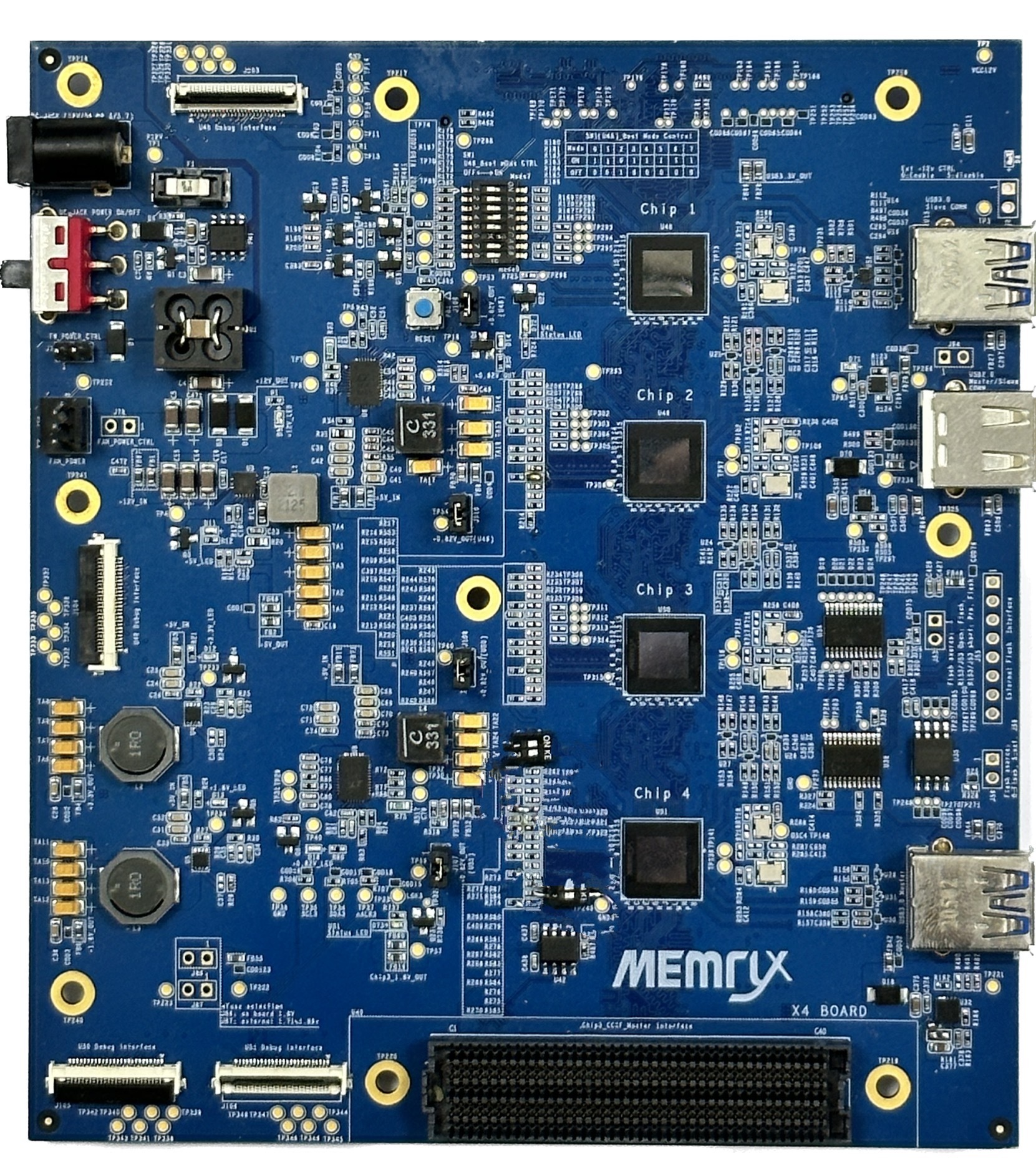

MemryX MX3 EVB

The MX3 EVB (Evaluation Board) is a PCBA with four MX3 chips, each in a single-die package, and multiple EVBs can be cascaded over a single interface to provide the required inference performance.
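Cascading boards is mostly a capacity question: with ~10M parameters stored on each die, larger models need their weights spread over several chips. The Python sketch below estimates how many MX3 chips and four-chip EVBs a model would need. It is a hypothetical sizing helper, not part of the MX SDK, and it assumes weights can be partitioned across chips without overhead and that peak throughput scales linearly, both of which are optimistic simplifications.

```python
import math

# Figures from the article; treat them as rough, vendor-supplied approximations.
PARAMS_PER_CHIP = 10_000_000   # "~10M parameters stored on-die"
CHIPS_PER_EVB = 4              # the MX3 EVB carries four MX3 chips
PEAK_TOPS_PER_CHIP = 5.0       # headline figure; linear scaling across chips is an assumption

def chips_needed(model_params: int) -> int:
    """Smallest number of MX3 chips whose combined on-die capacity holds the model's weights."""
    if model_params <= 0:
        raise ValueError("model_params must be positive")
    return math.ceil(model_params / PARAMS_PER_CHIP)

def evbs_needed(model_params: int) -> int:
    """Number of cascaded four-chip EVBs needed to supply chips_needed(model_params) chips."""
    return math.ceil(chips_needed(model_params) / CHIPS_PER_EVB)

def aggregate_peak_tops(num_chips: int) -> float:
    """Upper bound on combined peak throughput, assuming perfect linear scaling."""
    return num_chips * PEAK_TOPS_PER_CHIP

if __name__ == "__main__":
    for name, params in [("ResNet-50", 25_600_000), ("hypothetical 60M model", 60_000_000)]:
        chips = chips_needed(params)
        print(f"{name}: {chips} chips, {evbs_needed(params)} EVB(s), "
              f"<= {aggregate_peak_tops(chips):.0f} TOPS peak")
```

For example, ResNet-50's roughly 25.6M parameters would need three chips, which fits on a single EVB under these assumptions.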

MX3 SDK

The MX SDK helps simulate and deploy trained AI models. MemryX builds its products to:

- Provide real-world performance per watt

- Run models trained on any popular framework without requiring software changes or retraining

- Provide high scalability and granularity

- Run AI models equally well on any host processor, regardless of system load

- Provide the same 1-click SDK (compilation software) across all products

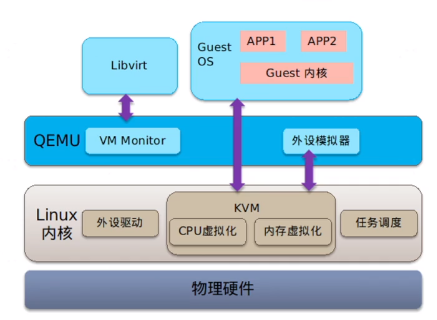

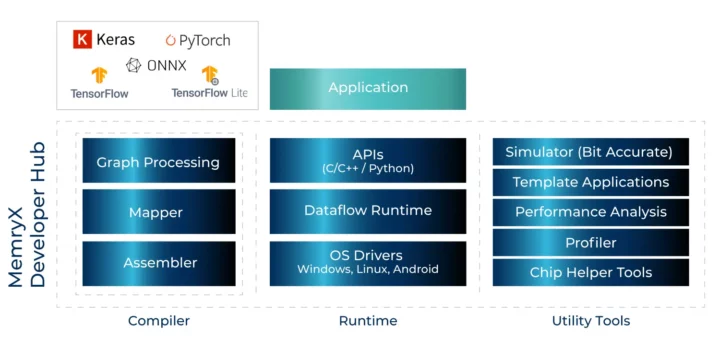

This SDK’s developer hub consists of a compiler (for graph processing, mapping, and assembling), utility tools (a bit-accurate simulator, performance analyzer, profiler, chip helper tools, and template applications), and a runtime environment with APIs, OS drivers, and a dataflow runtime.

The MX3 EVB can also be used for Edge Impulse deployments once dependencies such as Python 3.8+, the MemryX tools and drivers, and Edge Impulse for Linux are installed. Next, connect the board to Edge Impulse, then verify the connection by opening your project and clicking "Devices".

MemryX MX3 demo

While the company hasn't provided much detail about the chip's performance, it did upload a video demo using the virtual camera input of AirSim, a simulator used to create datasets for autonomous driving and flying, comparing a computer fitted with an MX3 M.2 module to one equipped with an NVIDIA GeForce RTX 4060 GPU.

Latency was very low on the MX3 module but increased drastically after switching to the NVIDIA RTX 4060 GPU, and the loud noise from its cooling fans was clearly noticeable.

More details may be found on the company’s website.

The post MemryX MX3 edge AI accelerator delivers up to 5 TOPS, is offered in die, package, and M.2 and mPCIe modules appeared first on CNX Software - Embedded Systems News.