Robot Dog Cleans Up Beaches With Foot-Mounted Vacuums

Cigarette butts are the second most common undisposed-of litter on Earth. Of the six trillion-ish cigarettes inhaled every year, it’s estimated that over 4 trillion of the butts are just tossed onto the ground, each one leaching over 700 different toxic chemicals into the environment. Let’s not focus on the fact that all those toxic chemicals are also going into people’s lungs, and instead talk about the ecosystem damage that they can do and also just the general grossness of having bits of sucked-on trash everywhere. Ew.

Preventing those cigarette butts from winding up on the ground in the first place would be the best option, but it would require a pretty big shift in human behavior. Operating under the assumption that humans changing their behavior is a nonstarter, roboticists from the Dynamic Legged Systems unit at the Italian Institute of Technology (IIT), in Genoa, have instead designed a novel platform for cigarette-butt cleanup in the form of a quadrupedal robot with vacuums attached to its feet.

There are, of course, far more efficient ways of at least partially automating the cleanup of litter with machines. The challenge is that most of that automation relies on mobility systems with wheels, which won’t work on the many beautiful beaches (and many beautiful flights of stairs) of Genoa. In places like these, it still falls to humans to do the hard work, which is less than ideal.

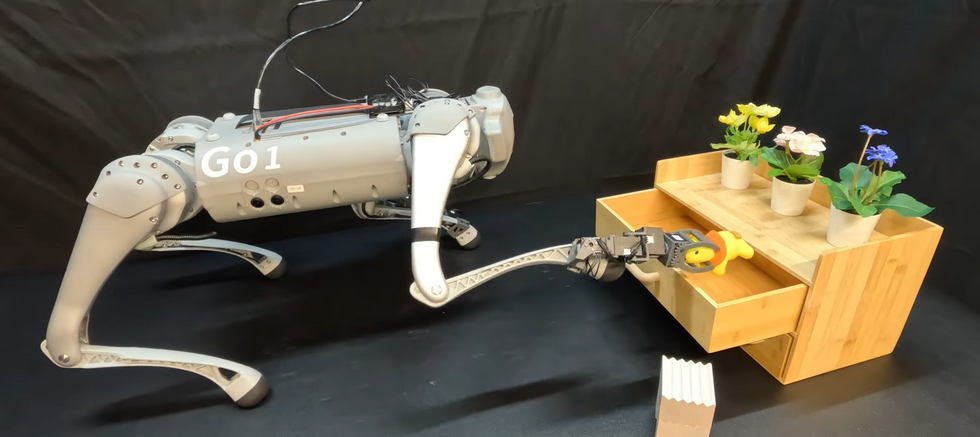

This robot, developed in Claudio Semini’s lab at IIT, is called VERO (Vacuum-cleaner Equipped RObot). It’s based around an AlienGo from Unitree, with a commercial vacuum mounted on its back. Hoses run from the vacuum down each leg to the foot, where a custom 3D-printed nozzle puts as much suction near the ground as possible without tripping the robot up. While the vacuum is novel, the real contribution here is how the robot autonomously locates things on the ground and then plans how to interact with those things using its feet.

First, an operator designates an area for VERO to clean, after which the robot operates by itself. After calculating a path that covers the entire area, the robot uses its onboard cameras and a neural network to detect cigarette butts. This is trickier than it sounds, because there may be a lot of cigarette butts on the ground, and they all probably look pretty much the same, so the system has to filter out all of the potential duplicates. The next step is to plan its next steps: VERO has to put the vacuum side of one of its feet right next to each cigarette butt while calculating a safe, stable pose for the rest of its body. Since this whole process can take place on sand or stairs or other uneven surfaces, VERO has to prioritize not falling over before it decides how to do the collection. The final collecting maneuver is fine-tuned using an extra Intel RealSense depth camera mounted on the robot’s chin.
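
To make the duplicate-filtering problem concrete, here is a minimal Python sketch of one way it could be handled. It is not the method from the paper, which the description above does not spell out. It assumes each detection has already been projected into a shared ground-plane frame as an (x, y) position in meters, and it treats any detection within a hypothetical 5-centimeter radius of an existing target as another sighting of the same butt. The function name `merge_duplicates` and the `radius` parameter are made up for illustration.

```python
import math


def merge_duplicates(detections, radius=0.05):
    """Greedily merge detections that fall within `radius` meters of a known target.

    `detections` is a list of (x, y) ground-plane positions in meters.
    Both the frame and the 5 cm default radius are assumptions for illustration.
    """
    targets = []  # each entry is [mean_x, mean_y, sighting_count]
    for x, y in detections:
        for t in targets:
            if math.hypot(x - t[0], y - t[1]) <= radius:
                # Same butt seen again: update the running mean of its position.
                t[2] += 1
                t[0] += (x - t[0]) / t[2]
                t[1] += (y - t[1]) / t[2]
                break
        else:
            # No existing target is close enough, so this is a new butt.
            targets.append([x, y, 1])
    return [(t[0], t[1]) for t in targets]


# Five raw detections that are really two butts seen from several camera frames.
detections = [(1.00, 2.00), (1.02, 2.01), (3.50, 0.40), (0.99, 1.98), (3.51, 0.41)]
targets = merge_duplicates(detections)
print(len(targets), "unique targets:", targets)
```

A fixed radius is the simplest possible choice. It would merge two butts lying right next to each other, so a real system would likely need something smarter, but the sketch shows why look-alike objects seen across many frames have to be reconciled by position rather than appearance.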

VERO has been tested successfully in six different scenarios that challenge both its locomotion and detection capabilities. IIT

Initial testing with the robot in a variety of different environments showed that it could successfully collect just under 90 percent of cigarette butts, which I bet is better than I could do, and I’m also much more likely to get fed up with the whole process. The robot is not very quick at the task, but unlike me it will never get fed up as long as it’s got energy in its battery, so speed is somewhat less important.

As far as the authors of this paper are aware (and I assume they’ve done their research), this is “the first time that the legs of a legged robot are concurrently utilized for locomotion and for a different task.” This is distinct from other robots that can (for example) open doors with their feet, because those robots stop using the feet as feet for a while and instead use them as manipulators.

So, this is about a lot more than cigarette butts, and the researchers suggest a variety of other potential use cases, including spraying weeds in crop fields, inspecting cracks in infrastructure, and placing nails and rivets during construction.

Some use cases include potentially doing multiple things at the same time, like planting different kinds of seeds, using different surface sensors, or driving both nails and rivets. And since quadrupeds have four feet, they could potentially host four completely different tools, and the software that the researchers developed for VERO can be slightly modified to put whatever foot you want on whatever spot you need.

VERO: A Vacuum‐Cleaner‐Equipped Quadruped Robot for Efficient Litter Removal, by Lorenzo Amatucci, Giulio Turrisi, Angelo Bratta, Victor Barasuol, and Claudio Semini from IIT, was published in the Journal of Field Robotics.