50 Years Later, This Apollo-Era Antenna Still Talks to Voyager 2

For more than 50 years, Deep Space Station 43 has been an invaluable tool for space probes as they explore our solar system and push into the beyond. The DSS-43 radio antenna, located at the Canberra Deep Space Communication Complex, near Canberra, Australia, keeps open the line of communication between humans and probes during NASA missions.

Today more than 40 percent of all data retrieved by celestial explorers, including the Voyagers, New Horizons, and the Mars Curiosity rover, comes through DSS-43.

“As Australia’s largest antenna, DSS-43 has provided two-way communication with dozens of robotic spacecraft,” IEEE President-Elect Kathleen Kramer said during a ceremony where the antenna was recognized as an IEEE Milestone. It has supported missions, Kramer noted, “from the Apollo program and NASA’s Mars exploration rovers such as Spirit and Opportunity to the Voyagers’ grand tour of the solar system.

“In fact,” she said, “it is the only antenna remaining on Earth capable of communicating with Voyager 2.”

Why NASA needed DSS-43

Maintaining two-way contact with spacecraft hurtling billions of kilometers away across the solar system is no mean feat. Researchers at NASA’s Jet Propulsion Laboratory, in Pasadena, Calif., knew that communication with distant space probes would require a dish antenna with unprecedented accuracy. In 1964 they built DSS-42—DSS-43’s predecessor—to support NASA’s Mariner 4 spacecraft as it performed the first-ever successful flyby of Mars in July 1965. The antenna had a 26-meter-diameter dish. Along with two other antennas at JPL and in Spain, DSS-42 obtained the first close-up images of Mars. DSS-42 was retired in 2000.

NASA engineers predicted that to carry out missions beyond Mars, the space agency needed more sensitive antennas. So in 1969 they began work on DSS-43, which originally had a 64-meter-diameter dish.

DSS-43 was brought online in December 1972, just in time to receive video and audio transmissions sent by Apollo 17 from the surface of the moon. It had greater reach and sensitivity than DSS-42, even after DSS-42’s dish was upgraded in the early 1980s.

The gap between the two antennas’ capabilities widened in 1987, when DSS-43 was equipped with a 70-meter dish in anticipation of Voyager 2’s 1989 encounter with the planet Neptune.

DSS-43 has been indispensable in maintaining contact with the deep-space probe ever since.

The dish’s size isn’t its only remarkable feature. The dish’s manufacturer took great pains to ensure that its surface had no bumps or rough spots. The smoother the dish surface, the better it is at focusing incident waves onto the signal detector so there’s a higher signal-to-noise ratio.

DSS-43 boasts a pointing accuracy of 0.005 degrees (18 arc seconds)—which is important for ensuring that it is pointed directly at the receiver on a distant spacecraft. Voyager 2 broadcasts using a 23-watt radio. But by the time the signals traverse the multibillion-kilometer distance from the heliopause to Earth, their power has faded to a level 20 billion times weaker than what is needed to run a digital watch. Capturing every bit of the incident signals is crucial to gathering useful information from the transmissions.
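The figures above can be checked with a rough sketch. The degrees-to-arc-seconds conversion is exact. The signal estimate is only an illustration of the inverse-square law: it assumes a 20-billion-km distance and a transmitter that radiates equally in all directions, which understates the real signal because Voyager 2's high-gain antenna concentrates its beam toward Earth. The function names are illustrative, not taken from any NASA software.

```python
import math

ARCSEC_PER_DEGREE = 3600  # 60 arc minutes x 60 arc seconds


def degrees_to_arcsec(angle_deg: float) -> float:
    """Convert an angle in degrees to arc seconds."""
    return angle_deg * ARCSEC_PER_DEGREE


def isotropic_flux_w_per_m2(power_w: float, distance_m: float) -> float:
    """Power per unit area at a given distance, assuming the transmitter
    radiates equally in all directions (inverse-square law)."""
    return power_w / (4 * math.pi * distance_m ** 2)


pointing_arcsec = degrees_to_arcsec(0.005)

# Assumed distance: 20 billion km, expressed in meters.
distance_m = 20e9 * 1_000
flux = isotropic_flux_w_per_m2(23.0, distance_m)

# Geometric area of a 70-meter dish (ignores aperture efficiency).
dish_area_m2 = math.pi * (70 / 2) ** 2
collected_w = flux * dish_area_m2

print(f"Pointing accuracy: {pointing_arcsec:.0f} arc seconds")
print(f"Isotropic flux at Earth: {flux:.2e} W/m^2")
print(f"Power falling on a 70 m dish: {collected_w:.2e} W")
```

Even under this simplified model, the arithmetic shows why collecting area matters: the power available scales directly with the area of the dish.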

The antenna has a transmitter capable of 400 kilowatts, with a beam width of 0.0038 degrees. Without the 1987 upgrade, signals sent from DSS-43 to a spacecraft venturing outside the solar system likely never would reach their target.

NASA’s Deep Space Network

The Canberra Deep Space Communication Complex, where DSS-43 resides, is one of three such tracking stations operated by JPL. The other two are the Goldstone Deep Space Communications Complex near Barstow, Calif., home to DSS-14, and the Madrid Deep Space Communications Complex in Robledo de Chavela, Spain, home to DSS-63. Together, the facilities make up the Deep Space Network, which is the most sensitive scientific telecommunications system on the planet, according to NASA. At any given time, the network is tracking dozens of spacecraft carrying out scientific missions. The three facilities are spaced about 120 degrees of longitude apart. The strategic placement ensures that as the Earth rotates, at least one of the facilities has a line of sight to any tracked object that is close to the plane of the solar system.
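The coverage argument can be sketched with a deliberately simplified geometric model. The station longitudes below are approximate values supplied for illustration, and the model assumes a station can see any target within 90 degrees of longitude of itself. It ignores station latitude, terrain, and minimum elevation limits, so it is a sanity check rather than a real visibility calculation.

```python
# Approximate east longitudes in degrees (illustrative assumptions).
STATION_LONGITUDES_DEG = {
    "Goldstone": -116.9,
    "Madrid": -4.2,
    "Canberra": 149.0,
}


def angular_separation_deg(a_deg: float, b_deg: float) -> float:
    """Smallest angle between two longitudes, in the range 0 to 180."""
    diff = abs(a_deg - b_deg) % 360
    return min(diff, 360 - diff)


def visible_stations(target_longitude_deg: float,
                     half_width_deg: float = 90.0) -> list[str]:
    """Stations whose idealized horizon-to-horizon view includes the
    longitude directly beneath the target."""
    return [
        name
        for name, lon in STATION_LONGITUDES_DEG.items()
        if angular_separation_deg(lon, target_longitude_deg) < half_width_deg
    ]


# Sweep the target around the globe in 1-degree steps.
always_covered = all(visible_stations(lon) for lon in range(-180, 180))
print("At least one station in view at every longitude:", always_covered)
```

Under these assumptions the widest gap, between Madrid and Canberra, still leaves every longitude within 90 degrees of some station.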

But DSS-43 is the only member of the trio that can maintain contact with Voyager 2. Ever since its flyby of Neptune’s moon Triton in 1989, Voyager 2 has been on a trajectory below the plane of the planets, so that it no longer has a line of sight with any radio antennas in the Earth’s Northern Hemisphere.

To ensure that DSS-43 can still place the longest of long-distance calls, the antenna underwent a round of updates in 2020. A new X-band cone was installed. DSS-43 transmits radio signals in the X (8 to 12 gigahertz) and S (2 to 4 GHz) bands; it can receive signals in the X, S, L (1 to 2 GHz), and K (12 to 40 GHz) bands. The dish’s pointing accuracy also was tested and recertified.

Once the updates were completed, test commands were sent to Voyager 2. After about 37 hours, DSS-43 received a response from the space probe confirming it had received the call, and it executed the test commands with no issues.

DSS-43 is still relaying signals between Earth and Voyager 2, which passed the heliopause in 2018 and is now some 20 billion km from Earth.
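The distance and the response time quoted above are consistent with each other, as a short calculation shows. This sketch assumes a fixed distance of 20 billion km and ignores the time the spacecraft takes to process a command. The function name is illustrative.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458


def round_trip_hours(distance_km: float) -> float:
    """Time for a radio signal to reach a spacecraft and for the
    reply to return, in hours."""
    one_way_seconds = distance_km / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_seconds / 3600


voyager2_hours = round_trip_hours(20e9)  # assumed distance: 20 billion km
print(f"Round-trip light time: {voyager2_hours:.1f} hours")
```

At that distance the round trip comes to about 37 hours.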

[From left] IEEE Region 10 director Lance Fung, Kevin Furguson, IEEE President-Elect Kathleen Kramer, and Ambarish Natu, past chair of the IEEE Australian Capital Territory Section, at the IEEE Milestone dedication ceremony held at the Canberra Deep Space Communication Complex in Australia. Furguson is the director of the complex. Ambarish Natu

Other important missions

DSS-43 has played a vital role in missions closer to Earth as well, including NASA’s Mars Science Laboratory mission. After the space agency launched Curiosity, a golf cart–size rover, in 2011 to explore the Gale crater and Mount Sharp on Mars, DSS-43 tracked the rover the following year as it made its nail-biting seven-minute descent through Mars’s atmosphere. It took roughly 20 minutes for radio signals to traverse the 320-million-km distance between Mars and Earth, and then DSS-43 delivered the good news: The rover had landed safely and was operational.
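Part of what made the descent nail-biting is that the signal delay was longer than the descent itself. The sketch below uses only the figures given above (a 320-million-km distance and a seven-minute descent) and treats the distance as constant. The variable names are illustrative.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458

MARS_DISTANCE_KM = 320e6   # distance quoted in the article
DESCENT_MINUTES = 7        # descent duration quoted in the article

# One-way travel time for a radio signal from Mars to Earth.
one_way_delay_minutes = MARS_DISTANCE_KM / SPEED_OF_LIGHT_KM_PER_S / 60

# If the delay exceeds the descent time, the landing is already over
# before the first signal from atmospheric entry reaches Earth.
landed_before_first_signal = one_way_delay_minutes > DESCENT_MINUTES

print(f"One-way signal delay: {one_way_delay_minutes:.1f} minutes")
print("Descent finished before entry signal arrived:",
      landed_before_first_signal)
```

With these numbers the delay is a little under 18 minutes, so controllers on Earth could only watch events that had already played out.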

“NASA plans to send future generations of astronauts from the Moon to Mars, and DSS-43 will play an important role as part of NASA’s Deep Space Network,” says Ambarish Natu, an IEEE senior member who is a past chair of the IEEE Australian Capital Territory (ACT) Section.

DSS-43 was honored with an IEEE Milestone in March during a ceremony held at the Canberra Deep Space Communication Complex.

“This is the second IEEE Milestone recognition given in Australia, and the first for ACT,” Lance Fung, IEEE Region 10 director, said during the ceremony. A plaque recognizing the technology is now displayed at the complex. It reads:

First operational in 1972 and later upgraded in 1987, Deep Space Station 43 (DSS-43) is a steerable parabolic antenna that supported the Apollo 17 lunar mission, Viking Mars landers, Pioneer and Mariner planetary probes, and Voyager’s encounters with Jupiter, Saturn, Uranus, and Neptune. Planning for many robotic and human missions to explore the solar system and beyond has included DSS-43 for critical communications and tracking in NASA’s Deep Space Network.

Administered by the IEEE History Center and supported by donors, the Milestone program recognizes outstanding technical developments around the world. The IEEE Australian Capital Territory Section sponsored the nomination.

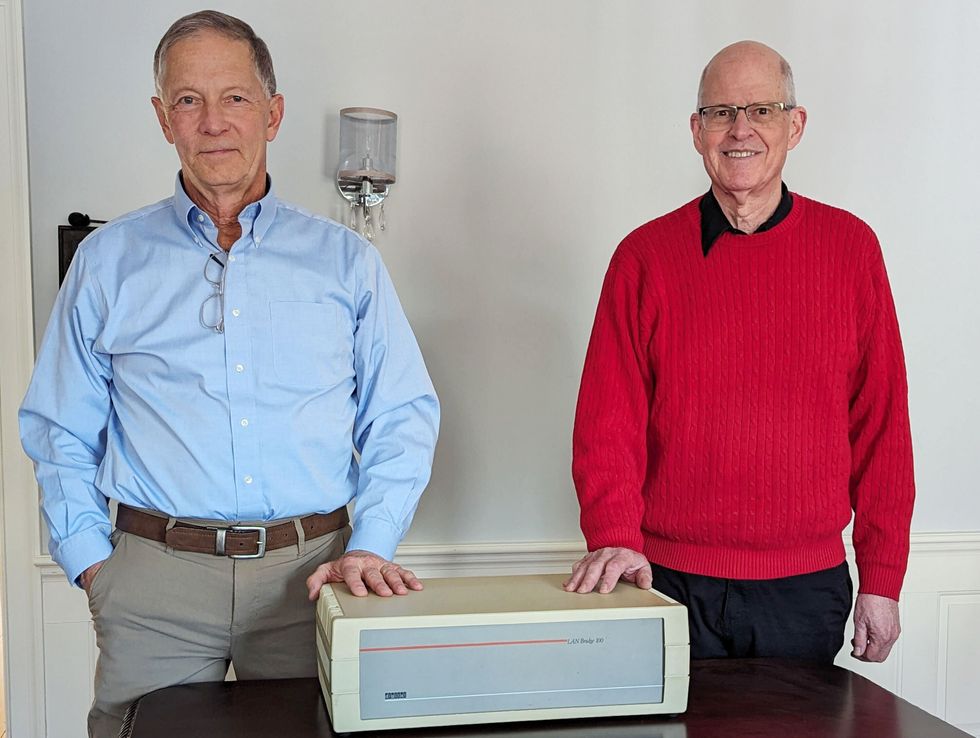

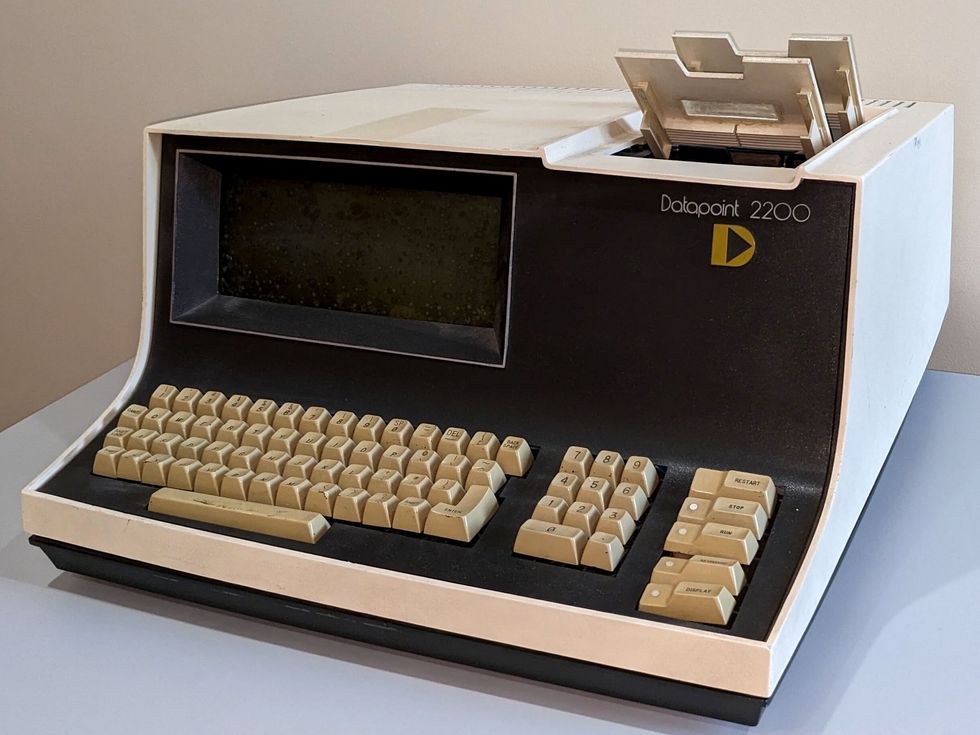

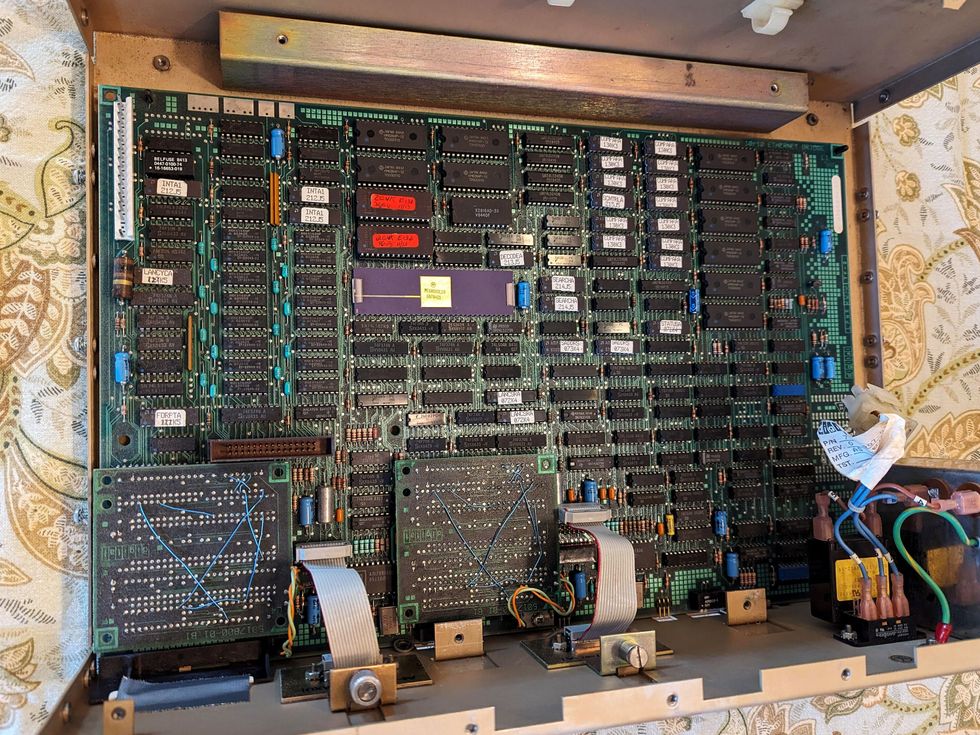
