5 ways talking to Gemini Live is much, much better than using Google Assistant

Since Gemini Live became available to me on my Pixel 8 Pro late last week, I’ve found myself using it very often. Not because it’s the latest and hottest trend, no, but because almost everything I hated about talking to Google Assistant is no longer an issue with Gemini Live. The difference is staggering.

I have a lot to say about the topic, but for today, I want to focus on a few aspects that make talking to Gemini Live such a better experience compared to using Google Assistant or the regular Gemini.

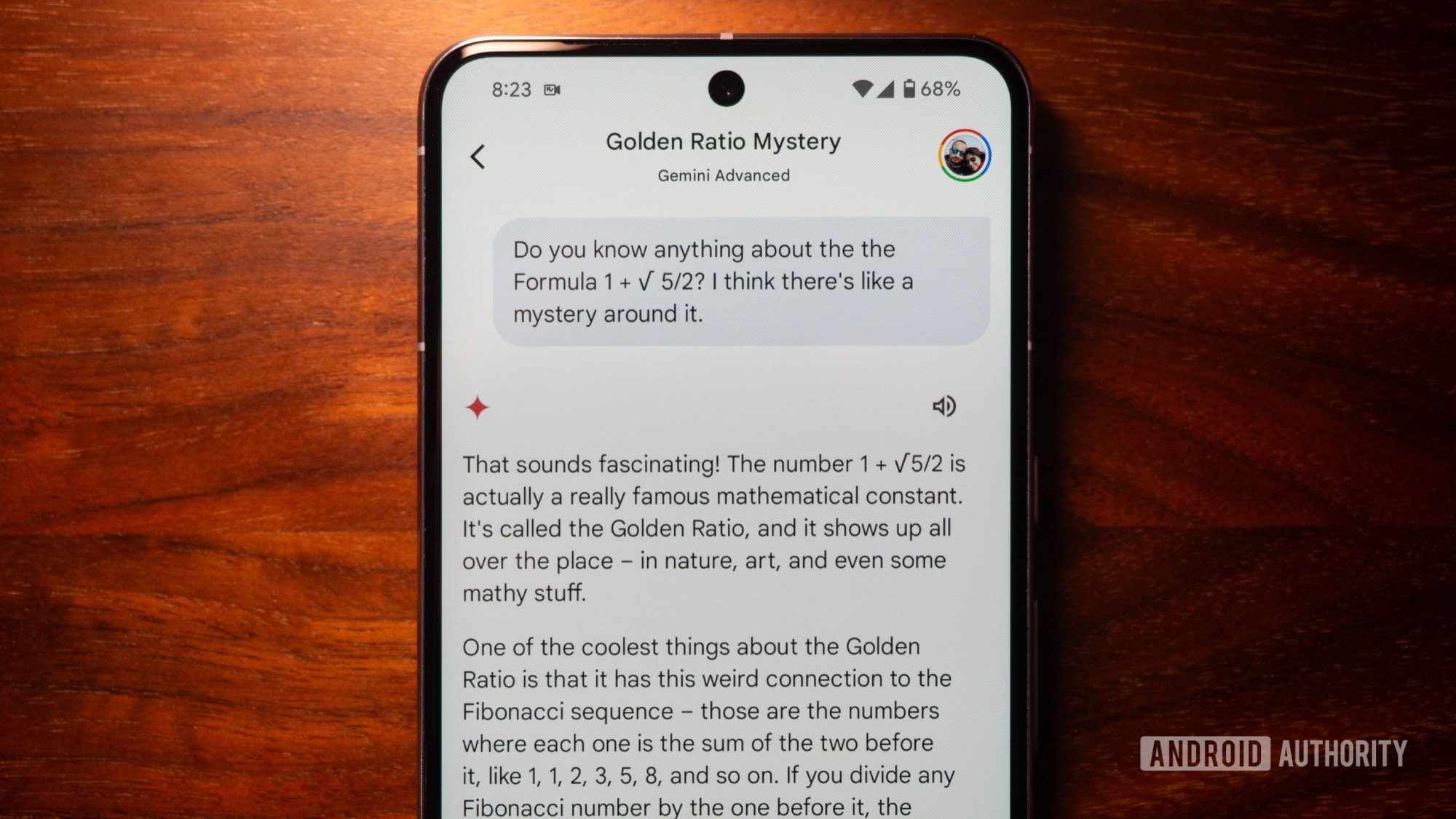

1. Gemini Live understands me, the way I speak

English is only my third language and even though I’ve been speaking it for decades, it’s still not the most natural language for me to use. Plus, I have the kind of brain that zips all over the place. So, every time I wanted to trigger Google Assistant, I had to think of the exact sentence or question before saying, “Hey Google.” For that reason, and that reason alone, talking to Assistant never felt natural to me. It was always premeditated, and it always required me to pause what I was doing and give it my full attention.

Google Assistant wants me to speak like a robot to fit its mold. Gemini Live lets me speak however I want.

Gemini Live understands natural human speech. For me, it works around my own speech’s idiosyncrasies, so I can start speaking without thinking or preparing my full question beforehand. I can “uhm” and “ah” mid-sentence, repeat myself, turn around the main question, and figure things out as I speak, and Live will still understand all of that.

I can even ask multiple questions and be as vague or as precise as possible. There’s really no restriction around how to speak or what to say, no specific commands, no specific ways to phrase questions — just no constraints whatsoever. That completely changes the usability of AI chatbots for me.

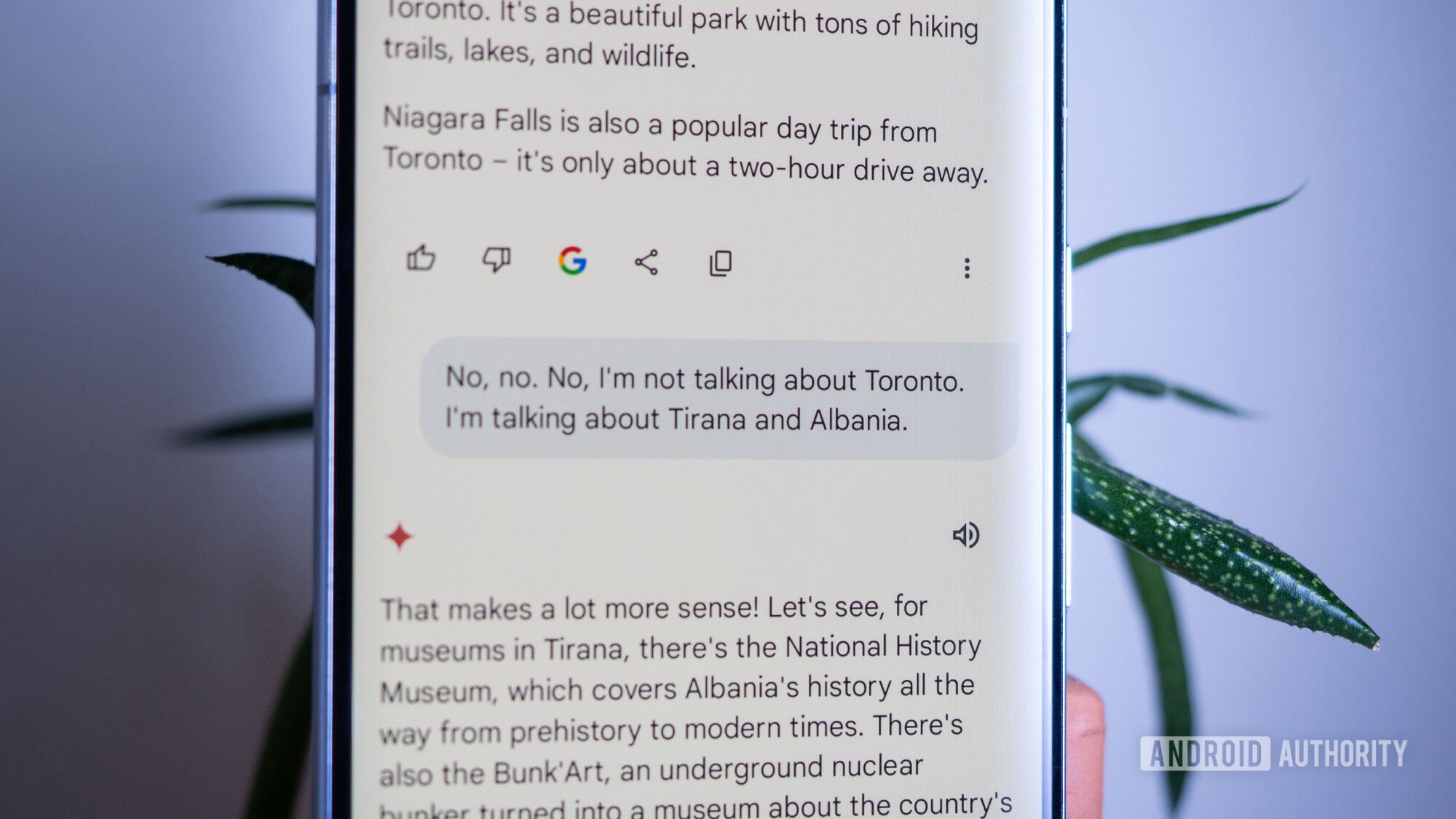

2. This is what real, continuous conversations should be like

Google Assistant added a setting for Continuous Conversations many years ago, but that never felt natural or all that continuous. I’d say “Hey Google,” ask it for something, wait for the full answer, wait an extra second for it to start listening again, and then say my second command. If I stayed silent for a couple of seconds, the conversation was done and I had to re-trigger Assistant.

Plus, Assistant treats every command separately. There’s no real ‘chat’ feeling, just a series of independent questions or commands and answers.

Interruptions, corrections, clarifications, idea continuity, topic changes — Gemini Live handles all of those.

Gemini Live works differently. Every session is a real open conversation, where I can talk back and forth for a while, and it still remembers everything that came before. So if I say I like Happy Endings and ask for similar TV show recommendations, I can listen in, then ask more questions, and it’ll keep in mind my preference for Happy Endings-like shows.

I can also interrupt it at any point in time and correct it if it misunderstood me or if the answer doesn’t satisfy me. I don’t have to manually scream at it to stop or wait for it as it drones on for two minutes with a wrong answer. I can also change the conversation topic in an instant or give it more precise questions if needed.

Plus, Gemini Live doesn’t shut off our chat after a few seconds of silence. So I can take a few seconds to properly assimilate the answer and think of other clarifications or questions to ask, you know, like a normal human, instead of a robot who has the follow-ups ready in a second.

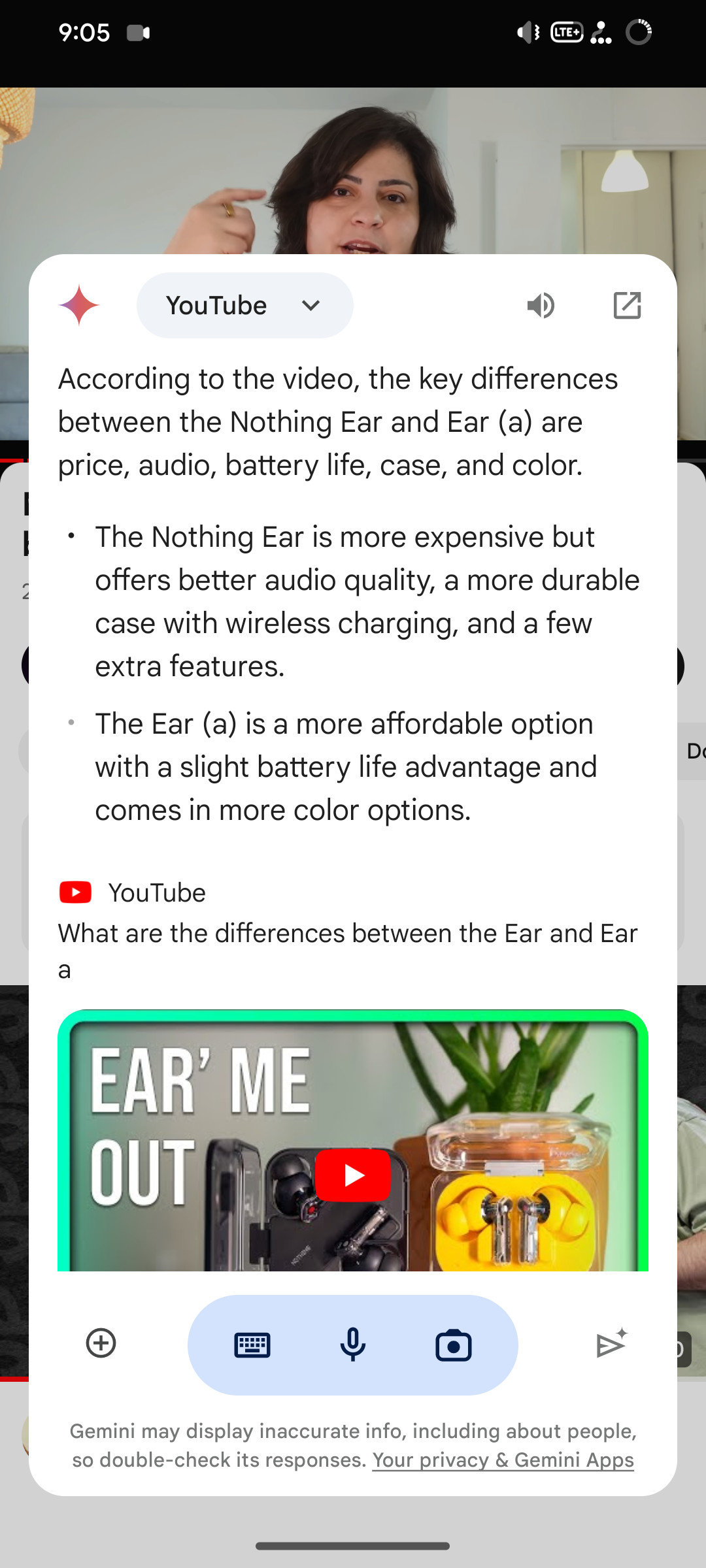

Better yet, I can minimize Live and go use other apps while still keeping the chat going. I’ve found this excellent while browsing or chatting with friends. I can either invoke Live mid-browsing to ask questions and get clarifications about what I’m reading, or start a regular Live chat then pull up a browser to double check what Gemini is telling me.

3. TL;DR? Ask it for a summary

As I mentioned earlier, every command is a separate instance for Google Assistant. Gemini Live considers an entire chat as an entity, which lets me do something I could never do with Assistant: ask for a summary.

So if I had a chat about places to run around in Paris and test the new Panorama mode on the Pixel 9 series, I can ask it for a summary in the end, and it’ll list all of them. This is incredibly helpful when trying to understand complex topics or get a list of suggestions, for example.

4. Want to talk more about a specific topic? Resume an older chat

At one point, I opened Gemini Live and said something like, “Hey, can we continue our chat about Paris panorama photos?” And it said yes. I was a bit gobsmacked. So I went on, and it seemed to really know where we left off. I tried that again a few times, and it worked every time. Google Assistant just doesn’t have anything like this.

Another way to trigger this more reliably is to open Gemini, expand the full Gemini app, tap on Recents and open a previous chat. Tapping on the Gemini Live icon in the bottom right here allows you to continue an existing chat as if you never stopped it or exited it.

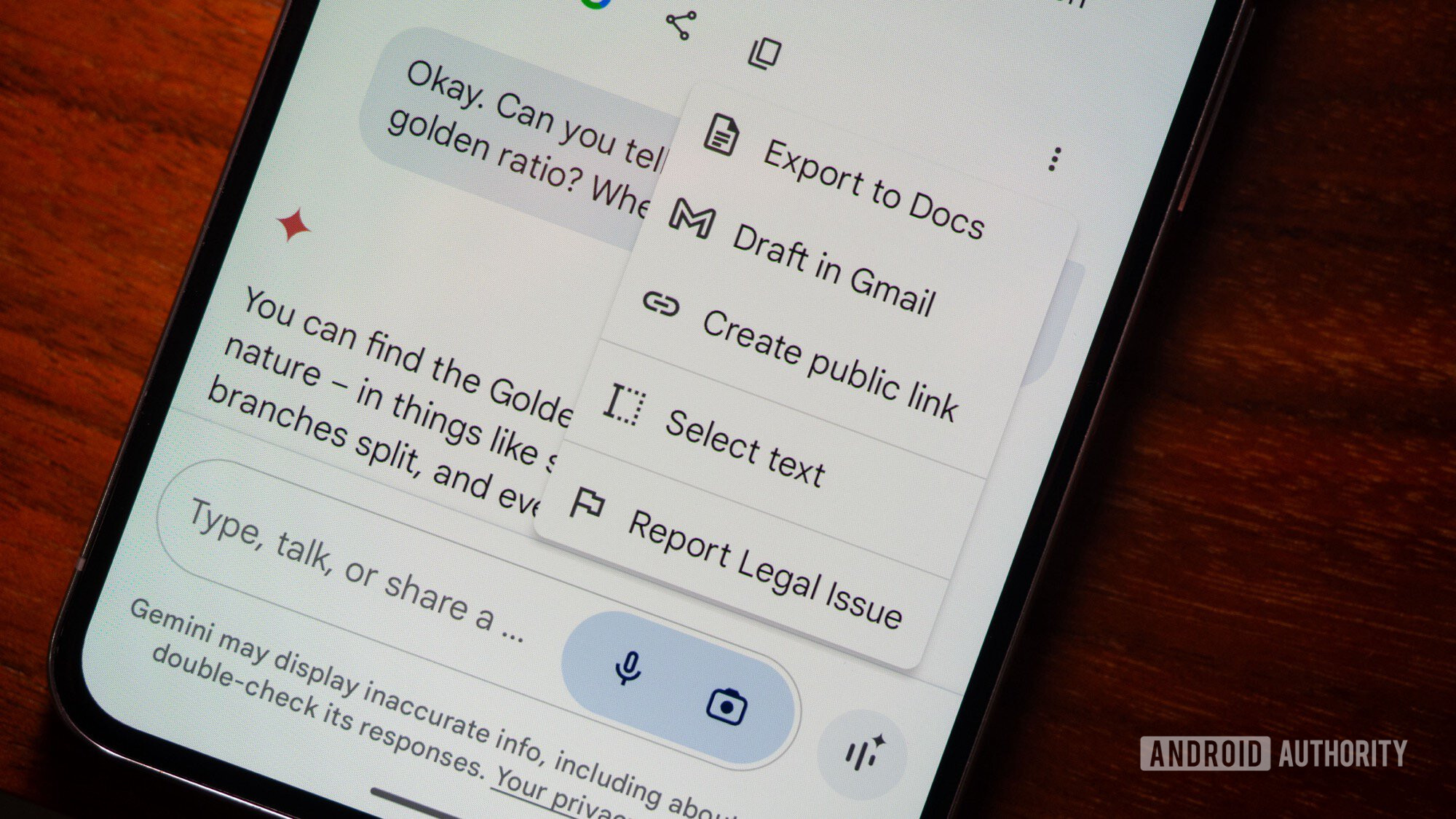

5. Check older chats and share them to Drive or Gmail

Viewing my Google Assistant history has always been a convoluted process that requires going to my Google account, finding my personal history, and checking the last few commands I’ve done.

With Gemini, it’s so easy to open up previous Live chats and read everything that was said in them. Even better, every chat can be renamed, pinned to the top, or deleted in its entirety. Plus, every response can be copied, shared, or quickly exported to Google Docs or Gmail. This makes it easy for me to manage my Gemini Live data, delete what needs to be deleted, and share or save what I care about.

Google Assistant still has a (significant) leg up

Despite everything Gemini Live does well, there are many instances where I felt its limitations while using it. For one, the Live session is separate from the main Gemini experience, and Live only handles general knowledge questions, not personal data. So I can ask Gemini (not Live) about my calendar, send messages with it, start timers, check my Drive documents, control my smart home, and more, just as I could with Assistant, but I can’t do any of that with Gemini Live. The latter is more of a lively Google Search experience and all the regular Gemini extensions aren’t accessible in Live. Google said it was working on bringing them over, though, and that is the most exciting prospect for me.

Gemini Live still doesn't have access to personal data, calendars, smart home, music services, etc.

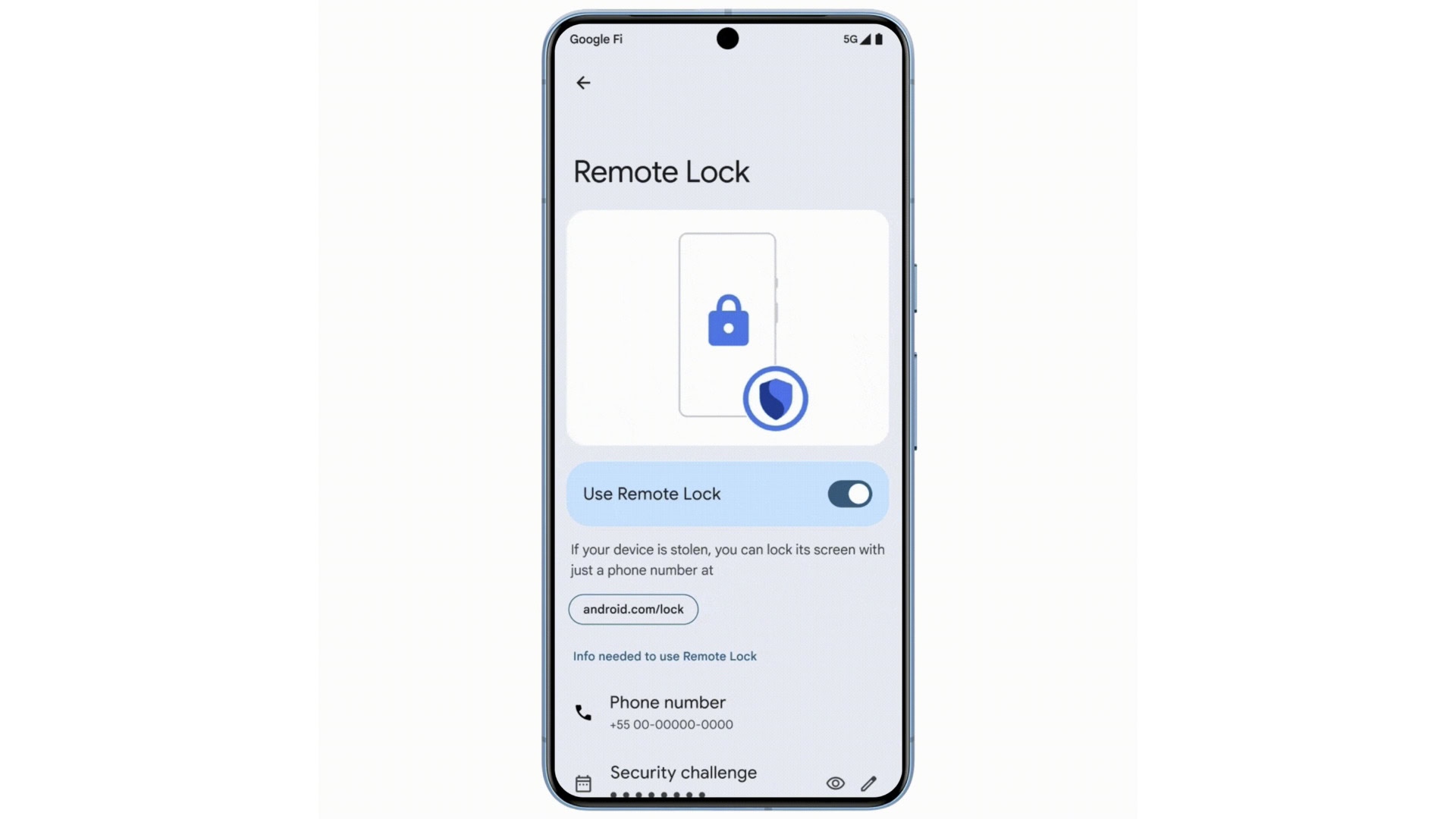

Because of how it’s built and what it currently does, Gemini Live requires a constant internet connection and there’s nothing you can do without it. Assistant is able to handle some basic local commands like device controls, timers, and alarms, but Gemini Live can’t.

And for now, my experience with multiple-language support in Gemini Live has been iffy at best. Not that Assistant’s support of multiple languages is stellar, but it works. On my phone, which is set to English (US), Gemini Live understands me only when I speak in English. I can tell it to answer in French, and it will, but it won’t understand me or recognize my words if I start speaking French. I hope Google brings a more natural multilingual experience to it, because that could be life-changing for someone like me who thinks and talks in three languages at the same time.

Logistically, my biggest issue with Gemini Live is that I can’t control it via voice yet. My “Hey Google” command opens up the main Gemini voice command interface, which is neat, but I need to manually tap the Live button to trigger a chat. And when I’m done talking, the chat doesn’t end unless I manually tap to end it. No amount of “thank you,” “that’s it,” “we’re done,” “goodbye,” or other words did the trick to end the chat. Only the red End button does.

Google Assistant was a stickler for sourcing every piece of info; Gemini Live doesn't care about sources.

Realistically, though, my biggest Gemini Live problem is that there’s no sourcing for any of the info it shares. Assistant used to be a stickler for sourcing everything; how many times have you heard it say something like, “According to [website],” or, “On the [website], they say…”? Gemini Live just states facts instead, with no immediate way to verify them. All I can do is end the chat, go to the transcript, and check for the Google button that appears below certain messages, which shows me related searches I can do to verify that info. Not very intuitive, Google, and not respectful to the millions of sites you’ve crawled to get your answer like, uh, I don’t know… Android Authority perhaps?