Credit: Robert Triggs / Android Authority

Qualcomm’s Snapdragon X series of processors is designed for PCs, or more precisely, Windows on Arm Copilot Plus laptops. The chips take some of the Snapdragon sauce we’re familiar with from high-end smartphones and blend it with the high-performance requirements of the PC space. The aim is to deliver performance that rivals Intel and Apple with the energy efficiency we’ve become accustomed to in smartphones.

The core ingredients common to all Snapdragon X series chips are Qualcomm’s custom Arm- rather than x86-based Oryon CPU (no Intel or AMD here), a bigger version of its Adreno GPU taken from mobile, Hexagon NPU smarts for AI, and top-tier networking that enables the latest Wi-Fi and 5G standards. Microsoft chips in, providing the emulation layer in Windows on Arm to run x64 applications that haven’t yet been ported to run natively on Arm processors.

Here’s everything you need to know about the Snapdragon X series inside the latest Windows laptops.

Snapdragon X Elite vs X Plus explained

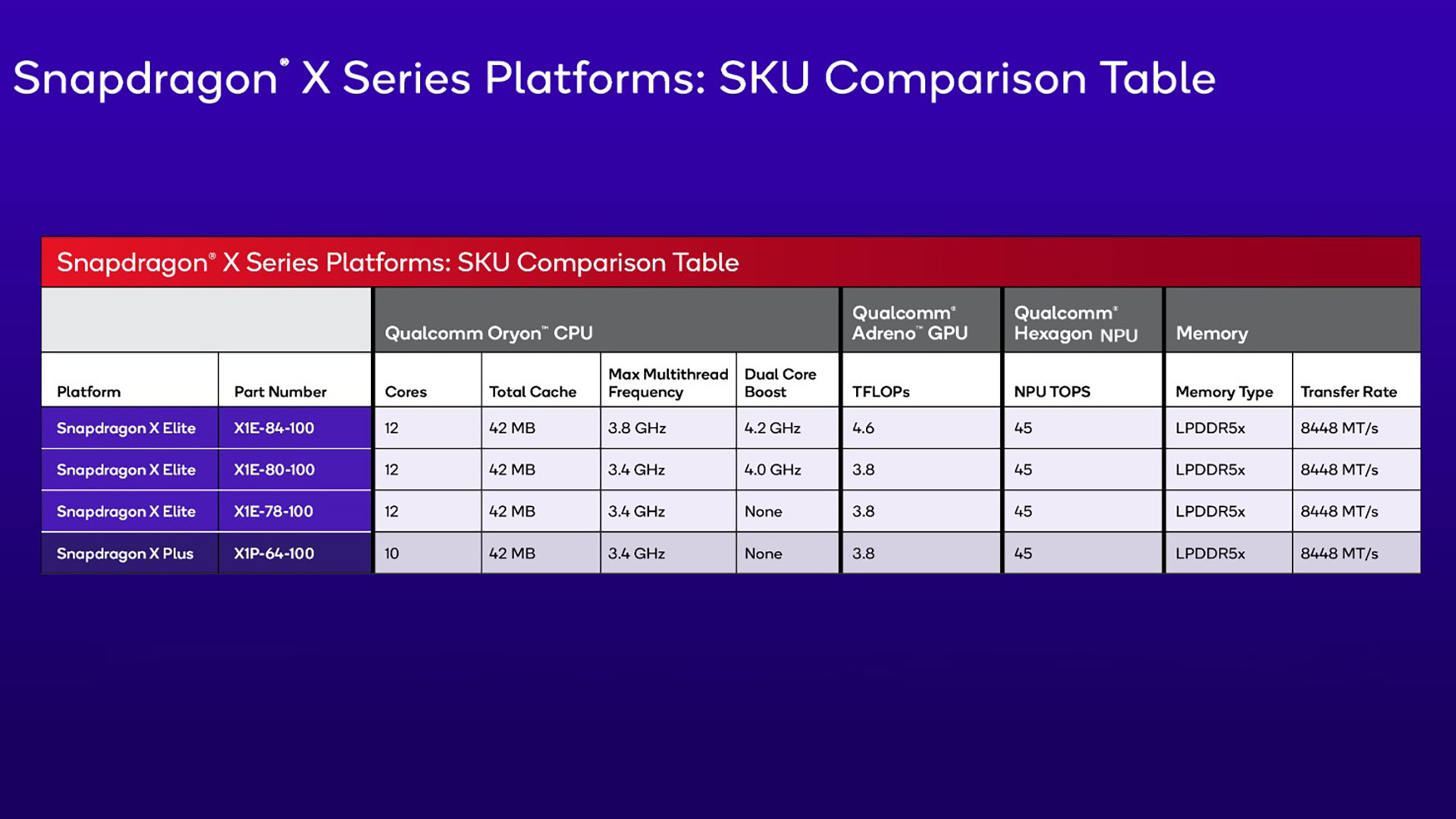

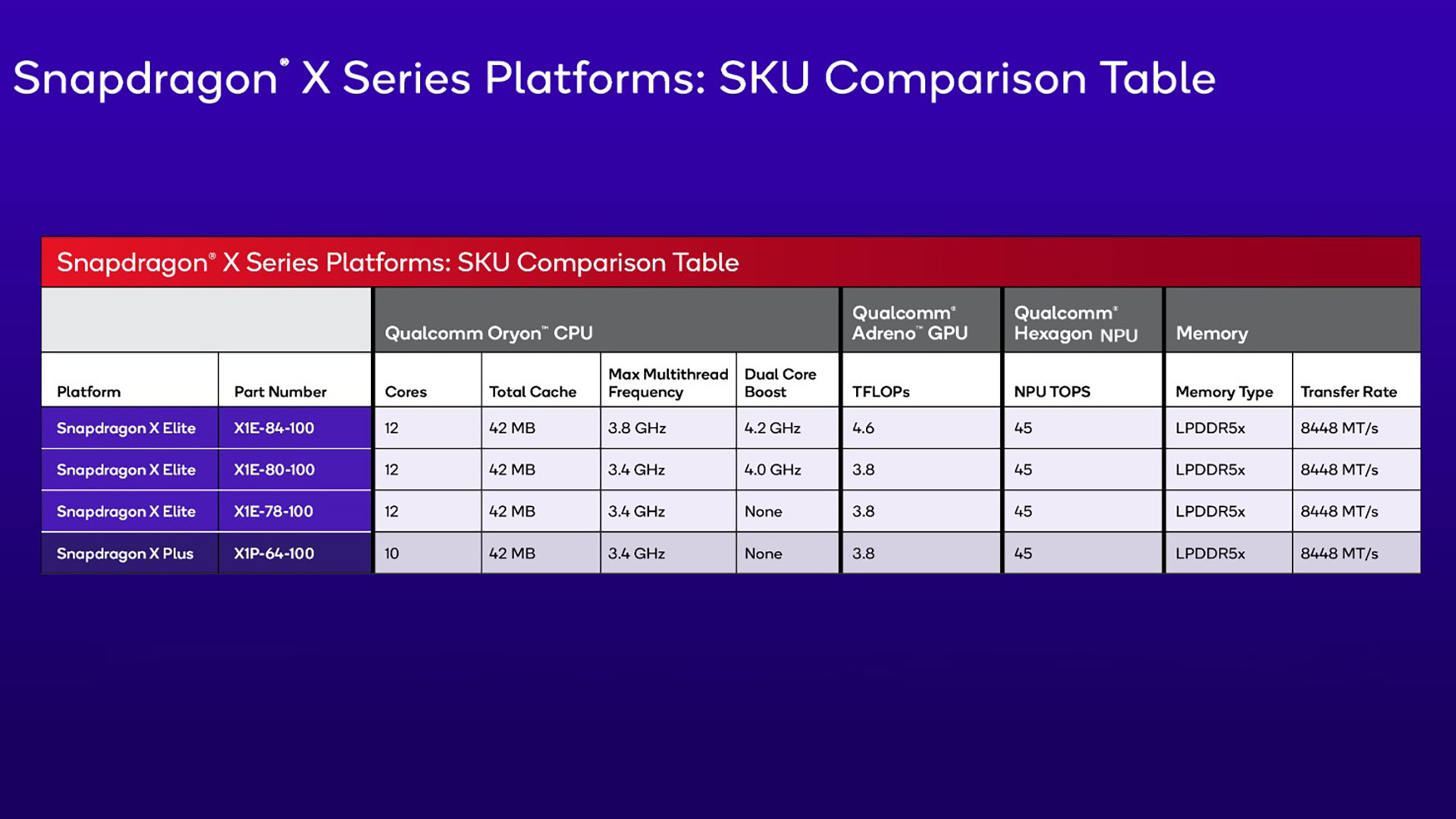

Snapdragon X comes in two major flavors: X Elite, which powers the first wave of top-tier Copilot Plus PCs, and X Plus, destined for more affordable laptops later in 2024. Officially, Qualcomm has four Snapdragon X SKUs, three under the X Elite branding and one more affordable X Plus unit. There is also a reported low-end X Plus model (X1P-42-100), which we leaked, but we haven’t heard anything official about it yet.

So what’s the difference between Snapdragon X Elite and X Plus, besides their intended price points? Elite boasts 12 Oryon CPU cores versus 10 for the Plus, and there’s also a smaller eight-core Plus model that Qualcomm didn’t officially announce. Elite models also have higher all-core and two-core turbo clock speeds, up to 4.2GHz compared to the Plus’ 3.4GHz. This varies by specific model, but the top-tier Elite models deliver Apple M-series-rivaling performance, with higher power consumption to boot.

The top-tier X1E-84-100 SKU also has a more powerful GPU than the other models, hitting 4.6 TFLOPS versus 3.8 TFLOPS for the standard Adreno GPU setup. This is thanks to a higher GPU frequency of 1.5GHz, up from 1.25GHz.

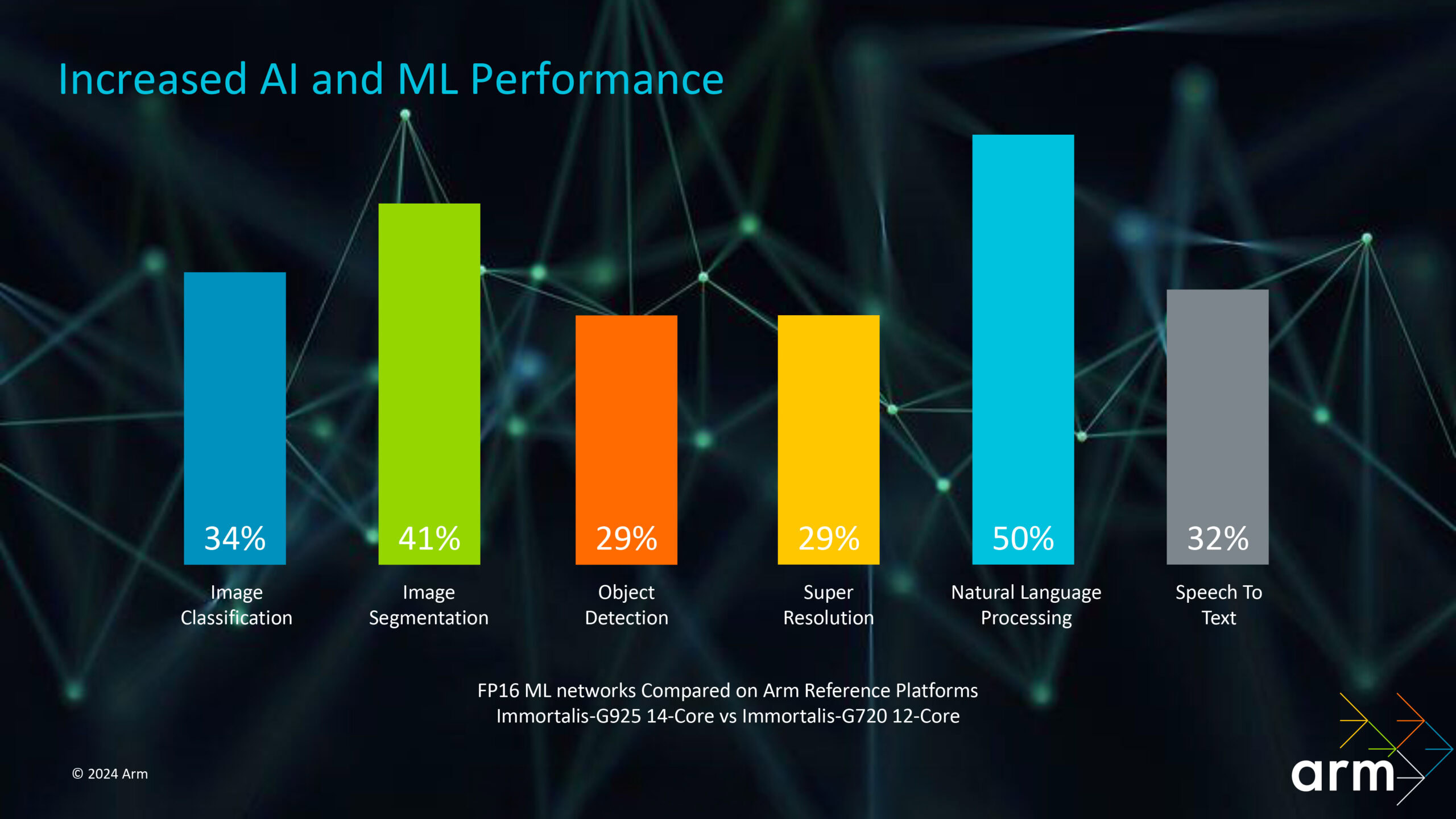

Fortunately, all Snapdragon X models sport the same 45 TOPS Neural Processing Unit (NPU), ensuring they can all run the same AI features. If you’re unfamiliar, an NPU augments a traditional CPU with hardware dedicated to the number crunching behind machine learning (AI). For those workloads, an NPU is not only faster but also more power efficient.

NPUs are purpose-built to handle machine learning workloads for Copilot Plus. Every Snapdragon X chip has the same one.

The whole series supports LPDDR5X memory at 8448MT/s, 4K120 video decoding, and 8+4 lanes of PCIe 4.0 for storage and the like. The exception is the unofficial X1P-42-100, which supposedly drops to 4K60 decoding and 4+4 PCIe 4.0 lanes. The range is manufactured on TSMC’s N4 process and supports Wi-Fi 7, Bluetooth 5.4, and 5G networking, the latter via a discrete modem.
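The 8448MT/s figure can be turned into a rough peak-bandwidth number: transfers per second times bytes moved per transfer. The sketch below assumes a 128-bit memory interface, which this article doesn’t state, so treat the result as an estimate; the helper name `peak_bandwidth_gbs` is our own.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s.

    Bandwidth = transfers per second x bytes moved per transfer.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# LPDDR5X-8448 comes from the spec list above; the 128-bit bus is an assumption.
bw = peak_bandwidth_gbs(8448, 128)
print(f"~{bw:.0f} GB/s")  # prints ~135 GB/s under these assumptions
```

Real-world throughput always lands below this theoretical ceiling.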

The bottom line is that CPU performance is the big differentiator within the Snapdragon X line. There’s a showcase X Elite chip that pushes performance on both the CPU and GPU fronts (no doubt the model benchmarkers will want), but without knowing its TDP, it might not be the most interesting chip in the range. The other Elite chips are more conservative on clocks and power, whilst the Plus steps performance down just a little with a smaller CPU configuration.

Snapdragon X – Oryon CPU deep dive

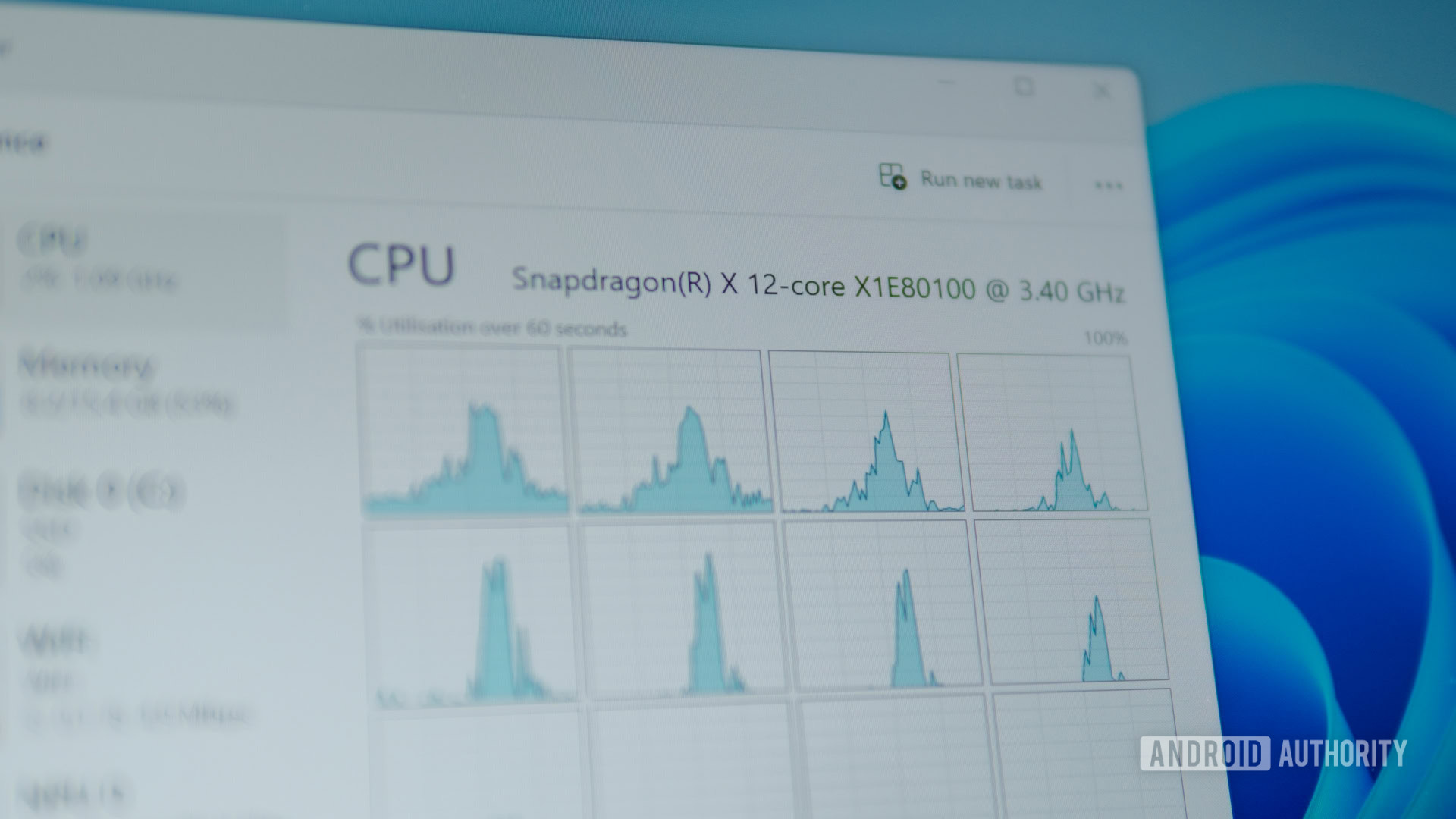

Speaking of CPUs, perhaps the most interesting aspect of the Snapdragon X series is Qualcomm’s in-house Oryon CPU. I say Qualcomm’s CPU, but the design comes from Nuvia, a company Qualcomm bought for $1.4 billion in 2021, which had started work on a custom Arm CPU for data centers called Phoenix. That work would quickly become Oryon for Windows on Arm devices.

The most interesting thing about Oryon is that it’s not based on the x86/x64 architecture that PC stalwarts AMD and Intel use. Instead, Oryon is built on the Arm architecture (Armv8.7-A, to be precise) found in smartphone processors and Apple’s M-series laptop chips. However, the latest of those chips have moved on to Armv9, which introduces additional important features.

Oryon is an Arm-based CPU, rather than x86/x64 like rivals Intel and AMD.

Anyway, let’s start with the high-level topology. Snapdragon X uses three clusters of up to four cores (though the design can technically support eight cores per cluster). Unlike smartphones, there aren’t separate performance-optimized and efficiency-optimized CPU cores. There’s no Arm-style big.LITTLE or Intel-type low-power E-cores; every Oryon core shares the same microarchitecture. However, it’s likely that different clusters have different peak frequencies to balance power consumption. For instance, we know that two CPU cores, each in a different cluster, can hit the peak boost clock.

Each cluster has its own shared 12MB L2 cache, giving four cores access to a large pool of local memory for multi-threaded performance. When one CPU cluster needs data held by another, it uses cluster-to-cluster snooping to grab it. There’s also a smaller 6MB L3 cache as part of the memory subsystem shared across the CPU clusters, GPU, and NPU, with a latency of just 6-29 nanoseconds for fast access. Altogether, that’s a hefty cache footprint in the vein of Apple’s M series (Apple is estimated to use even bigger caches), and it’s likely key to Qualcomm reaching a similar level of performance.
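To put the cache figures in one place, here’s a minimal sketch that tallies the 12-core X Elite’s topology from the numbers above: three four-core clusters, 12MB of L2 per cluster, and 6MB of L3. The `SnapdragonXTopology` class is purely illustrative, and the grand total is only approximate because the L3 is shared with the GPU and NPU rather than reserved for the CPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SnapdragonXTopology:
    """Hypothetical container for the CPU cache figures quoted in the article."""
    clusters: int
    cores_per_cluster: int
    l2_per_cluster_mb: int
    shared_l3_mb: int  # shared with the GPU and NPU, not CPU-exclusive

    @property
    def cores(self) -> int:
        return self.clusters * self.cores_per_cluster

    @property
    def total_l2_mb(self) -> int:
        return self.clusters * self.l2_per_cluster_mb

    @property
    def l2_per_core_mb(self) -> float:
        # Average share when every core in a cluster is busy; a lone active
        # core can use the whole cluster's L2.
        return self.l2_per_cluster_mb / self.cores_per_cluster


elite = SnapdragonXTopology(clusters=3, cores_per_cluster=4,
                            l2_per_cluster_mb=12, shared_l3_mb=6)
print(elite.cores, elite.total_l2_mb, elite.total_l2_mb + elite.shared_l3_mb)
# prints: 12 36 42
```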

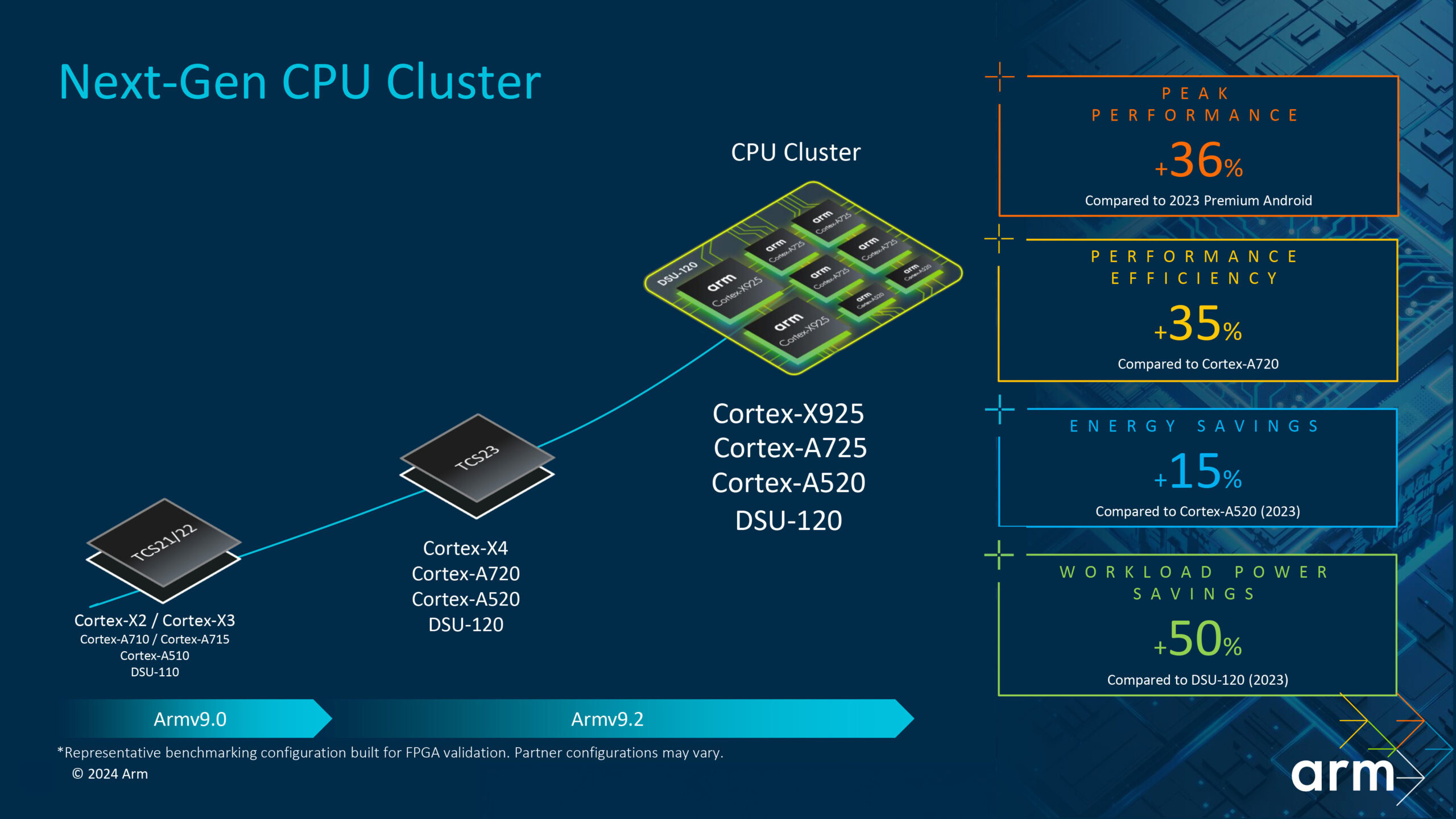

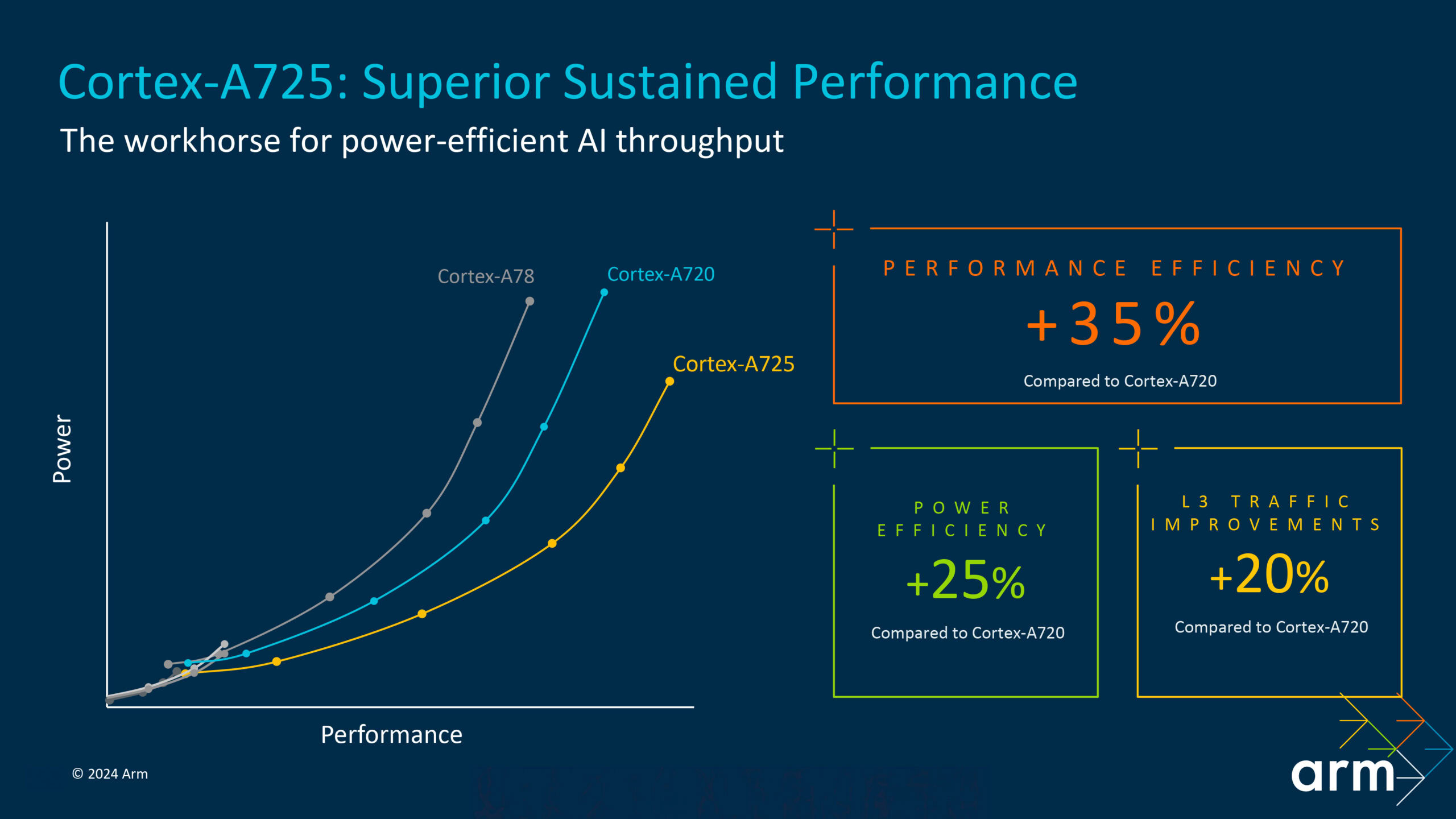

Peeking inside each core, Oryon provides six integer execution units, four floating-point units (two with multiply-accumulate for machine learning workloads), and four load/store units. Importantly, each FP unit supports 128-bit NEON for number crunching on smaller data types right down to INT8, but not as small as the INT4 used by some highly compressed smartphone machine-learning models. This helps mitigate the lack of SVE (introduced in Armv9) and the wider vector pipelines that we see in modern AMD and Intel chips. Still, that’s a pretty big CPU core, a smidgen larger (execution-wise) than Arm’s latest Cortex-X925 destined for 2025 smartphones.
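A quick way to see what 128-bit NEON means in practice is to count how many values of each type fit in one register. This sketch assumes each of the four FP units issues one full-width vector operation per cycle, which is our simplification rather than a published Oryon figure; the x86 comparison simply uses the AVX2 (256-bit) and AVX-512 (512-bit) register widths.

```python
def lanes(vector_bits: int, element_bits: int) -> int:
    """Number of elements of a given width that fit in one SIMD register."""
    return vector_bits // element_bits


NEON_BITS = 128  # per-unit vector width, from the article
FP_UNITS = 4     # FP/vector units per Oryon core, from the article

for name, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8)]:
    per_unit = lanes(NEON_BITS, bits)
    # Assumes every unit issues one full-width operation per cycle (our simplification).
    print(f"{name}: {per_unit} per register, "
          f"{per_unit * FP_UNITS} per cycle across {FP_UNITS} units")

# Wider x86 vectors hold more lanes per instruction.
for isa, width in [("AVX2", 256), ("AVX-512", 512)]:
    ratio = lanes(width, 32) // lanes(NEON_BITS, 32)
    print(f"{isa}: {ratio}x the FP32 lanes of one NEON register")
```

Having four such units per core is one way to claw back throughput that wider single vectors would otherwise provide.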

No efficiency cores here: Snapdragon X goes all in with up to 12 big CPU cores.

Keeping that CPU core fed is a major task. Qualcomm accomplishes this with a large 192KB L1 instruction cache and 96KB L1 data cache, paired with eight-wide instruction decoding. The re-order buffer holds 650 micro-ops (or more), allowing for a frankly huge out-of-order execution window (think of this as a queue of small instructions the processor could run).

Jargon aside, keeping a big core busy while there’s work to do and powering it off when it’s not in use is the key to good power efficiency. You want to avoid situations where the core is on but suffers a “bubble” with no instructions to process. The aim of having so many instructions within easy reach is that there’s always something the core could be doing. Historically, storing so many waiting instructions has brought diminishing returns, but that doesn’t seem to apply to modern Arm chips. For comparison, the Cortex-X925 has a 750 micro-op re-order buffer for a 1,500-instruction out-of-order window, while Intel’s Lunar Lake stores just 416 entries.
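The link between re-order buffer size and avoiding bubbles can be sketched with Little’s law (work in flight = throughput × latency). Assuming, for simplicity, that every core dispatches eight micro-ops per cycle (Oryon’s decode width; the other cores’ real widths differ), a full buffer of N entries can cover roughly N / 8 cycles of a stalled instruction before the core runs out of room. This is a back-of-the-envelope model, not how any of these cores actually schedules work.

```python
def cycles_hidden(rob_entries: int, width: int) -> float:
    """Rough number of stall cycles a re-order buffer can cover.

    Little's law: micro-ops in flight = issue rate x latency. While the oldest
    instruction waits (say, on a cache miss), the core keeps dispatching
    `width` micro-ops per cycle until the buffer fills, so it stays busy for
    about rob_entries / width cycles.
    """
    return rob_entries / width


WIDTH = 8  # Oryon's decode width per the article, applied to every core here
for name, rob in [("Oryon", 650), ("Cortex-X925", 750), ("Lunar Lake (article figure)", 416)]:
    print(f"{name}: ~{cycles_hidden(rob, WIDTH):.0f} cycles")
```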

Anyway, the TL;DR is that Snapdragon X’s Oryon CPU pairs a pretty big core with tons of cache to keep it running at full tilt when needed. That’s likely to produce solid performance, but all that cache costs a small fortune in silicon area, hence why this is a premium-tier product.

Adreno graphics explained (finally)

Those familiar with Snapdragon will recognize the X series’ GPU: the Adreno X1 is a bigger version of Qualcomm’s mobile GPU. Qualcomm usually doesn’t spill the beans on its graphics architecture, but it has opened up a lot more about the Adreno X1 as it dukes it out with bigger GPU names in the PC space.

At a high level, the Adreno X1 supports many key desktop-class GPU features, including the DirectX 12 (feature level 12_1, not 12_2), DirectX 11, OpenCL 3.0, and Vulkan 1.3 feature sets. This includes ray tracing (via Vulkan) and variable rate shading, which are essential in modern PC titles and are slowly gaining traction in mobile.

Qualcomm levels up its Adreno GPU from mobile, making it a solid competitor for Intel's integrated graphics.

The Adreno X1 is built for both tile-based rendering (binned mode), typically seen in smartphones, and direct rendering, which is more associated with the PC space. The difference is that a tile-based approach splits the scene into smaller sections and keeps each section’s data in on-chip memory to reduce power consumption, whereas direct rendering draws straight to the frame buffer in memory. A binned-direct mode attempts to get the best of both, using a local high-bandwidth 3MB SRAM. The graphics driver determines the mode of operation, and Qualcomm calls this rather unique setup FlexRender. The idea is that the X1 can deliver mobile-style power consumption or PC-class performance, depending on what best suits the workload.
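Tile-based rendering starts with a binning pass that works out which screen tiles each triangle touches, so each tile can later be shaded entirely out of on-chip memory. Below is a generic sketch of that idea using triangle bounding boxes; the 64-pixel tile size and the `bin_triangles` helper are illustrative assumptions, not details of Qualcomm’s FlexRender driver logic.

```python
from collections import defaultdict

Point = tuple[float, float]
Triangle = tuple[Point, Point, Point]


def bin_triangles(triangles: list[Triangle], width: int, height: int,
                  tile: int) -> dict[tuple[int, int], list[int]]:
    """Assign each triangle index to every screen tile its bounding box overlaps."""
    bins: dict[tuple[int, int], list[int]] = defaultdict(list)
    max_tx = (width - 1) // tile
    max_ty = (height - 1) // tile
    for idx, tri in enumerate(triangles):
        xs = [p[0] for p in tri]
        ys = [p[1] for p in tri]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        x0 = max(0, int(min(xs)) // tile)
        x1 = min(max_tx, int(max(xs)) // tile)
        y0 = max(0, int(min(ys)) // tile)
        y1 = min(max_ty, int(max(ys)) // tile)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(idx)
    return dict(bins)


# Two triangles on a 256x256 screen with hypothetical 64-pixel tiles.
tris = [((10, 10), (50, 10), (10, 50)), ((60, 60), (130, 60), (60, 100))]
bins = bin_triangles(tris, 256, 256, 64)
print(bins[(0, 0)])  # prints [0, 1]: both triangles touch the top-left tile
```

Real GPUs can use tighter coverage tests than a bounding box, so this overestimates which tiles a triangle needs.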

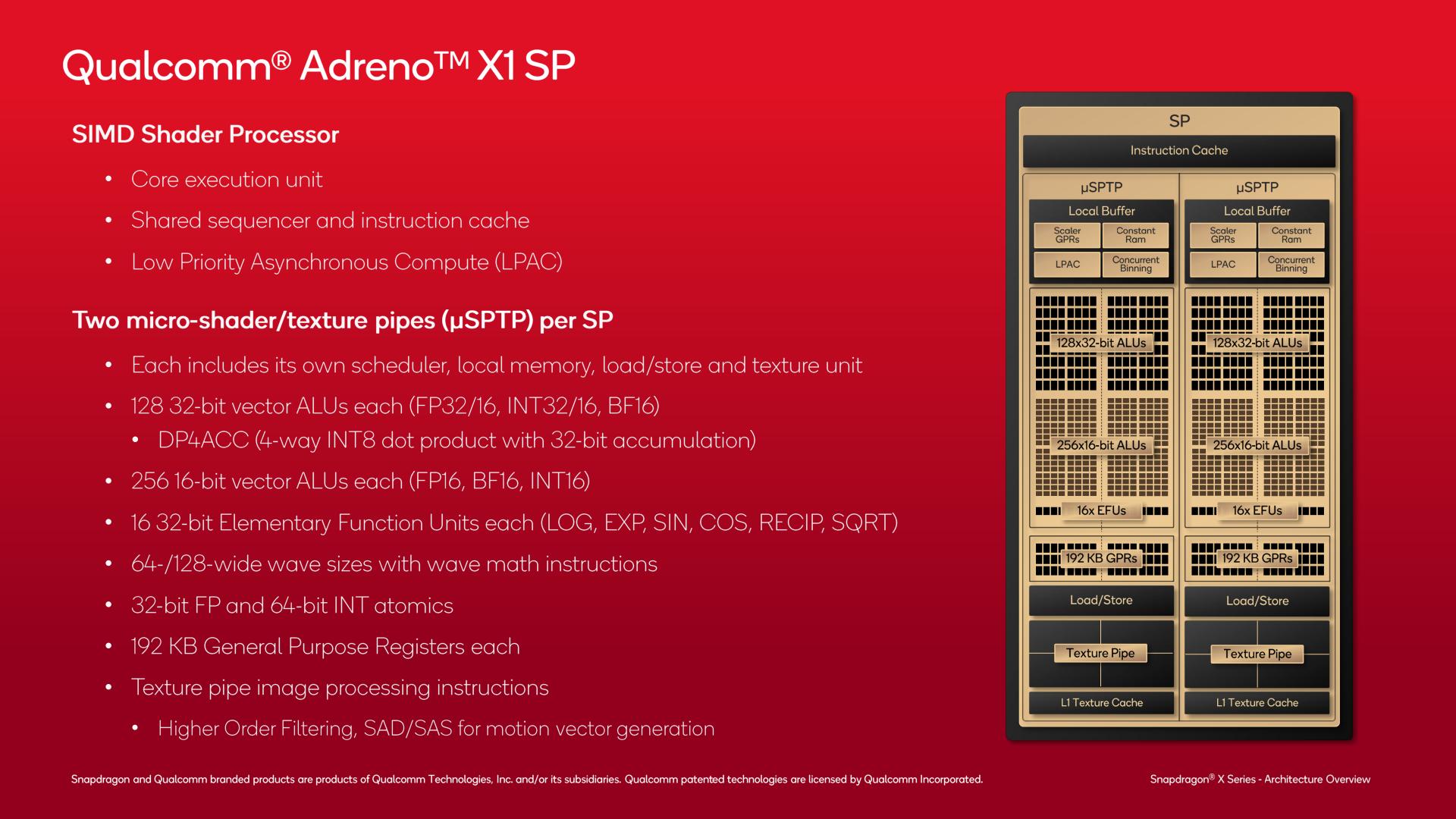

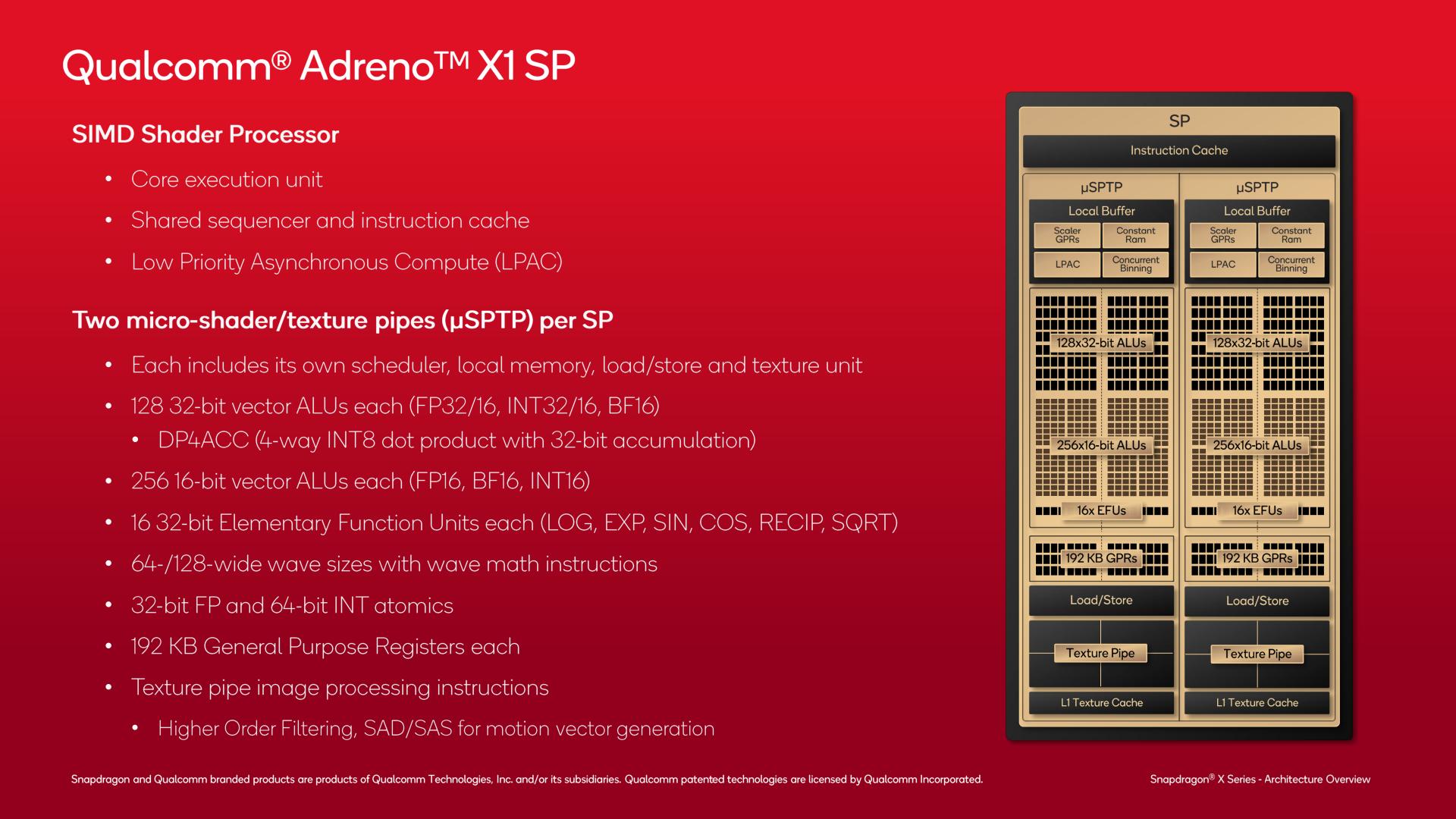

Regardless of the mode of operation, the Adreno X1 features six shader processors with 256 32-bit floating-point units each, for a total of 1,536 FP32 units. Peering deeper, each shader processor contains two micro-shader/texture pipelines, each with its own scheduler and power domain. Each pipeline comprises a 192KB L1 cache, a texture unit running at eight texels per clock, 16 elementary functional units (EFUs) for advanced math functions, 128 32-bit ALUs, and 256 16-bit ALUs.
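The headline TFLOPS numbers fall out of a simple formula: FP32 ALUs × 2 operations per clock (a fused multiply-add counts as two) × clock speed. Plugging in the 1,536 FP32 ALUs reproduces 4.6 TFLOPS at 1.5GHz, and the standard 3.8 TFLOPS figure lines up with a clock of about 1.25GHz. The two-operations-per-FMA convention is the usual industry assumption, not something Qualcomm spells out here, and the 13.5 TFLOPS laptop GPU figure used for comparison is the one quoted further down.

```python
def fp32_tflops(alus: int, clock_ghz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Peak FP32 throughput in TFLOPS; one fused multiply-add counts as two ops."""
    return alus * flops_per_alu_per_clock * clock_ghz / 1000


SHADER_PROCESSORS = 6
FP32_ALUS_PER_SP = 256
alus = SHADER_PROCESSORS * FP32_ALUS_PER_SP  # 1,536 FP32 units

top = fp32_tflops(alus, 1.5)   # X1E-84-100
std = fp32_tflops(alus, 1.25)  # other models, assuming a ~1.25GHz GPU clock
print(f"{top:.2f} vs {std:.2f} TFLOPS")       # prints 4.61 vs 3.84 TFLOPS
print(f"RTX 4050 ratio: {13.5 / top:.1f}x")   # prints RTX 4050 ratio: 2.9x
```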

That latter part is important: the core can run FP32 and FP16 operations concurrently, and the FP32 ALUs can pitch in for even more 16-bit number crunching if required. Speaking of number formats, the 32-bit ALUs support INT32/16, BF16, and INT8 dot products, making them adept at machine learning workloads. The 16-bit ALUs also support BF16, which is handy for ML.

Another interesting point is that Qualcomm uses a large wavefront (the group of operations executed in parallel) size compared to rivals AMD and NVIDIA. 32-bit operations arrive in groups of 64, while 16-bit operations stream in 128 at a time. AMD and NVIDIA use 32-wide wavefronts for 32-bit operations, and very wide designs typically suffer from bubbles where parts of the core run out of things to compute, which is bad for power efficiency. Perhaps Qualcomm mitigates this intelligently by powering down its micro-shader cores.
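To illustrate why wide wavefronts can waste lanes, here’s a simplified SIMT model: threads are packed into waves, a partly filled final wave still occupies every lane, and a wave whose lanes disagree at a branch runs both sides with some lanes masked off. It assumes both branch sides cost the same and ignores any power gating, so it shows the general trade-off rather than how the Adreno X1 actually schedules work.

```python
import math


def lane_utilization(threads: int, wave_width: int) -> float:
    """Fraction of lanes doing useful work; the last wave may be partly empty."""
    waves = math.ceil(threads / wave_width)
    return threads / (waves * wave_width)


def branch_utilization(taken: list[bool], wave_width: int) -> float:
    """Each wave runs every branch side at least one of its lanes takes,
    masking the other lanes (both sides assumed to cost the same)."""
    lane_slots = 0
    for start in range(0, len(taken), wave_width):
        wave = taken[start:start + wave_width]
        paths = len(set(wave))  # 1 if the wave agrees, 2 if it diverges
        lane_slots += paths * wave_width
    return len(taken) / lane_slots


# 96 threads fill three 32-wide waves exactly, but leave a 64-wide wave half empty.
print(lane_utilization(96, 32), lane_utilization(96, 64))  # prints 1.0 0.75

# First 32 threads take one branch, the remaining 96 take the other.
pattern = [True] * 32 + [False] * 96
print(branch_utilization(pattern, 32), round(branch_utilization(pattern, 64), 3))
```

The same branch pattern that never diverges within a 32-wide wave forces a 64-wide wave to execute both paths.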

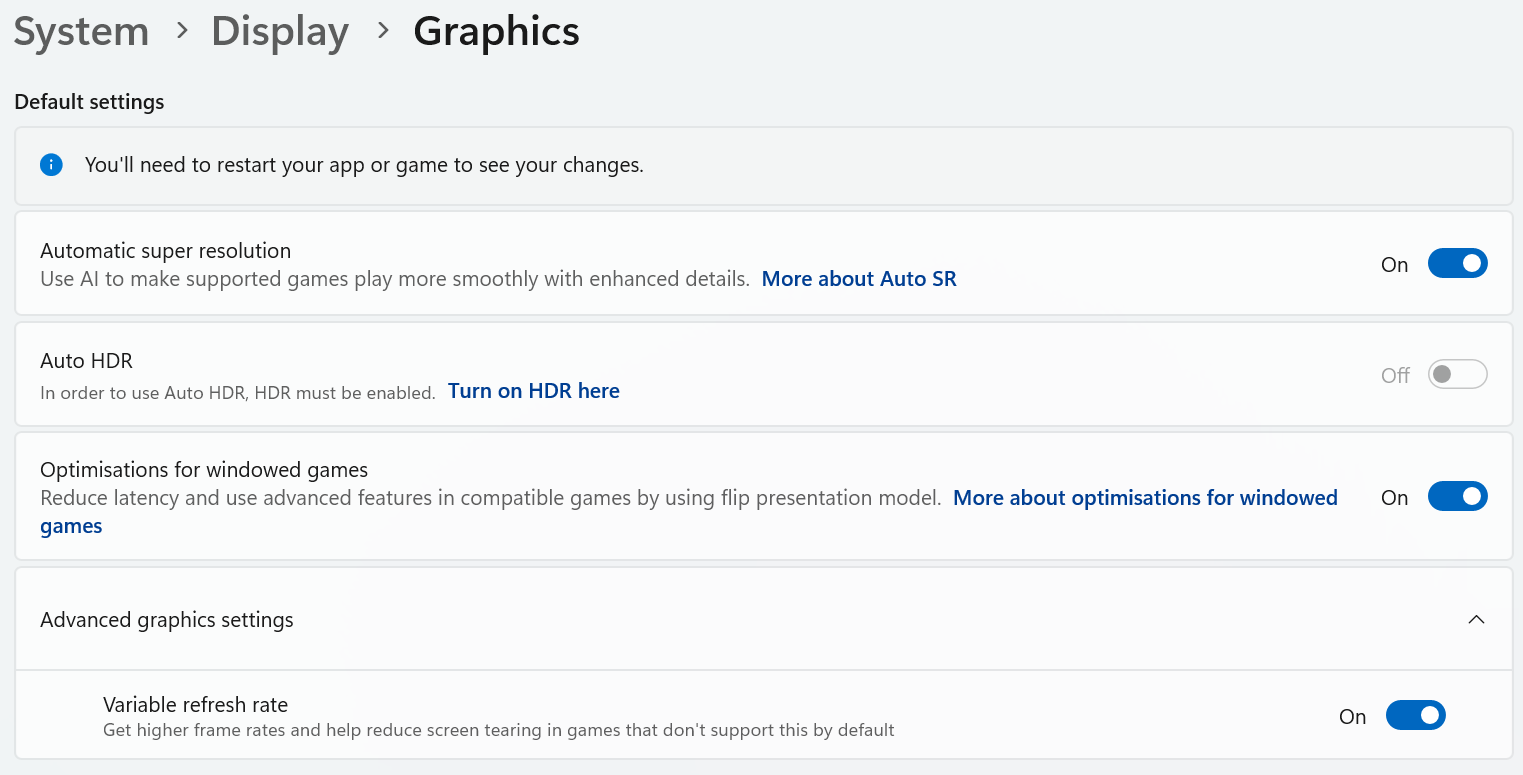

In terms of performance, we ran Crysis on the Snapdragon X Elite but had to settle for 720p resolution and medium graphics settings to achieve semi-decent frame rates. Other titles, including The Witcher 3 and Hitman 3, can leverage Microsoft’s new Automatic Super Resolution technology to improve frame rates. The trade-off is that you’re limited to a very low 1,152 x 768 resolution. This certainly isn’t a gamer’s chipset, but you can achieve decent frame rates with some heavy compromises.

For a quick comparison, an entry-level laptop gaming GPU like the NVIDIA RTX 4050 packs 13.5 TFLOPS of FP32 compute, almost three times that of the Adreno X1. The X1 instead looks more competitive with Intel’s latest integrated graphics parts, which range between 2 and 8 TFLOPS. However, Snapdragon X has the added complication of emulating games compiled for x64. Speaking of…

What you need to know about Windows on Arm emulation

Credit: Edgar Cervantes / Android Authority

While Arm CPUs can deliver high performance and remarkable energy efficiency, this transition brings a new problem: supporting legacy applications.

Windows has historically run on AMD’s and Intel’s x86 and x64 platforms, meaning applications are compiled to low-level x86/x64 CPU instructions that Arm processors don’t support. Microsoft rebuilt Windows to run the core OS on Arm CPUs and has released tools to help developers compile native Arm applications more easily.

Running older apps that aren't Arm-native? You'll take a (small) emulation performance penalty.

This has paid off somewhat over the past seven years of the project; Microsoft says that about 90% of the “app minutes” users spend daily are in apps with a native Arm version (likely thanks to web browsers). However, swathes of modern and legacy Windows applications still aren’t Arm-native.

Windows on Arm has long run an emulator that translates code in real time to support these apps. That ensures the software works, but it comes with a performance hit, particularly for demanding real-time applications like video conversion and gaming, and for those requiring specific instructions like AVX2. Microsoft calls this hit “minor,” but previous Snapdragon chips have suffered. We’ll have to see whether things are much improved with the more powerful X series of chips.

Fortunately, just before Copilot Plus PCs arrived, Microsoft’s updated emulation layer (now called Prism) claimed 10% to 20% additional performance on existing Arm chips (like the older Snapdragon 8cx). We tested the emulator’s performance on the 8cx before and after the update; here are the results:

- Firefox (Speedometer 3): +10%

- Cinebench R23 (single-core): +8%

- Cinebench R23 (multi-core): +4.5%

- HandBrake (H.264 software encoding time): 8% faster

Lofty claims of 20% improved performance are clearly the outliers, but these are still pretty decent gains for applications that rely on emulation.
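For a single summary number, averaging the four gains we measured on the 8cx gives a figure well below Microsoft’s 20% upper claim. This treats the HandBrake result as an 8% improvement and weights every test equally, both of which are our simplifications.

```python
from statistics import mean

# Prism gains measured on the Snapdragon 8cx (percent), from the results list.
gains = {
    "Firefox (Speedometer 3)": 10.0,
    "Cinebench R23 single-core": 8.0,
    "Cinebench R23 multi-core": 4.5,
    "HandBrake H.264 encode": 8.0,
}

avg = mean(gains.values())
print(f"average: {avg:.1f}%, range: {min(gains.values())}-{max(gains.values())}%")
# prints average: 7.6%, range: 4.5-10.0%
```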

While the software emulation problem is more in Microsoft’s hands than Qualcomm’s, the latter has built features into its Oryon CPU to assist with x86’s memory store ordering and floating-point behavior, which should further boost emulation performance. If Qualcomm moves to Armv9 with its next-gen laptop CPU, SVE support should also help with instructions that require wider vectors. We expect emulation performance to be pretty OK, and it will likely improve in the coming years.

Should you buy a Snapdragon X / Copilot Plus PC?

Credit: Robert Triggs / Android Authority

In addition to pure specifications, there are many features to consider when looking at the first wave of Copilot Plus PCs. First and foremost, the addition of an NPU means these laptops benefit from exclusive Windows features, though you’ll have to wait a while before Recall re-debuts.

As we’ve seen, Snapdragon X promises competitive performance with Intel’s latest chips and the powerhouse Apple M3 (though perhaps not quite the newer M4). On top of that, battery life should last well in excess of a busy workday, setting these laptops up as true MacBook competitors. Perhaps the biggest unknown, though, is just how well x64 applications will hold up under emulation.

The first reviews are rolling in as we speak, so it won’t hurt to wait a few more weeks to see whether the Snapdragon X Elite and Copilot Plus PCs are worth your hard-earned cash.
