The term x86-64v3 is once again a discussion point for Linux users, sparking curiosity and questions about its relevance to the platform. But what is it, why does it matter to Linux, and what is all the fuss about? Find out everything you need to know about x86-64v3 below.

A brief overview of microarchitecture history

The story of the modern 32-bit x86 instruction set began about 39 years ago with the introduction of the Intel 80386, commonly referred to as the 386. This was a pivotal moment in the history of modern desktop and server computing. Launched in 1985, the 386 was Intel’s first 32-bit processor and was equipped with a full memory management unit, enabling it to run operating systems that use virtual memory. However, the evolution of x86 technology didn’t stop at the 386. Over time, this older chip, its microarchitecture, and its instructions were phased out. Debian Linux discontinued 386 support in 2005 and completely removed it in 2007. The Linux kernel followed suit in 2012, even though Linux was originally developed on 386 and 486 machines.

The 586, the 686, and so forth followed, marketed under names such as the Pentium and Pentium II. Each new generation introduced additional instructions to the x86 instruction set and new extensions like MMX and SSE. Eventually, common operating systems began phasing out support for 32-bit x86 entirely, ushering in the 64-bit era. For instance, Windows 11 is exclusively 64-bit, Ubuntu ceased supporting 32-bit PCs in 2018, and macOS transitioned to fully 64-bit in 2011.

However, the advent of the 64-bit era didn’t signify the end of progress for x86. The first milestone was the baseline x86-64 (AMD64) instruction set, which includes MMX, SSE, and SSE2. It debuted with the AMD K8 processor family in 2003 and is also compatible with Intel’s first 64-bit processors, which used EM64T (later renamed Intel 64). In short, AMD’s processors were the first 64-bit x86 chips to be released, and they are the ones that defined the x86-64 architecture.

The next evolution of this baseline instruction set is referred to as x86-64v2, which adds SSE3, SSSE3, SSE4.1, SSE4.2, and POPCNT, among others. This corresponds to the mainline CPUs from around 2008 to 2011, such as the AMD Bulldozer and Intel Nehalem. Red Hat Enterprise Linux 9, released in May 2022, discontinued support for older baseline x86-64 processors and requires x86-64v2 processors instead. openSUSE Tumbleweed also began transitioning to require v2 processors in late 2023. SUSE Linux Enterprise Server recommends v2 for optimal performance and may discontinue support for older processors in the future.

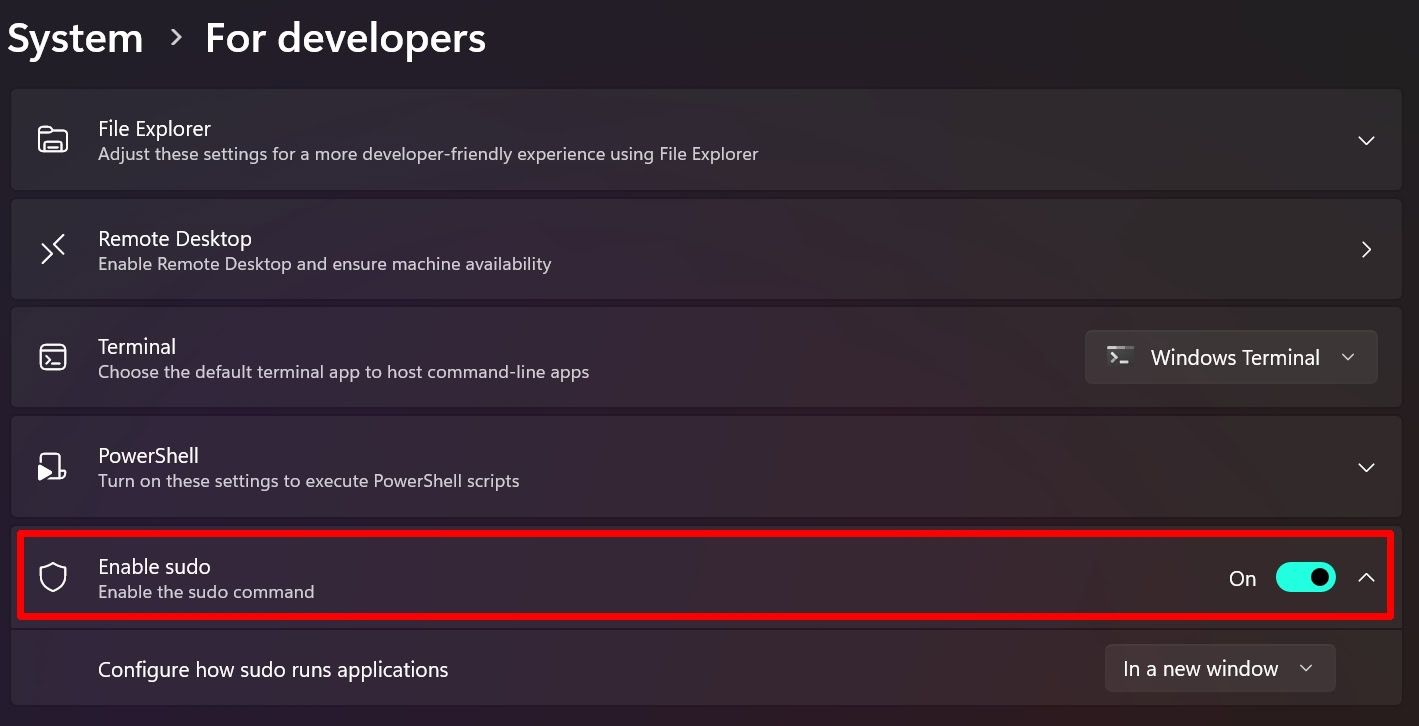

What is x86-64v3?

Credit: Oliver Cragg / Android Authority

x86-64v3 essentially adds vector instructions up to AVX2, along with MOVBE, FMA, and some additional bit manipulation instructions. AVX2, sometimes called the Haswell New Instructions, expands the original AVX instruction set and was introduced with Intel’s Haswell microarchitecture. The MOVBE instruction allows quick conversion between little-endian and big-endian in hardware by swapping bytes on a read from memory or on a write to memory. The FMA (fused multiply-add) instruction combines multiplication and addition into a single operation that keeps the intermediate product at full precision and rounds only once, at the end. This is particularly useful for gaming, matrix operations, and neural network applications.
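
To make the MOVBE and FMA descriptions concrete, here is a minimal Python sketch. It does not execute the hardware instructions; it only emulates their effect. The function names are made up for illustration, and the fused result is emulated with exact rational arithmetic followed by a single rounding.

```python
from fractions import Fraction


def load_big_endian_u32(raw: bytes) -> int:
    """Read 4 bytes as a big-endian integer (the byte swap MOVBE performs on a load)."""
    return int.from_bytes(raw, "big")


def mul_then_add(a: float, b: float, c: float) -> float:
    """Separate multiply and add: the product is rounded before the addition."""
    return a * b + c


def fused_mul_add(a: float, b: float, c: float) -> float:
    """Emulated FMA: compute a*b + c exactly, then round once to a double."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))


if __name__ == "__main__":
    raw = bytes([0x12, 0x34, 0x56, 0x78])
    print(hex(int.from_bytes(raw, "little")))  # native x86 (little-endian) view
    print(hex(load_big_endian_u32(raw)))       # byte-swapped (big-endian) view

    a, b, c = 1.0 + 2.0**-30, 1.0 - 2.0**-30, -1.0
    print(mul_then_add(a, b, c))   # product rounds to 1.0, so the result is 0.0
    print(fused_mul_add(a, b, c))  # the tiny -2**-60 term survives
```

The inputs are chosen so that the exact product, 1 - 2^-60, cannot be stored in a double. The two-step version loses that term entirely, while the fused version keeps it, which is the accuracy benefit FMA offers in long chains of multiply-adds.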

x86-64v3 was first implemented in Intel’s Haswell generation of CPUs in 2013, and AMD implemented it in 2015 with the Excavator microarchitecture. However, Intel’s Atom product line only added v3 support with the Gracemont microarchitecture in 2021. Despite this, Intel continued to release Atom CPUs without AVX or AVX2, including the Parker Ridge line in 2022 and some Elkhart Lake variants in 2023.

This is why v3 has been slow to become the new baseline: not all Intel processors support it, which makes mandating it problematic. The exceptions are Atom processors, not mainstream server processors, but v3 support still cannot be assumed and needs to be checked for each specific processor. It’s also worth mentioning that there is an x86-64v4 level, introduced with Intel’s Skylake server platforms and AMD’s Zen 4, which adds AVX-512.

To test which x86-64 level your CPU supports, use the x86-64-level tool available on GitHub or the ld-linux command on Ubuntu or other distros. Here’s an example command for Ubuntu:

/usr/lib64/ld-linux-x86-64.so.2 --help

This will list the x86-64 levels and indicate which of v2, v3, and v4 your CPU supports.
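
For readers who prefer to script the check, the same idea can be sketched in Python by reading the CPU feature flags that Linux exposes in /proc/cpuinfo. This is an illustrative sketch, not an official tool: the function names are hypothetical, and the per-level flag lists are an assumed mapping from the published level definitions to the kernel’s flag names.

```python
# Flags required by each level, using /proc/cpuinfo names (assumed mapping).
LEVEL_FLAGS = [
    {"lm", "cmov", "cx8", "fpu", "fxsr", "mmx", "syscall", "sse", "sse2"},    # baseline
    {"cx16", "lahf_lm", "popcnt", "pni", "sse4_1", "sse4_2", "ssse3"},        # v2
    {"avx", "avx2", "bmi1", "bmi2", "f16c", "fma", "abm", "movbe", "xsave"},  # v3
    {"avx512f", "avx512bw", "avx512cd", "avx512dq", "avx512vl"},              # v4
]


def x86_64_level(flags: set) -> int:
    """Return the highest level (0 to 4) whose flags, and all lower levels' flags, are present."""
    level = 0
    for required in LEVEL_FLAGS:
        if not required <= flags:
            break
        level += 1
    return level


def read_cpu_flags(path: str = "/proc/cpuinfo") -> set:
    """Return the flags of the first CPU listed, or an empty set if unavailable."""
    try:
        with open(path, encoding="utf-8") as cpuinfo:
            for line in cpuinfo:
                if line.startswith("flags"):
                    return set(line.split(":", 1)[1].split())
    except OSError:
        pass
    return set()


if __name__ == "__main__":
    level = x86_64_level(read_cpu_flags())
    print(f"x86-64 level: {level}" if level else "No x86-64 level detected")
```

Each level builds on the previous one, so the loop stops at the first level with a missing flag. A CPU that has AVX2 but lacks, say, POPCNT would therefore still be reported as baseline only.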

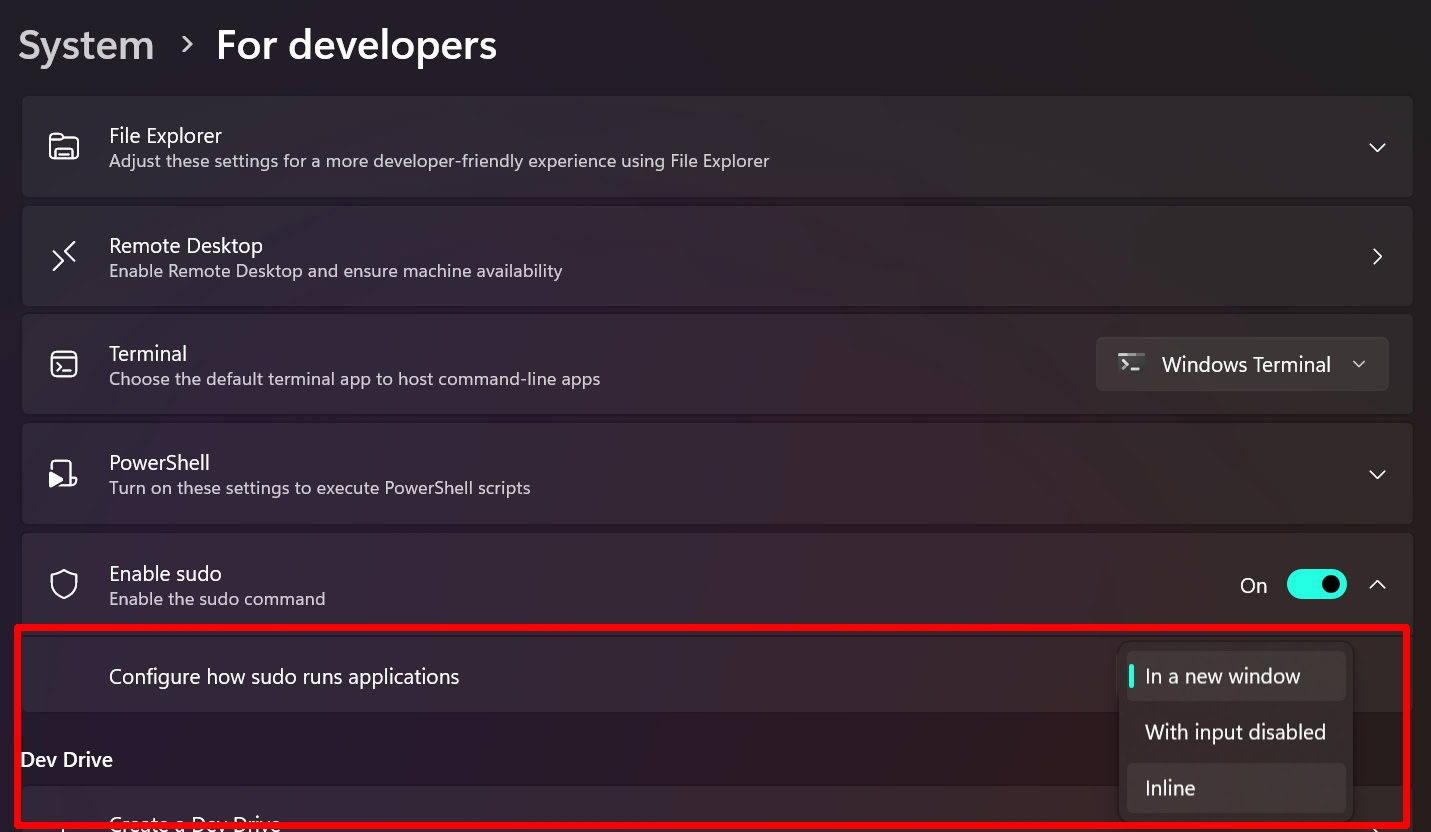

Why is everyone talking about x86-64v3?

Credit: Edgar Cervantes / Android Authority

The buzz is primarily due to Red Hat Enterprise Linux 10 moving to the v3 baseline. Gentoo is now offering v3 packages, and there are experimental builds of Ubuntu Server using v3. This essentially means that when the distribution is compiled, the compiler flags allow instructions such as AVX2 to be used wherever they help, but the resulting binaries then require hardware that supports them. Different Linux distributions will roll this support out at different stages. For instance, NixOS is transitioning to v2 in 2024 and subsequently to v3 by 2027.
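
As a rough illustration of what “the right compiler flags” look like, recent GCC and Clang releases accept the level names directly as `-march` values. The Python sketch below only builds and prints such a command line. The helper names and the `demo.c` file are hypothetical, and a real distribution build passes many more options.

```python
import shlex


def march_flag(level: int) -> str:
    """Map a microarchitecture level (1 to 4) to the matching -march option."""
    if level == 1:
        return "-march=x86-64"  # original baseline has no version suffix
    if level in (2, 3, 4):
        return f"-march=x86-64-v{level}"
    raise ValueError(f"unknown x86-64 level: {level}")


def compile_command(source: str, level: int, compiler: str = "gcc") -> list:
    """Build an example compile command targeting the given level."""
    return [compiler, "-O2", march_flag(level), "-c", source]


if __name__ == "__main__":
    # demo.c is a placeholder file name used only for illustration.
    print(shlex.join(compile_command("demo.c", 3)))
    # prints: gcc -O2 -march=x86-64-v3 -c demo.c
```

A binary built this way may contain AVX2 or FMA instructions anywhere the compiler finds them profitable, which is why it will fail with an illegal-instruction error on a CPU that only reaches v2.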

Comparisons of Linux distros compiled for baseline or v2 with those compiled for v3 show varying performance results. In some cases, there is a performance boost, while in others, there is a performance decline. The compiler flags, and the code compilers generate for different use cases, are still maturing.

However, it’s important to note that just because a distro requires a certain microarchitecture level, it doesn’t mean that Linux itself demands it. For example, 32-bit Linux distributions are still available today. Therefore, you can always find a version of a Linux distribution that suits your specific hardware, especially if you have an older PC. The focus here is on what the leading edge and most popular distros are doing. Of course, if the Linux kernel dropped support for a certain microarchitecture level as it did with the 386, that would be a different matter. However, we’re not at that stage yet.

Lastly, this pertains only to 64-bit x86 desktop processors from Intel or AMD. It does not apply to, for example, Arm processors that you might find in a Raspberry Pi or in your smartphone. Moreover, Windows 11 has already mandated the use of modern CPUs, requiring an eighth-generation Intel Core or Zen 2 to run Windows 11 officially.