“Something has gone seriously wrong,” dual-boot systems warn after Microsoft update

Enlarge (credit: Getty Images)

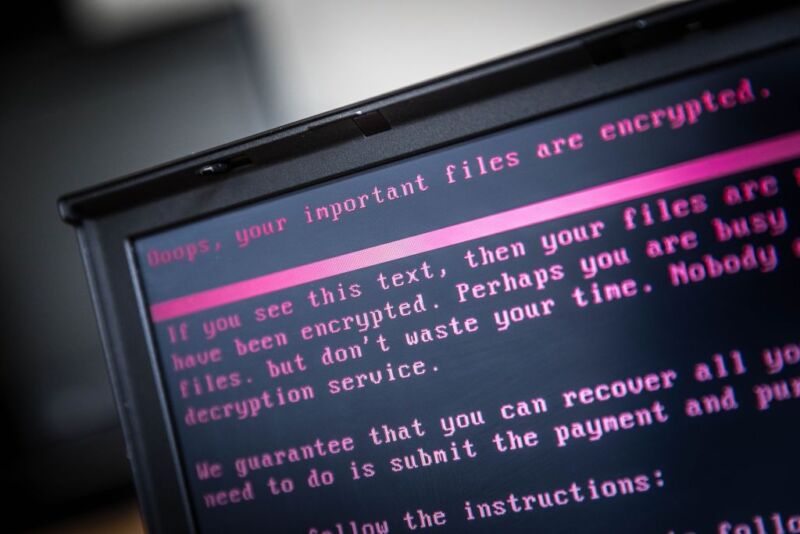

Last Tuesday, loads of Linux users—many running packages released as early as this year—started reporting their devices were failing to boot. Instead, they received a cryptic error message that included the phrase: “Something has gone seriously wrong.”

The cause: an update Microsoft issued as part of its monthly patch release. It was intended to close a 2-year-old vulnerability in GRUB, an open source boot loader used to start up many Linux devices. The vulnerability, with a severity rating of 8.6 out of 10, made it possible for hackers to bypass secure boot, the industry standard for ensuring that devices running Windows or other operating systems don’t load malicious firmware or software during the bootup process. CVE-2022-2601 was discovered in 2022, but for unclear reasons, Microsoft patched it only last Tuesday.

Multiple distros, both new and old, affected

Tuesday’s update left dual-boot devices—meaning those configured to run both Windows and Linux—no longer able to boot into the latter when Secure Boot was enforced. When users tried to load Linux, they received the message: “Verifying shim SBAT data failed: Security Policy Violation. Something has gone seriously wrong: SBAT self-check failed: Security Policy Violation.” Almost immediately support and discussion forums lit up with reports of the failure.