‘Accelerate Everything,’ NVIDIA CEO Says Ahead of COMPUTEX

“Generative AI is reshaping industries and opening new opportunities for innovation and growth,” NVIDIA founder and CEO Jensen Huang said in an address ahead of this week’s COMPUTEX technology conference in Taipei.

“Today, we’re at the cusp of a major shift in computing,” Huang told the audience, clad in his trademark black leather jacket. “The intersection of AI and accelerated computing is set to redefine the future.”

Huang spoke ahead of one of the world’s premier technology conferences to an audience of more than 6,500 industry leaders, press, entrepreneurs, gamers, creators and AI enthusiasts gathered at the glass-domed National Taiwan University Sports Center set in the verdant heart of Taipei.

The theme: NVIDIA accelerated platforms are in full production, whether through AI PCs and consumer devices featuring a host of NVIDIA RTX-powered capabilities or enterprises building and deploying AI factories with NVIDIA’s full-stack computing platform.

“The future of computing is accelerated,” Huang said. “With our innovations in AI and accelerated computing, we’re pushing the boundaries of what’s possible and driving the next wave of technological advancement.”

‘One-Year Rhythm’

More’s coming, with Huang revealing a roadmap for new semiconductors that will arrive on a one-year rhythm. Revealed for the first time, the Rubin platform will succeed the upcoming Blackwell platform, featuring new GPUs, a new Arm-based CPU — Vera — and advanced networking with NVLink 6, CX9 SuperNIC and the X1600 converged InfiniBand/Ethernet switch.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and push everything to technology limits,” Huang explained.

NVIDIA’s creative team used AI tools from members of the NVIDIA Inception startup program, built on NVIDIA NIM and NVIDIA’s accelerated computing, to create the COMPUTEX keynote. Packed with demos, this showcase highlighted these innovative tools and the transformative impact of NVIDIA’s technology.

‘Accelerated Computing Is Sustainable Computing’

NVIDIA is driving down the cost of turning data into intelligence, Huang explained as he began his talk.

“Accelerated computing is sustainable computing,” he emphasized, outlining how the combination of GPUs and CPUs can deliver up to a 100x speedup while only increasing power consumption by a factor of three, achieving 25x more performance per Watt over CPUs alone.
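As a rough check on how these figures relate, performance per watt scales as the speedup divided by the increase in power draw. The sketch below is illustrative only: the function names are hypothetical, and because the quoted numbers are "up to" values that may come from different workloads, they need not reconcile exactly.

```python
def perf_per_watt_gain(speedup: float, power_increase: float) -> float:
    """Relative performance per watt: throughput gain divided by power gain."""
    if power_increase <= 0:
        raise ValueError("power_increase must be positive")
    return speedup / power_increase


def implied_speedup(efficiency_gain: float, power_increase: float) -> float:
    """Speedup needed to reach a given performance-per-watt gain."""
    return efficiency_gain * power_increase


# Figures quoted in the keynote summary (treated here as plain inputs).
peak_speedup = 100.0
power_factor = 3.0
quoted_efficiency = 25.0

print(f"perf/W at peak speedup: {perf_per_watt_gain(peak_speedup, power_factor):.1f}x")
print(f"speedup implied by quoted perf/W: {implied_speedup(quoted_efficiency, power_factor):.0f}x")
```

Either way, the point stands that the gain in throughput far outpaces the gain in power draw.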

“The more you buy, the more you save,” Huang noted, highlighting this approach’s significant cost and energy savings.

Industry Joins NVIDIA to Build AI Factories to Power New Industrial Revolution

Leading computer manufacturers, particularly from Taiwan, the global IT hub, have embraced NVIDIA GPUs and networking solutions. Top companies include ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Supermicro, Wistron and Wiwynn, which are creating cloud, on-premises and edge AI systems.

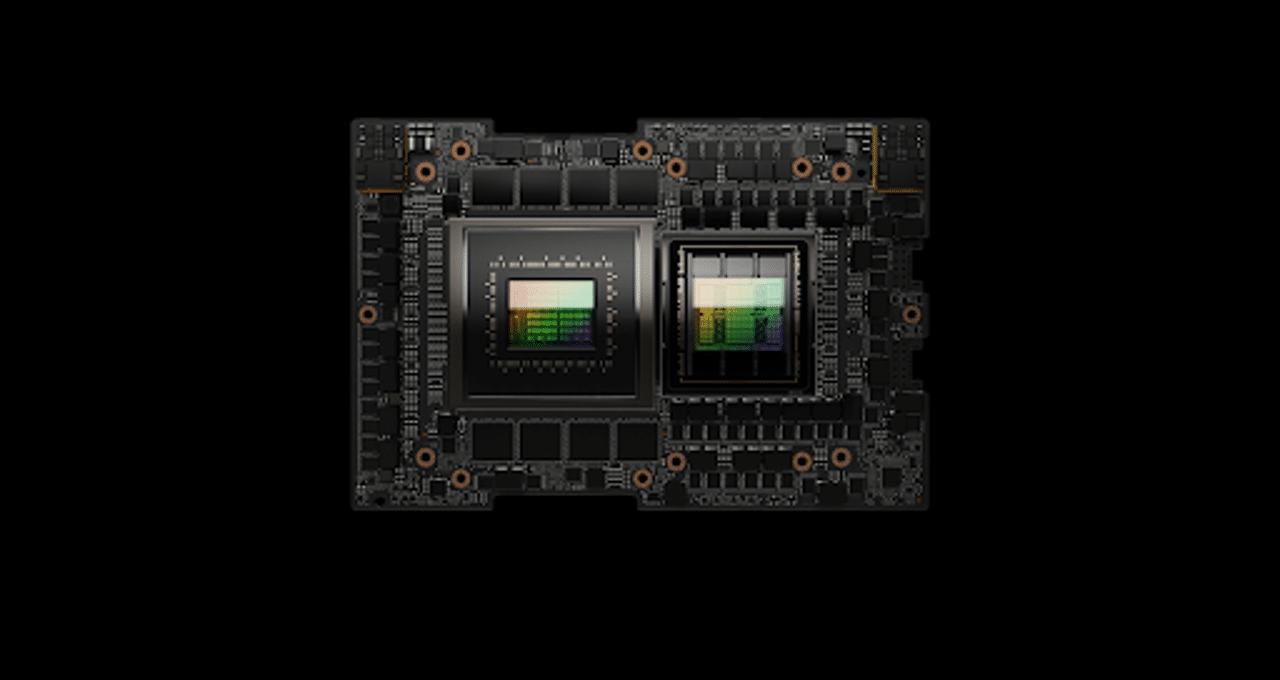

The NVIDIA MGX modular reference design platform now supports Blackwell, including the GB200 NVL2 platform, designed for optimal performance in large language model inference, retrieval-augmented generation and data processing.

AMD and Intel are supporting the MGX architecture with plans to deliver, for the first time, their own CPU host processor module designs. Any server system builder can use these reference designs to save development time while ensuring consistency in design and performance.

Next-Generation Networking with Spectrum-X

In networking, Huang unveiled plans for the annual release of Spectrum-X products to cater to the growing demand for high-performance Ethernet networking for AI.

NVIDIA Spectrum-X, the first Ethernet fabric built for AI, improves network performance by 1.6x over traditional Ethernet fabrics. It accelerates the processing, analysis and execution of AI workloads and, in turn, the development and deployment of AI solutions.

CoreWeave, GMO Internet Group, Lambda, Scaleway, STPX Global and Yotta are among the first AI cloud service providers embracing Spectrum-X to bring extreme networking performance to their AI infrastructures.

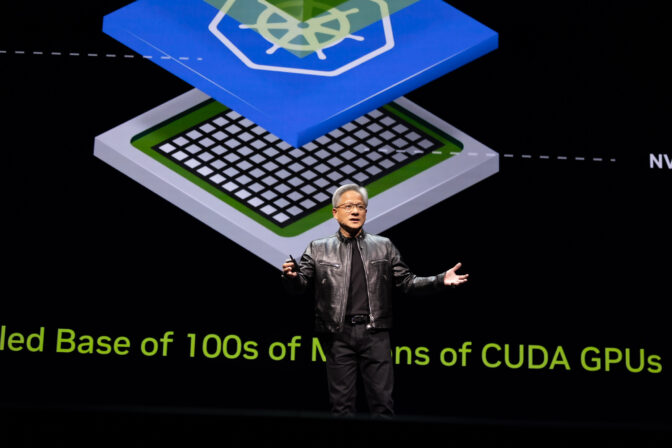

NVIDIA NIM to Transform Millions Into Gen AI Developers

With NVIDIA NIM, the world’s 28 million developers can now easily create generative AI applications. NIM — inference microservices that provide models as optimized containers — can be deployed on clouds, data centers or workstations.

NIM also enables enterprises to maximize their infrastructure investments. For example, running Meta Llama 3-8B in a NIM produces up to 3x more generative AI tokens on accelerated infrastructure than without NIM.

Nearly 200 technology partners — including Cadence, Cloudera, Cohesity, DataStax, NetApp, Scale AI, and Synopsys — are integrating NIM into their platforms to speed generative AI deployments for domain-specific applications, such as copilots, code assistants, digital human avatars and more. Hugging Face is now offering NIM — starting with Meta Llama 3.

“Today we just posted up in Hugging Face the Llama 3 fully optimized, it’s available there for you to try. You can even take it with you,” Huang said. “So you could run it in the cloud, run it in any cloud, download this container, put it into your own data center, and you can host it to make it available for your customers.”
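To make the "download this container and host it" workflow concrete, here is a minimal sketch of how a client might query a self-hosted NIM container. It assumes the container exposes an OpenAI-style chat completions route; the host, port, route and model identifier below are assumptions for illustration, not details from the keynote, and should be checked against the documentation for the specific NIM being deployed.

```python
import json
import urllib.request

# Assumptions (not from the article): the container listens on localhost:8000,
# serves an OpenAI-style /v1/chat/completions route, and registers the model
# under this identifier. Adjust all three for a real deployment.
BASE_URL = "http://localhost:8000"
MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL, max_tokens=64):
    """Build (but do not send) a chat completion request for one user prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the text of the first completion."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("What is accelerated computing?")` requires a running container at the assumed address. Because only `base_url` changes between a workstation, a data center and a cloud deployment, the same client code can follow the container wherever it is hosted.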

NVIDIA Brings AI Assistants to Life With GeForce RTX AI PCs

NVIDIA’s RTX AI PCs, powered by RTX technologies, are set to revolutionize consumer experiences with over 200 RTX AI laptops and more than 500 AI-powered apps and games.

The RTX AI Toolkit and newly available PC-based NIM inference microservices for the NVIDIA ACE digital human platform underscore NVIDIA’s commitment to AI accessibility.

Project G-Assist, an RTX-powered AI assistant technology demo, was also announced, showcasing context-aware assistance for PC games and apps.

Microsoft and NVIDIA are also collaborating to help developers bring new generative AI capabilities to their Windows native and web apps. As part of Windows Copilot Runtime, developers will get easy API access to RTX-accelerated small language models (SLMs) that enable retrieval-augmented generation (RAG) capabilities running on-device.

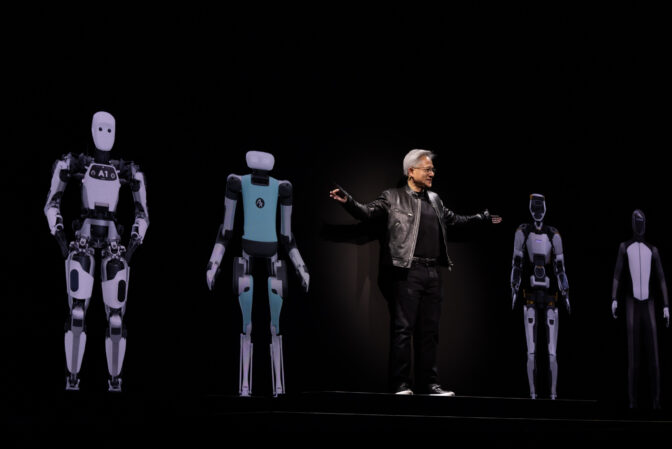

NVIDIA Robotics Adopted by Industry Leaders

NVIDIA is spearheading the $50 trillion industrial digitization shift, with sectors embracing autonomous operations and digital twins — virtual models that enhance efficiency and cut costs. Through its Developer Program, NVIDIA offers access to NIM, fostering AI innovation.

Taiwanese manufacturers are transforming their factories using NVIDIA’s technology, with Huang showcasing Foxconn’s use of NVIDIA Omniverse, Isaac and Metropolis to create digital twins, combining vision AI and robot development tools for enhanced robotic facilities.

“The next wave of AI is physical AI. AI that understands the laws of physics, AI that can work among us,” Huang said, emphasizing the importance of robotics and AI in future developments.

The NVIDIA Isaac platform provides a robust toolkit for developers to build AI robots, including autonomous mobile robots (AMRs), industrial arms and humanoids, powered by AI models and supercomputers like Jetson Orin and Thor.

“Robotics is here. Physical AI is here. This is not science fiction, and it’s being used all over Taiwan. It’s just really, really exciting,” Huang added.

Global electronics giants are integrating NVIDIA’s autonomous robotics into their factories, leveraging simulation in Omniverse to test and validate this new wave of AI for the physical world. This includes over 5 million preprogrammed robots worldwide.

“All the factories will be robotic. The factories will orchestrate robots, and those robots will be building products that are robotic,” Huang explained.

Huang emphasized NVIDIA Isaac’s role in boosting factory and warehouse efficiency, with global leaders like BYD Electronics, Siemens, Teradyne Robotics and Intrinsic adopting its advanced libraries and AI models.

NVIDIA AI Enterprise on the IGX platform, with partners like ADLINK, Advantech and ONYX, delivers edge AI solutions meeting strict regulatory standards, essential for medical technology and other industries.

Huang ended his keynote on the same note he began it on, paying tribute to Taiwan and NVIDIA’s many partners there. “Thank you,” Huang said. “I love you guys.”