Given computer vision’s place as the cornerstone of an increasing number of applications, from ADAS to medical diagnosis and robotics, it is critical that its weak points be mitigated, such as the inability to identify corner cases and the tendency of algorithms to be trained on shallow datasets. While well-known bloopers are often the result of human decisions, there are also fundamental technical issues that require further research.

“Computer vision” and “machine vision” were once used nearly interchangeably, with machine vision most often referring to the hardware embodiment of vision, such as in robots. Computer vision (CV), which started as the academic amalgam of neuroscience and AI research, has now become the dominant idea and preferred term.

“In today’s world, even the robotics people now call it computer vision,” said Jay Pathak, director, software development at Ansys. “The classical computer vision that used to happen outside of deep learning has been completely superseded. In terms of the success of AI, computer vision has a proven track record. Anytime self-driving is involved, any kind of robot that is doing work — its ability to perceive and take action — that’s all driven by deep learning.”

The original intent of CV was to replicate the power and versatility of human vision. Because vision is such a basic sense, the problem seemed like it would be far easier than higher-order cognitive challenges, like playing chess. Indeed, in the canonical anecdote about the field’s initial naïve optimism, Marvin Minsky, co-founder of the MIT AI Lab, having forgotten to include a visual system in a robot, assigned the task to undergraduates. But instead of being quick to solve, the problem consumed a generation of researchers.

Both academic and industry researchers work on problems that can be roughly split into three categories:

- Image capture: The realm of digital cameras and sensors. It may use AI for refinements or it may rely on established software and hardware.

- Image classification/detection: A subset of AI/ML that uses image datasets as training material to build models for visual recognition.

- Image generation: The most recent work, which uses generative models, typically driven by LLM-style text prompts, to create novel images and, with the breakthrough demonstration of OpenAI’s Sora, even photorealistic videos.

Each one alone has spawned dozens of PhD dissertations and industry patents. Image classification/detection, the primary focus of this article, underlies ADAS, as well as many inspection applications.

The change from lab projects to everyday uses came as researchers switched from rules-based systems that simulated visual processing as a series of if/then statements (if red and round, then apple) to neural networks (NNs), in which computers learned to derive salient features by training on image datasets. NNs are basically layered graphs. The earliest model, the McCulloch-Pitts neuron of 1943, and its trainable successor, the Perceptron of the late 1950s, were one-layer simulations of a biological neuron, which is one element in a vast network of interconnecting brain cells. Neurons have inputs (dendrites) and outputs (axons), driven by electrical and chemical signaling. The Perceptron and its descendant neural networks emulated the form but skipped the chemistry, instead focusing on electrical signals with algorithms that weighted input values. Over the decades, researchers refined different forms of neural nets with vastly increased inputs and layers, eventually arriving at the deep learning networks that underlie the current advances in AI.
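
The contrast between a hand-written rule and learned weights can be sketched in a few lines. This is a minimal illustration, not code from any system mentioned here: the two binary features (`red`, `round_`), the function names, and the training settings are all assumptions chosen to mirror the "if red and round, then apple" example.

```python
def rule_based_is_apple(red, round_):
    """Hand-written rule: the programmer decides which features matter."""
    return red == 1 and round_ == 1


def predict(weights, bias, x):
    """Single perceptron: weighted sum of inputs followed by a step function."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Classic perceptron learning rule: nudge weights by the prediction error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical training set: every (red, round) combination, labeled by the rule.
samples = [((r, o), int(rule_based_is_apple(r, o))) for r in (0, 1) for o in (0, 1)]
weights, bias = train_perceptron(samples)

for x, target in samples:
    print(x, "rule:", target, "perceptron:", predict(weights, bias, x))
```

The perceptron is never told the rule. It only sees labeled examples and adjusts its weights until its outputs match, which is the same principle, at a vastly smaller scale, that deep networks apply to image datasets.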

The most recent forms of these network models are convolutional neural networks (CNNs) and transformers. In highly simplified terms, the primary difference between them is that CNNs are very good at distinguishing local features, while transformers perceive a more globalized picture.

Thus, transformers are a natural evolution from CNNs, recurrent neural networks (RNNs), and long short-term memory (LSTM) approaches, according to Gordon Cooper, product marketing manager for Synopsys’ embedded vision processor family.

“You get more accuracy at the expense of more computations and parameters. More data movement, therefore more power,” said Cooper. “But there are cases where accuracy is the most important metric for a computer vision application. Pedestrian detection comes to mind. While some vision designs still will be well served with CNNs, some of our customers have determined they are moving completely to transformers. Ten years ago, some embedded vision applications that used DSPs moved to NNs, but there remains a need for both NNs and DSPs in a vision system. Developers still need a good handle on both technologies and are better served to find a vendor that can provide a combined solution.”
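
The local-versus-global distinction between CNNs and transformers can be made concrete with a toy one-dimensional example. The sketch below is an illustration under simplifying assumptions: the "image" is a short list of numbers, the convolution uses a fixed three-tap kernel, and the self-attention has no learned projections (query, key, and value are all the raw input). It changes only the last input element and checks whether the first output element notices.

```python
import math


def conv1d(signal, kernel):
    """Same-length 1D convolution with zero padding: each output sees only its neighbors."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out


def self_attention(signal):
    """Toy single-head self-attention over scalars: each output is a softmax-weighted mix of all inputs."""
    out = []
    for q in signal:
        scores = [q * k for k in signal]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, signal)) / total)
    return out


signal = [1.0, 0.5, -0.3, 0.8, 0.1, -0.6, 0.4, 0.9]
perturbed = list(signal)
perturbed[-1] += 1.0  # alter only the far end of the input

kernel = [0.25, 0.5, 0.25]
conv_changed = abs(conv1d(signal, kernel)[0] - conv1d(perturbed, kernel)[0]) > 1e-9
attn_changed = abs(self_attention(signal)[0] - self_attention(perturbed)[0]) > 1e-9

print("convolution output 0 changed:", conv_changed)
print("attention output 0 changed:", attn_changed)
```

A single convolution layer is blind to the distant change, while attention responds to it immediately. Real CNNs widen their view by stacking layers, and real transformers pay for their global view with the extra computation Cooper describes.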

As CNN-based neural networks emerged, they began supplanting traditional CV techniques for object detection and recognition.

“While first implemented using hardwired CNN accelerator hardware blocks, many of those CNN techniques then quickly migrated to programmable solutions on software-driven NPUs and GPNPUs,” said Aman Sikka, chief architect at Quadric.

Two parallel trends continue to reshape CV systems. “The first is that transformer networks for object detection and recognition, with greater accuracy and usability than their convolution-based predecessors, are beginning to leave the theoretical labs and enter production service in devices,” Sikka explained. “The second is that CV experts are reinventing the classical ISP functions with NN and transformer-based models that offer superior results. Thus, we’ve seen waves of ISP functionality migrating first from pure hardwired to C++ algorithmic form, and now into advanced ML network formats, with a modern design today in 2024 consisting of numerous machine-learning models working together.”

CV for inspection

While CV is well-known for its essential role in ADAS, another primary application is inspection. CV has helped detect everything from cancerous tumors to manufacturing errors, or in the case of IBM’s productized research, critical flaws in the built environment. For example, a drone equipped with the IBM system could check if a bridge had cracks, a far safer and more precise way to perform visual inspection than having a human climb to dangerous heights.

By combining vision transformers with self-supervised learning, IBM has vastly reduced the annotation requirement. In addition, the company has introduced a new process named “visual prompting,” where the AI can be taught to make the correct distinctions with limited supervision by using “in-context learning,” such as a scribble as a prompt. The ultimate goal is for the system to respond to LLM-like prompts, such as “find all six-inch cracks.”

“Even if it makes mistakes and needs the help of human annotations, you’re doing far less labeling work than you would with traditional CNNs, where you’d have to do hundreds if not thousands of labels,” said Jayant Kalagnanam, director, AI applications at IBM Research.

Beware the humans

Ideally, domain-specific datasets should increase the accuracy of identification. They are often created by expanding on foundation models already trained on general datasets, such as ImageNet. Both types of datasets are subject to human and technical biases. Google’s infamous racial identification gaffes resulted from both technical issues and subsequent human overcorrections.

Meanwhile, IBM was working on infrastructure identification. The company’s experience of getting its model to correctly identify cracks, including the problem of having too many images of one kind of defect, suggests a potential solution to the bias problem: allowing the inclusion of contradictory annotations.

“Everybody who is not a civil engineer can easily say what a crack is,” said Cristiano Malossi, IBM principal research scientist. “Surprisingly, when we discuss which crack has to be repaired with domain experts, the amount of disagreement is very high because they’re taking different considerations into account and, as a result, they come to different conclusions. For a model, this means if there’s ambiguity in the annotations, it may be because the annotations have been done by multiple people, which may actually have the advantage of introducing less bias.”

Fig. 1: IBM’s self-supervised learning model. Source: IBM

Corner cases and other challenges to accuracy

The true image dataset is infinite, which in practical terms leaves most computer vision systems vulnerable to corner cases, potentially with fatal results, noted Alan Yuille, Bloomberg distinguished professor of cognitive science and computer science at Johns Hopkins University.

“So-called ‘corner cases’ are rare events that likely aren’t included in the dataset and may not even happen in everyday life,” said Yuille. “Unfortunately, all datasets have biases, and algorithms aren’t necessarily going to generalize to data that differs from the datasets they’re trained on. And one thing we have found with deep nets is if there is any bias in the dataset, the deep nets are wonderful at finding it and exploiting it.”

Thus, corner cases remain a problem to watch for. “A classic example is the idea of a baby in the road. If you’re training a car, you’re typically not going to have many examples of images with babies in the road, but you definitely want your car to stop if it sees a baby,” said Yuille. “If the companies are working in constrained domains, and they’re very careful about it, that’s not necessarily going to be a problem for them. But if the dataset is in any way biased, the algorithms may exploit the biases and corner cases, and may not be able to detect them, even if they may be of critical importance.”

This includes instances, such as real-world weather conditions, where an image may be partly occluded. “In academic cases, you could have algorithms that when evaluated on standard datasets like ImageNet are getting almost perfect results, but then you can give them an image which is occluded, for example, by a heavy rain,” he said. “In cases like that, the algorithms may fail to work, even if they work very well under normal weather conditions. A term for this is ‘out of domain.’ So you train in one domain and that may be cars in nice weather conditions, you test in out of domain, where there haven’t been many training images, and the algorithms would fail.”

The underlying reasons go back to the fundamental challenge of trying to replicate a human brain’s visual processing in a computer system.

“Objects are three-dimensional entities. Humans have this type of knowledge, and one reason for that is humans learn in a very different way than machine learning AI algorithms,” Yuille said. “Humans learn over a period of several years, where they don’t only see objects. They play with them, they touch them, they taste them, they throw them around.”

By contrast, current algorithms do not have that type of knowledge.

“They are trained as classifiers,” said Yuille. “They are trained to take images and output a class label — object one, object two, etc. They are not trained to estimate the 3D structure of objects. They have some sort of implicit knowledge of some aspects of 3D, but they don’t have it properly. That’s one reason why if you take some of those models, and you’ve contaminated the images in some way, the algorithms start degrading badly, because the vision community doesn’t have datasets of images with 3D ground truth. Only for humans, do we have datasets with 3D ground truth.”

Hardware implementation, challenges

The hardware side is becoming a bottleneck, as academics and industry work to resolve corner cases and create ever-more comprehensive and precise results. “The complexity of the operation behind the transformer is quadratic,” said Malossi. “As a result, they don’t scale linearly with the size of the problem or the size of the model.”
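
The quadratic scaling Malossi describes can be illustrated with simple arithmetic. In the sketch below, the 16-pixel patch size and the image sizes are assumptions chosen for illustration, and the count covers only the pairwise attention scores for a single head in a single layer, not the full cost of any particular model.

```python
def num_tokens(image_side, patch_side):
    """Number of square patches (tokens) in a square image."""
    return (image_side // patch_side) ** 2


def attention_scores(tokens):
    """Self-attention compares every token with every other token."""
    return tokens * tokens


PATCH_SIDE = 16  # assumed patch size, in pixels

for image_side in (224, 448, 896):
    n = num_tokens(image_side, PATCH_SIDE)
    print(f"{image_side}x{image_side} image: {n} tokens, {attention_scores(n)} attention scores")
```

Under these assumptions, doubling the image side quadruples the token count and multiplies the number of attention scores by 16, which is why higher-resolution inputs put so much pressure on memory and compute.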

While the situation might be improved with a more scalable iteration of transformers, for now progress has been stalled as the industry looks for more powerful hardware or any suitable hardware. “We’re at a point right now where progress in AI is actually being limited by the supply of silicon, which is why there’s so much demand, and tremendous growth in hardware companies delivering AI,” said Tony Chan Carusone, CTO of Alphawave Semi. “In the next year or two, you’re going to see more supply of these chips come online, which will fuel rapid progress, because that’s the only thing holding it back. The massive investments being made by hyperscalers is evidence about the backlogs in delivering silicon. People wouldn’t be lining up to write big checks unless there were very specific projects they had ready to run as soon as they get the silicon.”

As more AI silicon is developed, designers should think holistically about CV, since visual fidelity depends not only on sophisticated algorithms, but also on image capture by a chain of co-optimized hardware and software, according to Pulin Desai, group director of product marketing and management for Tensilica vision, radar, lidar, and communication DSPs at Cadence. “When you capture an image, you have to look at the full optical path. You may start with a camera, but you’ll likely also have radar and lidar, as well as different sensors. You have to ask questions like, ‘Do I have a good lens that can focus on the proper distance and capture the light? Can my sensor perform the DAC correctly? Will the light levels be accurate? Do I have enough dynamic range? Will noise cause the levels to shift?’ You have to have the right equipment and do a lot of pre-processing before you send what’s been captured to the AI. Remember, as you design, don’t think of it as a point solution. It’s an end-to-end solution. Every different system requires a different level of full path, starting from the lens to the sensor to the processing to the AI.”

One of the more important automotive CV applications is passenger monitoring, which can help reduce the tragedies of parents forgetting children who are strapped into child seats. But such systems depend on sensors, which can be challenged by noise to the point of being ineffective.

“You have to build a sensor so small it goes into your rearview mirror,” said Jayson Bethurem, vice president of marketing and business development at Flex Logix. “Then the issue becomes the conditions of your car. The car can have the sun shining right in your face, saturating everything, to the complete opposite, where it’s completely dark and the only light in the car is emitting off your dashboard. For that sensor to have that much dynamic range and the level of detail that it needs to have, that’s where noise creeps in, because you can’t build a sensor of that much dynamic range to be perfect. On the edges, or when it’s really dark or oversaturated bright, it’s losing quality. And those are sometimes the most dangerous times.”

Breaking into the black box

Finally, yet another serious concern for computer vision systems is that they cannot be tested exhaustively. Transformers, especially, are notoriously opaque black boxes.

“We need to have algorithms that are more interpretable so that we can understand what’s going on inside them,” Yuille added. “AI will not be satisfactory till we move to a situation where we evaluate algorithms by being able to find the failure mode. In academia, and I hope companies are more careful, we test them on random samples. But if those random samples are biased in some way — and often they are — they may discount situations like the baby in the road, which don’t happen often. To find those issues, you’ve got to let your worst enemy test your algorithm and find the images that break it.”
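
The difference between testing on random samples and letting a “worst enemy” search for failures can be sketched with a toy model. Everything in the example below is hypothetical: `toy_detector_confidence` is a made-up stand-in for a trained detector, and the occlusion and object-size ranges are arbitrary. The random test set is deliberately biased toward easy conditions, while the adversarial search sweeps the whole input space for the lowest-confidence case.

```python
import random


def toy_detector_confidence(occlusion, object_size):
    """Hypothetical stand-in for a trained model: confidence falls with occlusion and small objects."""
    return max(0.0, 1.0 - occlusion - 0.3 * (1.0 - object_size))


def detects(occlusion, object_size, threshold=0.5):
    return toy_detector_confidence(occlusion, object_size) >= threshold


def random_test(n, rng):
    """Biased random sampling: mild occlusion and large objects only."""
    failures = 0
    for _ in range(n):
        occlusion = rng.uniform(0.0, 0.3)
        object_size = rng.uniform(0.7, 1.0)
        if not detects(occlusion, object_size):
            failures += 1
    return failures


def adversarial_test(steps=10):
    """Adversarial search: sweep the full input grid and return the worst case found."""
    grid = [(i / steps, j / steps) for i in range(steps + 1) for j in range(steps + 1)]
    return min(grid, key=lambda case: toy_detector_confidence(*case))


random_failures = random_test(1000, random.Random(0))
worst_case = adversarial_test()

print("failures found by biased random testing:", random_failures)
print("worst case found by adversarial search (occlusion, size):", worst_case)
print("detected in worst case:", detects(*worst_case))
```

In this toy setup the biased random test reports no failures because it never leaves the comfortable part of the input space, while the exhaustive sweep finds a case the detector misses. Real image spaces are far too large to sweep, which is part of why finding failure modes remains an open research problem.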

Related Reading

Dealing With AI/ML Uncertainty

How neural network-based AI systems perform under the hood is currently unknown, but the industry is finding ways to live with a black box.

The post Fundamental Issues In Computer Vision Still Unresolved appeared first on Semiconductor Engineering.

In traditional neural networks, sometimes called multi-layer perceptrons [left], each synapse learns a number called a weight, and each neuron applies a simple function to the sum of its inputs. In the new Kolmogorov-Arnold architecture [right], each synapse learns a function, and the neurons sum the outputs of those functions.The NSF Institute for Artificial Intelligence and Fundamental Interactions

In traditional neural networks, sometimes called multi-layer perceptrons [left], each synapse learns a number called a weight, and each neuron applies a simple function to the sum of its inputs. In the new Kolmogorov-Arnold architecture [right], each synapse learns a function, and the neurons sum the outputs of those functions.The NSF Institute for Artificial Intelligence and Fundamental Interactions

Santiago Tenorio holds one of Lime Microsystems’ private 5G base-station kits.Vodafone

Santiago Tenorio holds one of Lime Microsystems’ private 5G base-station kits.Vodafone

A Cessna [top left] carried a 38 GHz antenna [top right] during a flight, functioning as a 5G base station for receivers on the ground [bottom right]. The plane was able to connect to multiple ground stations at once [illustration, bottom left].NTT Docomo

A Cessna [top left] carried a 38 GHz antenna [top right] during a flight, functioning as a 5G base station for receivers on the ground [bottom right]. The plane was able to connect to multiple ground stations at once [illustration, bottom left].NTT Docomo

Nvidia’s GH200 Grace Hopper superchip, here deployed in Jülich’s JEDI machine, now powers the world’s top three most efficient HPC systems. Jülich Supercomputing Center

Nvidia’s GH200 Grace Hopper superchip, here deployed in Jülich’s JEDI machine, now powers the world’s top three most efficient HPC systems. Jülich Supercomputing Center

![Fig. 1: Asymmetric forward back-biasing scheme for permanent erasing. (a) All the data are reset to 1. (b) All the data are reset to 0. Whether all the data where reset to 1 or 0 is determined by the asymmetric forward back-biasing scheme. Source: KAIST/Creative Commons [2]](https://i0.wp.com/semiengineering.com/wp-content/uploads/Fig01_KAIST_SRAM_back-biasing_CC.png?resize=1264%2C1404&ssl=1)

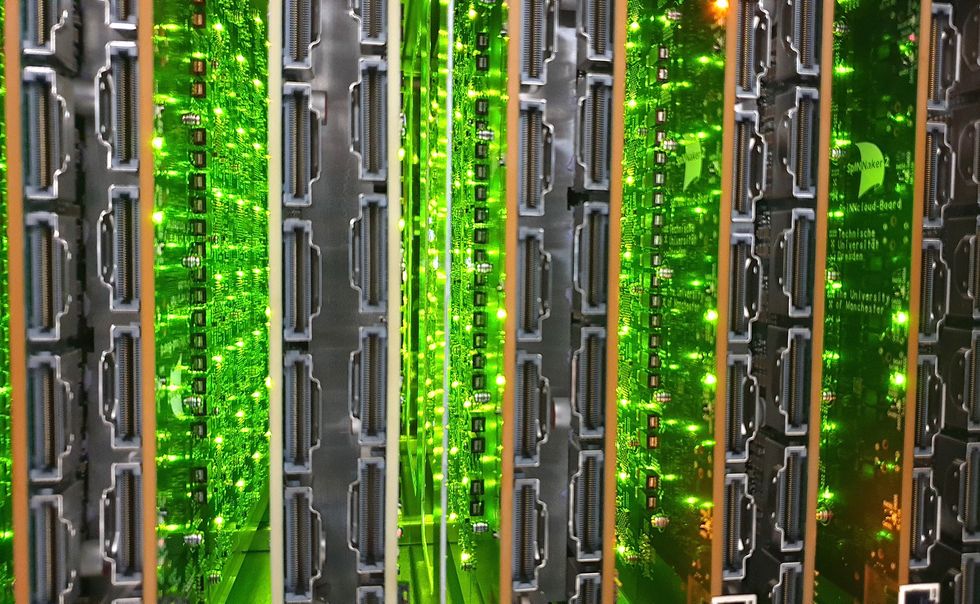

The SpiNNaker2 supercomputer has been designed to model up to 10 billion neurons, about one-tenth the number in the human brain. SpiNNCloud Systems

The SpiNNaker2 supercomputer has been designed to model up to 10 billion neurons, about one-tenth the number in the human brain. SpiNNCloud Systems