- Google has announced that the Android 15 update will improve the platform’s support for hearing aids.

- The latest release will work with hearing aids that support Bluetooth LE Audio.

- The update will also offer better hearing aid management features like a Quick Settings tile, the ability to change presets, and the ability to view the battery level.

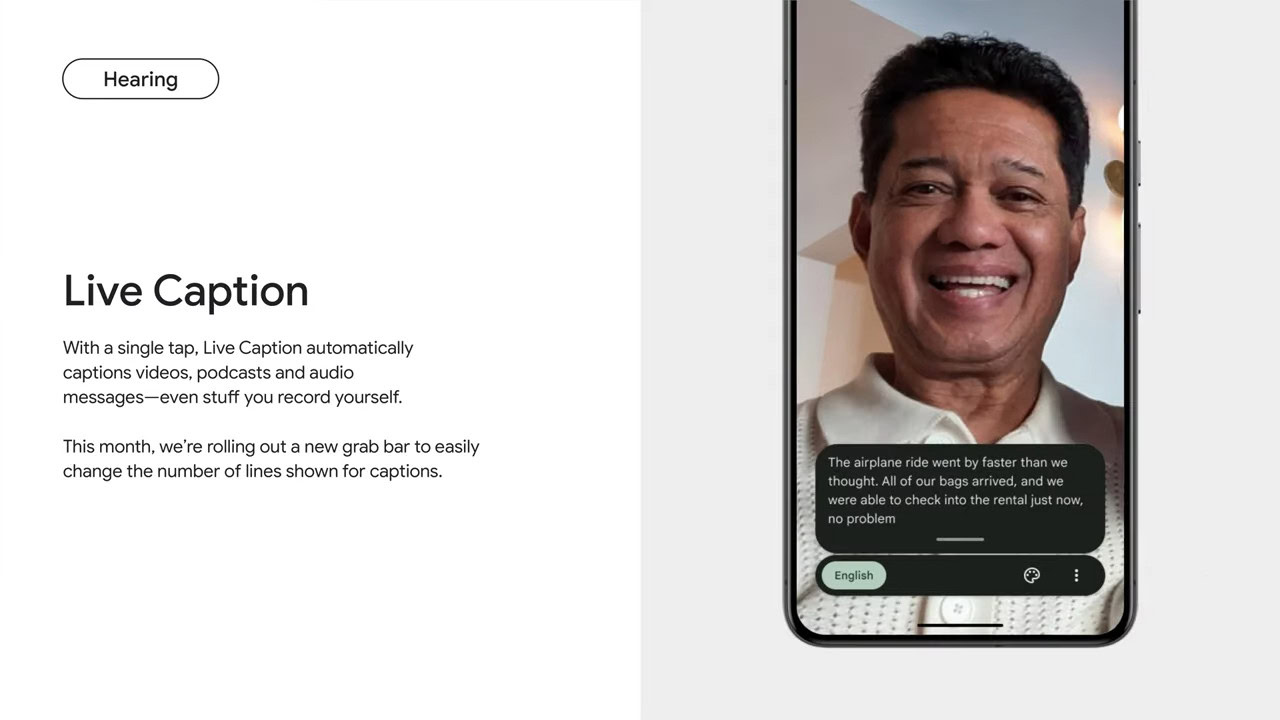

Because Android is used by billions of people worldwide, Google has to design the operating system with accessibility in mind. Hundreds of millions of people have some degree of hearing loss, which is why Android offers assistive features like Live Caption. There’s only so much that Android itself can do to compensate for hearing loss, though, which is where dedicated assistive devices like hearing aids come in. Android has technically supported hearing aids since Android 10 was released in 2019, but with the upcoming update to Android 15 later this year, the operating system will significantly improve support for them.

Hearing aids, if you aren’t aware, are a type of electronic device that’s designed to help people with hearing loss. They’re inserted into your ears, similar to other types of hearables like wireless earbuds, but their main purpose is not to stream music but to amplify environmental sounds so you can hear better. Many sounds originate from your phone, though, which is why many hearing aids nowadays support Bluetooth connectivity. People with hearing loss want to be able to hear who they’re speaking to in voice calls, watch videos on YouTube, or even listen to music, all of which is possible thanks to Bluetooth.

However, hearing aids, unlike wireless earbuds, absolutely need to have all-day battery life. That’s challenging to achieve when using a standard Bluetooth Classic connection to stream audio from your phone to your hearing aids. Streaming audio between two devices connected via Bluetooth Low Energy (Bluetooth LE) is more battery efficient, but for the longest time, there wasn’t a standardized way to stream audio using Bluetooth LE.

That left things up to companies like Apple and Google to create their own proprietary, Bluetooth LE-based hearing aid protocols. Apple has its Made for iPhone (MFi) hearing aid protocol, while Google has its Audio Streaming for Hearing Aids (ASHA) protocol. The former was introduced to iOS way back in 2013, while the latter was introduced more recently in 2019 with the release of Android 10. While there are now several hearing aids on the market compatible with both MFi and ASHA, the fragmentation problem remains. Any advancements in the protocol made by one company will only be enjoyed by users of that company’s ecosystem, and since we’re talking about an accessibility service that people rely on, that’s a problem.

Fortunately, there’s now a standardized way for devices to stream audio over Bluetooth Low Energy, and it’s aptly called Bluetooth LE Audio. LE Audio not only supports the development of standard Bluetooth hearing aids that work across platforms but also implements new features like Auracast. We’ve already shown you how Android 15 is baking in better support for LE Audio through a new audio-sharing feature, but that’s not the only LE Audio-related improvement the operating system update will bring.

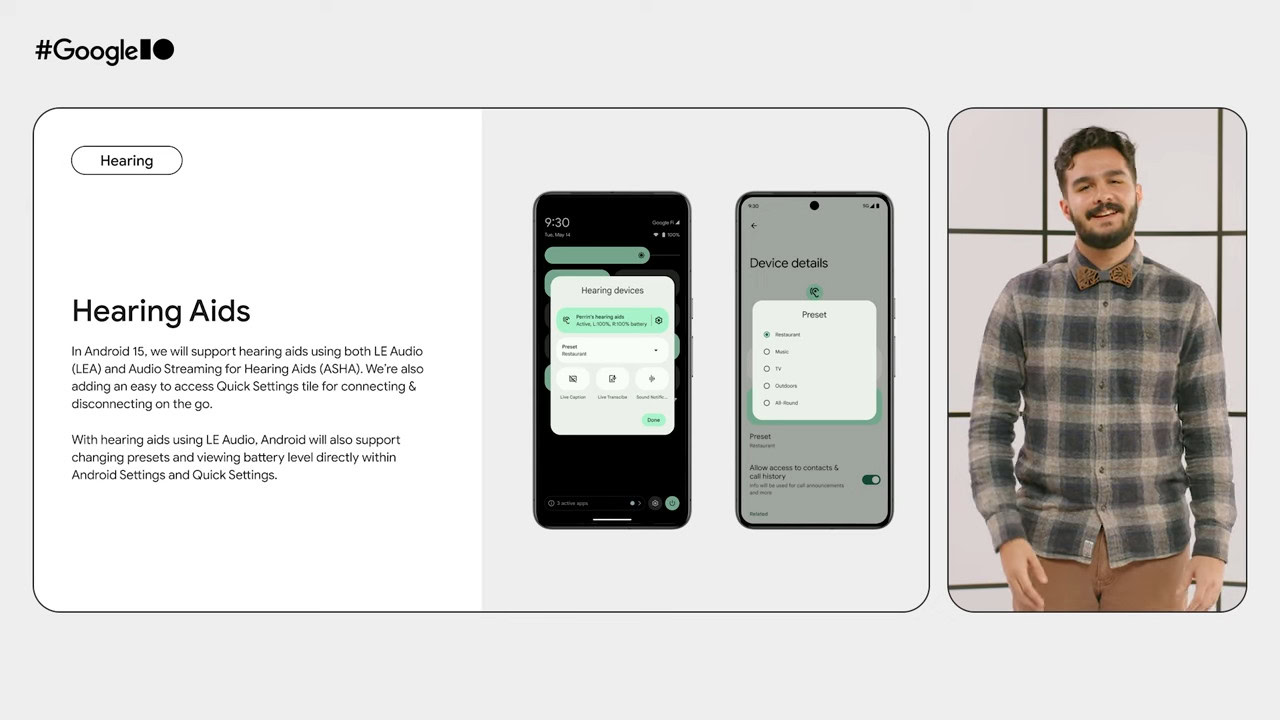

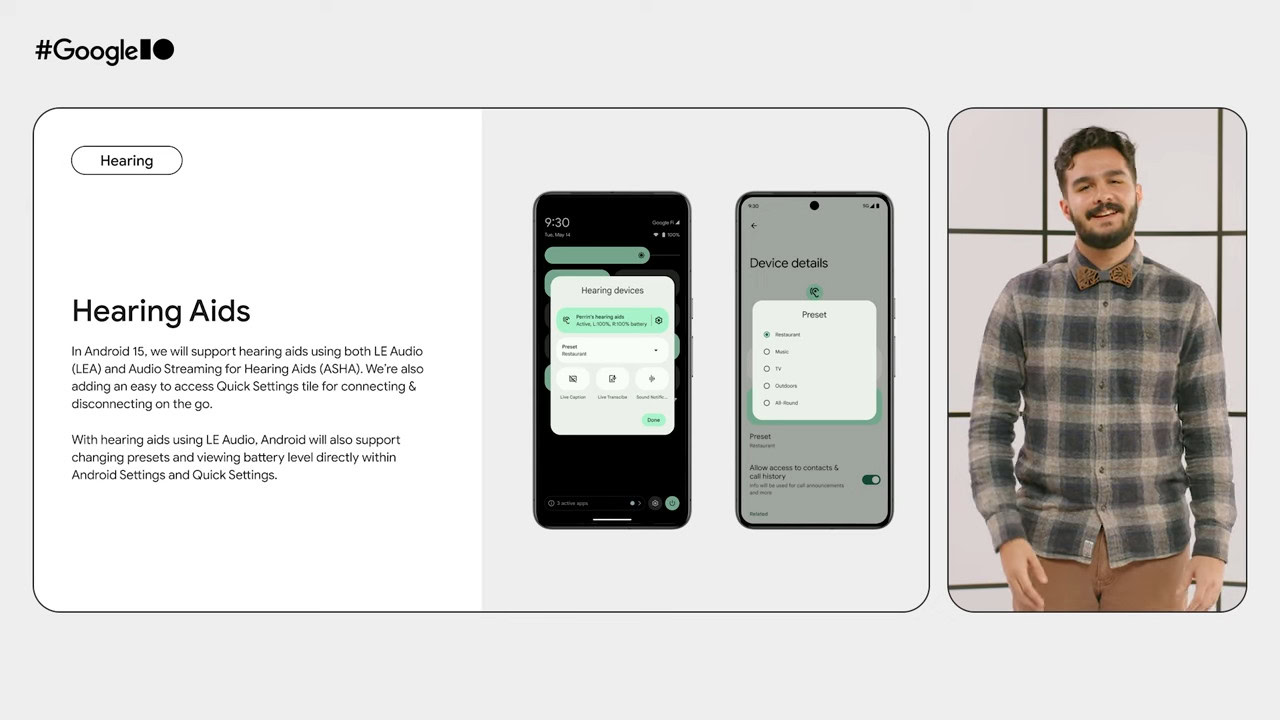

At Google I/O earlier this month, Google announced that Android 15 will support hearing aids that use Bluetooth LE Audio (LEA) as well as those that use the company’s ASHA protocol. Furthermore, the update will introduce a new Quick Settings tile that makes connecting to and disconnecting from hearing aids much easier. The hearing aid Quick Settings tile is already live in Android 15 Beta 2, in fact, but I don’t have any hearing aids myself to test this feature out.

Credit: Mishaal Rahman / Android Authority

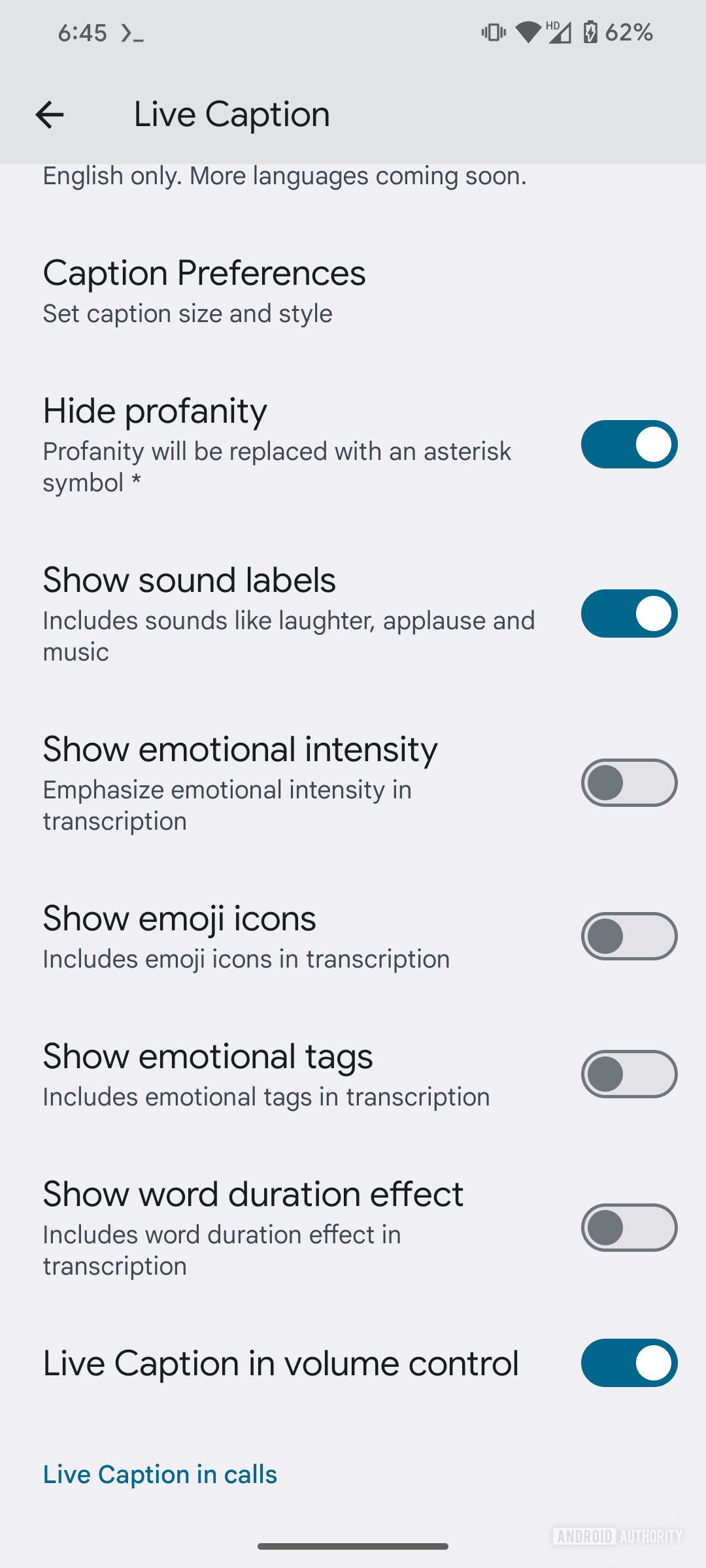

According to the images that Google showed, though, the Quick Settings pop-up will let users toggle various accessibility features like Live Caption, Live Transcribe, and Sound Notifications. It’ll also let users change the hearing aid preset, which “represents a configuration of the hearing aid signal processing parameters tailored to a specific listening situation,” according to the Bluetooth SIG. The exact presets that can be selected depend on what the hearing aid reports to Android. In the example image that Google shared, there were presets for “Restaurant,” “Music,” “TV,” “Outdoors,” and “All-Round.” Finally, Google says that users will also be able to view the battery level of their connected hearing aids directly within Android’s Settings and Quick Settings.

Improved hearing aid support isn’t the only accessibility-related improvement coming to Android. During Global Accessibility Awareness Day earlier this month, Google announced a number of accessibility updates to its Android apps, including Lookout, Look to Speak, Project Relate, and more. These changes, along with the upcoming improvements to Live Caption that we recently detailed, will make Android even more accessible to people who have difficulty hearing or seeing.