Exploring frontiers of mechanical engineering

From cutting-edge robotics, design, and bioengineering to sustainable energy solutions, ocean engineering, nanotechnology, and materials science, MechE students and their advisors are doing highly innovative work. The graduate students highlighted here represent a snapshot of the work in progress this spring across the Department of Mechanical Engineering, and demonstrate that the future of this field is as limitless as the imaginations of its practitioners.

Democratizing design through AI

Lyle Regenwetter

Hometown: Champaign, Illinois

Advisor: Assistant Professor Faez Ahmed

Interests: Food, climbing, skiing, soccer, tennis, cooking

Lyle Regenwetter finds excitement in the prospect of generative AI to "democratize" design and enable inexperienced designers to tackle complex design problems. His research explores new training methods through which generative AI models can be taught to implicitly obey design constraints and synthesize higher-performing designs. Knowing that prospective designers often have an intimate knowledge of the needs of users, but may otherwise lack the technical training to create solutions, Regenwetter also develops human-AI collaborative tools that allow AI models to interact with and support designers in popular CAD software and on real design problems.

Solving a whale of a problem

Loïcka Baille

Hometown: L’Escale, France

Advisor: Daniel Zitterbart

Interests: Being outdoors (scuba diving, spelunking, or climbing), sailing on the Charles River, martial arts classes, and playing volleyball

Loïcka Baille’s research focuses on developing remote sensing technologies to study and protect marine life. Her main project revolves around improving onboard whale detection technology to prevent vessel strikes, with a special focus on protecting North Atlantic right whales. Baille is also involved in an ongoing study of emperor penguins. Her team visits Antarctica annually to tag penguins and gather data to enhance their understanding of penguin population dynamics and draw conclusions regarding the overall health of the ecosystem.

Water, water anywhere

Carlos Díaz-Marín

Hometown: San José, Costa Rica

Advisor: Professor Gang Chen | Former Advisor: Professor Evelyn Wang

Interests: New England hiking, biking, and dancing

Carlos Díaz-Marín designs and synthesizes inexpensive salt-polymer materials that can capture large amounts of humidity from the air. He aims to change the way we generate potable water from the air, even in arid conditions. In addition to water generation, these salt-polymer materials can also be used as thermal batteries, capable of storing and reusing heat. Beyond the scientific applications, Díaz-Marín is excited to continue doing research that can have big social impacts, and that finds and explains new physical phenomena. As a Latinx person, Díaz-Marín is also driven to help increase diversity in STEM.

Scalable fabrication of nano-architected materials

Somayajulu Dhulipala

Hometown: Hyderabad, India

Advisor: Assistant Professor Carlos Portela

Interests: Space exploration, taekwondo, meditation

Somayajulu Dhulipala works on developing lightweight materials with tunable mechanical properties. He is currently working on methods for the scalable fabrication of nano-architected materials and predicting their mechanical properties. The ability to fine-tune the mechanical properties of specific materials brings versatility and adaptability, making these materials suitable for a wide range of applications across multiple industries. While the research applications are quite diverse, Dhulipala is passionate about making space habitable for humanity, a crucial step toward becoming a spacefaring civilization.

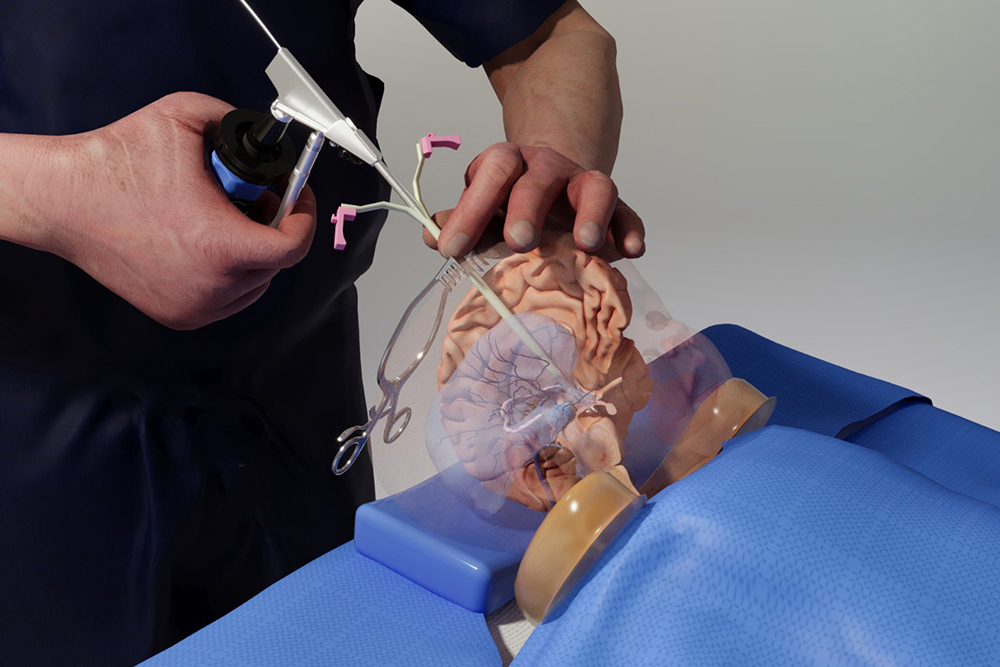

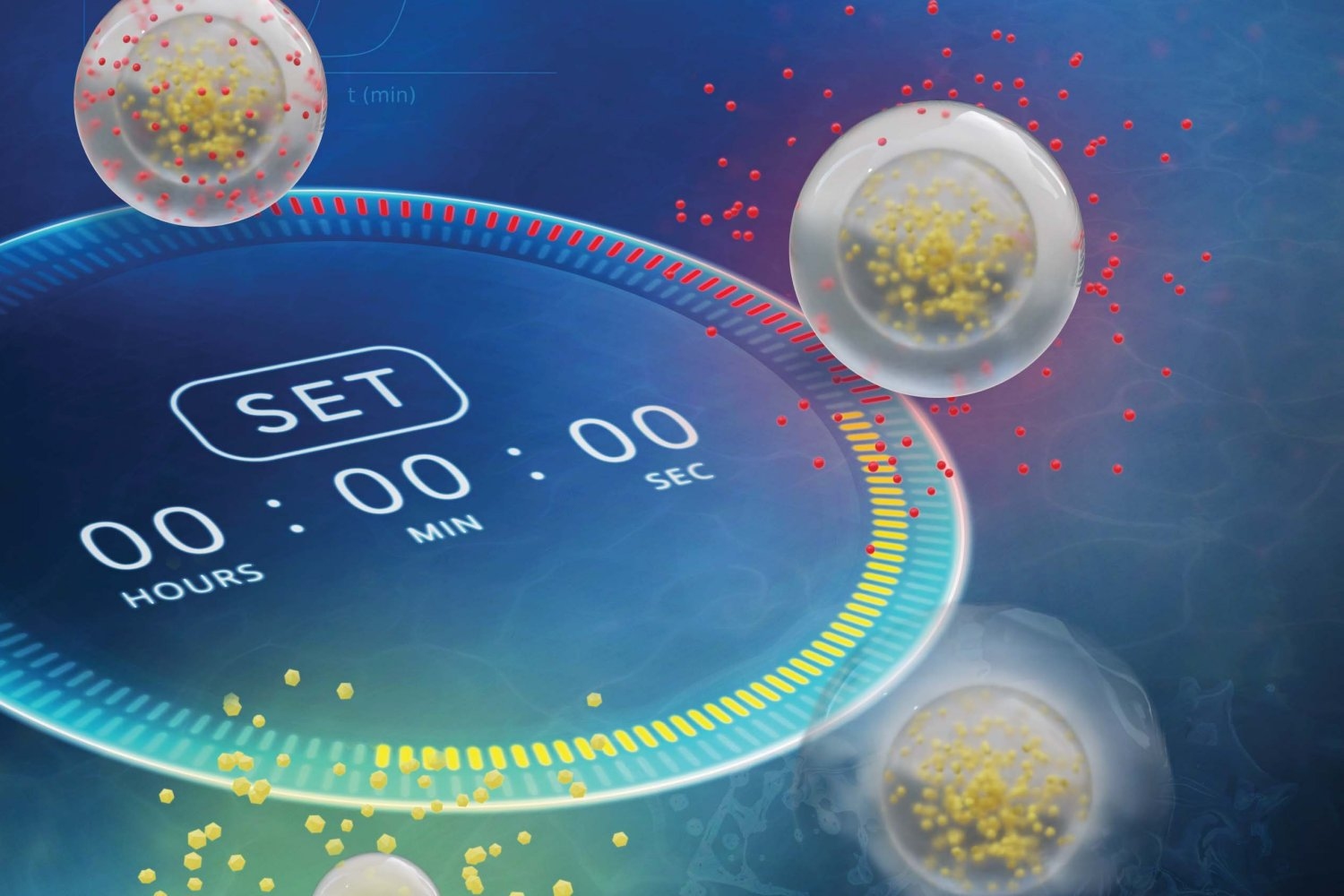

Ingestible health-care devices

Jimmy McRae

Hometown: Woburn, Massachusetts

Advisor: Associate Professor Giovanni Traverso

Interests: Anything basketball-related: playing, watching, going to games, organizing hometown tournaments

Jimmy McRae aims to drastically improve diagnostic and therapeutic capabilities through noninvasive health-care technologies. His research focuses on leveraging materials, mechanics, embedded systems, and microfabrication to develop novel ingestible electronic and mechatronic devices. This ranges from ingestible electroceutical capsules that modulate hunger-regulating hormones to devices capable of continuous ultralong monitoring and remotely triggerable actuations from within the stomach. The principles that guide McRae’s work to develop devices that function in extreme environments can be applied far beyond the gastrointestinal tract, with applications for outer space, the ocean, and more.

Freestyle BMX meets machine learning

Eva Nates

Hometown: Narberth, Pennsylvania

Advisor: Professor Peko Hosoi

Interests: Rowing, running, biking, hiking, baking

Eva Nates is working with the Australian Cycling Team to create a tool to classify Bicycle Motocross Freestyle (BMX FS) tricks. She uses a singular value decomposition method to conduct a principal component analysis of the time-dependent point-tracking data of an athlete and their bike during a run to classify each trick. The 2024 Olympic team hopes to incorporate this tool in their training workflow, and Nates worked alongside the team at their facilities on the Gold Coast of Australia during MIT’s Independent Activities Period in January.
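For readers curious how a principal component analysis can be computed with a singular value decomposition and then used for classification, here is a minimal sketch. It is not Nates's tool: the synthetic "clips," the trick names `trick_a` and `trick_b`, the flattened feature layout, and the nearest-centroid classification step are all assumptions made for illustration, since the article does not describe those details.

```python
import numpy as np

def synthetic_clip(trick, rng, n_frames=30, n_points=4, noise=0.05):
    """Made-up stand-in for point-tracking data: one coordinate per tracked
    point per frame, flattened into a single feature vector."""
    t = np.linspace(0.0, 1.0, n_frames)
    # Hypothetical motion templates; real tricks would look nothing like this.
    if trick == "trick_a":
        template = np.sin(2 * np.pi * t)
    else:
        template = np.cos(4 * np.pi * t)
    amplitudes = np.arange(1, n_points + 1)       # each point moves a bit more
    clip = np.outer(template, amplitudes)         # shape (n_frames, n_points)
    clip += noise * rng.standard_normal(clip.shape)
    return clip.ravel()

def make_dataset(n_per_trick, rng):
    """Build a labeled set of synthetic clips (illustrative data only)."""
    tricks = ["trick_a", "trick_b"]
    X = np.stack([synthetic_clip(k, rng) for k in tricks for _ in range(n_per_trick)])
    y = np.array([k for k in tricks for _ in range(n_per_trick)])
    return X, y

def fit_pca(X, n_components):
    """PCA via SVD: the rows of Vt from the centered data are the principal axes."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def project(X, mean, components):
    """Express each clip by its coordinates along the principal axes."""
    return (X - mean) @ components.T

def nearest_centroid(Z_train, y_train, Z_new):
    """Assumed classifier: label each new clip by the closest class centroid."""
    labels = np.unique(y_train)
    centroids = np.stack([Z_train[y_train == c].mean(axis=0) for c in labels])
    dists = np.linalg.norm(Z_new[:, None, :] - centroids[None, :, :], axis=2)
    return labels[dists.argmin(axis=1)]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_train, y_train = make_dataset(20, rng)
    X_test, y_test = make_dataset(5, rng)
    mean, components = fit_pca(X_train, n_components=2)
    predicted = nearest_centroid(
        project(X_train, mean, components), y_train,
        project(X_test, mean, components),
    )
    print("accuracy on synthetic clips:", (predicted == y_test).mean())
```

The idea the sketch tries to convey is that each run becomes one long vector of tracked positions over time, and the SVD finds the few directions along which runs differ most, so tricks can be compared in a much smaller space.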

Augmenting astronauts with wearable limbs

Erik Ballesteros

Hometown: Spring, Texas

Advisor: Professor Harry Asada

Interests: Cosplay, Star Wars, Lego bricks

Erik Ballesteros’s research seeks to support astronauts conducting planetary extravehicular activities through the use of supernumerary robotic limbs (SuperLimbs). His work focuses on the design and control of these limbs to assist astronauts with post-fall recovery, human-leader/robot-follower quadruped locomotion, and coordinated manipulation between the SuperLimbs and the astronaut for tasks like excavation and sample handling.

This article appeared in the Spring 2024 edition of the Department of Mechanical Engineering's magazine, MechE Connects.

Photo courtesy of Loïcka Baille.