The Saga of AD-X2, the Battery Additive That Roiled the NBS

Senate hearings, a post office ban, the resignation of the director of the National Bureau of Standards, and his reinstatement after more than 400 scientists threatened to resign. Who knew a little box of salt could stir up such drama?

What was AD-X2?

It all started in 1947 when a bulldozer operator with a sixth-grade education, Jess M. Ritchie, teamed up with UC Berkeley chemistry professor Merle Randall to promote AD-X2, an additive to extend the life of lead-acid batteries. The problem of these rechargeable batteries’ dwindling capacity was well known. If AD-X2 worked as advertised, millions of car owners would save money.

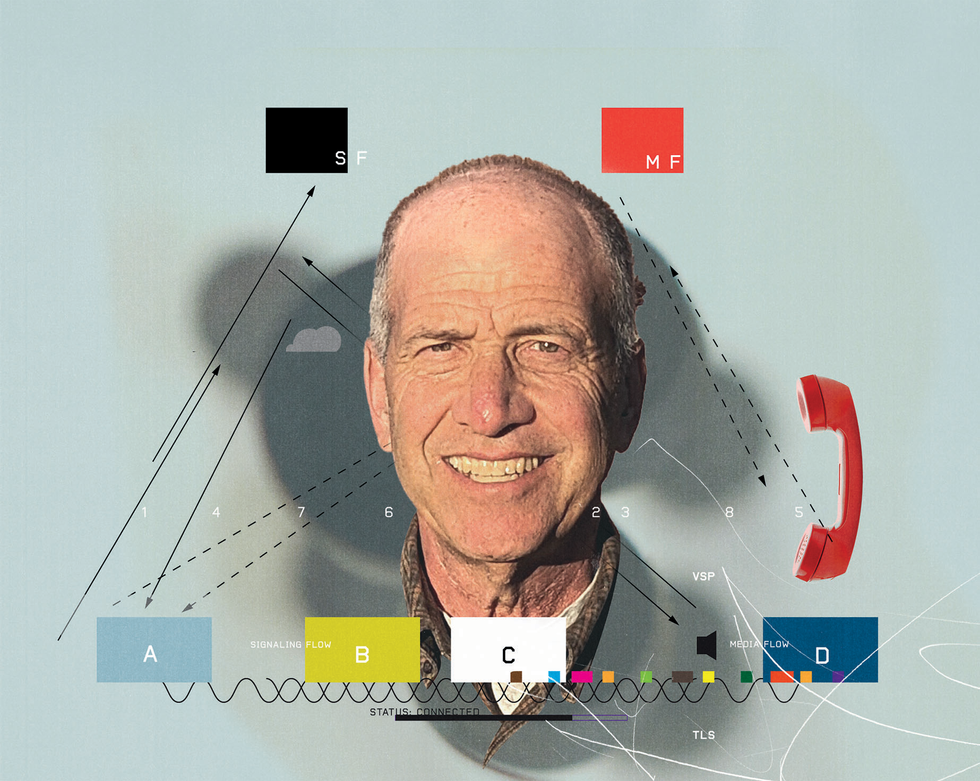

Jess M. Ritchie demonstrates his AD-X2 battery additive before the Senate Select Committee on Small Business. National Institute of Standards and Technology Digital Collections


A basic lead-acid battery has two electrodes, one of lead and the other of lead dioxide, immersed in dilute sulfuric acid. When power is drawn from the battery, the chemical reaction consumes sulfuric acid and deposits lead sulfate on both electrodes. When the battery is charged, the chemical process reverses, returning the electrodes to their original state, or almost. Each time the cell is discharged, some of the lead sulfate “hardens” into crystals that are harder to convert back during charging. Over time, it flakes off, and the battery loses capacity until it’s dead.
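For readers who want the chemistry spelled out, the discharge and charge cycle described above is conventionally summarized by a single overall reaction. This is the standard textbook form, not something quoted from the NBS circulars, and it leaves out the gradual hardening of lead sulfate that additives claimed to fix.

```latex
\[
\mathrm{Pb} + \mathrm{PbO_2} + 2\,\mathrm{H_2SO_4}
\;\rightleftharpoons\;
2\,\mathrm{PbSO_4} + 2\,\mathrm{H_2O}
\]
```

Read left to right, the reaction is discharge: lead, lead dioxide, and sulfuric acid become lead sulfate and water. Read right to left, it is charging.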

By the 1930s, so many companies had come up with battery additives that the U.S. National Bureau of Standards stepped in. Its lab tests revealed that most were variations of salt mixtures, such as sodium and magnesium sulfates. Although the additives might help the battery charge faster, they didn’t extend battery life. In May 1931, NBS (now the National Institute of Standards and Technology, or NIST) summarized its findings in Letter Circular No. 302: “No case has been found in which this fundamental reaction is materially altered by the use of these battery compounds and solutions.”

Of course, innovation never stops. Entrepreneurs kept bringing new battery additives to market, and the NBS kept testing them and finding them ineffective.

Do battery additives work?

After World War II, the National Better Business Bureau decided to update its own publication on battery additives, “Battery Compounds and Solutions.” The publication included a March 1949 letter from NBS director Edward Condon, reiterating the NBS position on additives. Prior to heading NBS, Condon, a physicist, had been associate director of research at Westinghouse Electric in Pittsburgh and a consultant to the National Defense Research Committee. He helped set up MIT’s Radiation Laboratory, and he was also briefly part of the Manhattan Project. Needless to say, Condon was familiar with standard practices for research and testing.

Meanwhile, Ritchie claimed that AD-X2’s secret formula set it apart from the hundreds of other additives on the market. He convinced his senator, William Knowland, a Republican from Oakland, Calif., to write to NBS and request that AD-X2 be tested. NBS declined, not out of any prejudice or ill will, but because it tested products only at the request of other government agencies. The bureau also had a longstanding policy of not naming the brands it tested and not allowing its findings to be used in advertisements.

AD-X2 consisted mainly of Epsom salt and Glauber’s salt. National Institute of Standards and Technology Digital Collections


Ritchie cried foul, claiming that NBS was keeping new businesses from entering the marketplace. Merle Randall launched an aggressive correspondence with Condon and George W. Vinal, chief of NBS’s electrochemistry section, extolling AD-X2 and the testimonials of many users. In its responses, NBS patiently pointed out the difference between anecdotal evidence and rigorous lab testing.

Enter the Federal Trade Commission. The FTC had received a complaint from the National Better Business Bureau, which suspected that Pioneers, Inc.—Randall and Ritchie’s distribution company—was making false advertising claims. On 22 March 1950, the FTC formally asked NBS to test AD-X2.

By then, NBS had already extensively tested the additive. A chemical analysis revealed that it was 46.6 percent magnesium sulfate (Epsom salt) and 49.2 percent sodium sulfate (Glauber’s salt, a horse laxative) with the remainder being water of hydration (water molecules chemically bound within the salt crystals). That is, AD-X2 was similar in composition to every other additive on the market. But, because of its policy of not disclosing which brands it tested, NBS didn’t immediately announce what it had learned.

The David and Goliath of battery additives

NBS then did something unusual: It agreed to ignore its own policy and let the National Better Business Bureau include the results of its AD-X2 tests in a public statement, which was published in August 1950. The NBBB allowed Pioneers to include a dissenting comment: “These tests were not run in accordance with our specification and therefore did not indicate the value to be derived from our product.”

Far from being cowed by the NBBB’s statement, Ritchie was energized, and his story was taken up by the mainstream media. Newsweek’s coverage pitted an up-from-your-bootstraps David against an overreaching governmental Goliath. Trade publications, such as Western Construction News and Batteryman, also published flattering stories about Pioneers. AD-X2 sales soared.

Then, in January 1951, NBS released its updated pamphlet on battery additives, Circular 504. Once again, tests by the NBS found no difference in performance between batteries treated with additives and the untreated control group. The Government Printing Office sold the circular for 15 cents, and it was one of NBS’s most popular publications. AD-X2 sales plummeted.

Ritchie needed a new arena in which to challenge NBS. He turned to politics. He called on all of his distributors to write to their senators. Between July and December 1951, 28 U.S. senators and one U.S. representative wrote to NBS on behalf of Pioneers.

Condon was losing his ability to effectively represent the Bureau. Although the Senate had confirmed Condon’s nomination as director without opposition in 1945, he had been under investigation by the House Committee on Un-American Activities for several years. FBI Director J. Edgar Hoover suspected Condon of being a Soviet spy. (To be fair, Hoover suspected the same of many people.) Condon was repeatedly cleared and had the public backing of many prominent scientists.

But Condon felt the investigations were becoming too much of a distraction, and so he resigned on 10 August 1951. Allen V. Astin became acting director, and then permanent director the following year. And he inherited the AD-X2 mess.

Astin had been with NBS since 1930. Originally working in the electronics division, he developed radio telemetry techniques, and he designed instruments to study dielectric materials and measurements. During World War II, he shifted to military R&D, most notably development of the proximity fuse, which detonates an explosive device as it approaches a target. I don’t think that work prepared him for the political bombs that Ritchie and his supporters kept lobbing at him.

Mr. Ritchie almost goes to Washington

On 6 September 1951, another government agency entered the fray. C.C. Garner, chief inspector of the U.S. Post Office Department, wrote to Astin requesting yet another test of AD-X2. NBS dutifully submitted a report that the additive had “no beneficial effects on the performance of lead acid batteries.” The post office then charged Pioneers with mail fraud, and Ritchie was ordered to appear at a hearing in Washington, D.C., on 6 April 1952. More tests were ordered, and the hearing was delayed for months.

Back in March 1950, Ritchie had lost his biggest champion when Merle Randall died. In preparation for the hearing, Ritchie hired another scientist: Keith J. Laidler, an assistant professor of chemistry at the Catholic University of America. Laidler wrote a critique of Circular 504, questioning NBS’s objectivity and testing protocols.

Ritchie also got Harold Weber, a professor of chemical engineering at MIT, to agree to test AD-X2 and to work as an unpaid consultant to the Senate Select Committee on Small Business.

Life was about to get more complicated for Astin and NBS.

Why did the NBS Director resign?

Trying to put an end to the Pioneers affair, Astin agreed in the spring of 1952 that NBS would conduct a public test of AD-X2 according to terms set by Ritchie. Once again, the bureau concluded that the battery additive had no beneficial effect.

However, NBS deviated slightly from the agreed-upon parameters for the test. Although the bureau had a good scientific reason for the minor change, Ritchie had a predictably overblown reaction—NBS cheated!

Then, on 18 December 1952, the Senate Select Committee on Small Business—for which Ritchie’s ally Harold Weber was consulting—issued a press release summarizing the results from the MIT tests: AD-X2 worked! The results “demonstrate beyond a reasonable doubt that this material is in fact valuable, and give complete support to the claims of the manufacturer.” NBS was “simply psychologically incapable of giving Battery AD-X2 a fair trial.”

The National Bureau of Standards’ regular tests of battery additives found that the products did not work as claimed. National Institute of Standards and Technology Digital Collections


But the press release distorted the MIT results. The MIT tests had focused on diluted solutions and slow charging rates, not the normal use conditions for automobiles, and even then AD-X2’s impact was marginal. Once NBS scientists got their hands on the report, they identified the flaws in the testing.

How did the AD-X2 controversy end?

The post office finally got around to holding its mail fraud hearing in the fall of 1952. Ritchie failed to attend in person and didn’t realize his reports would not be read into the record without him, which meant the hearing was decidedly one-sided in favor of NBS. On 27 February 1953, the Post Office Department issued a mail fraud alert. All of Pioneers’ mail would be stopped and returned to sender stamped “fraudulent.” If this charge stuck, Ritchie’s business would crumble.

But something else happened during the fall of 1952: Dwight D. Eisenhower, running on a pro-business platform, was elected U.S. president in a landslide.

Ritchie found a sympathetic ear in Eisenhower’s newly appointed Secretary of Commerce Sinclair Weeks, who acted decisively. The mail fraud alert had been issued on a Friday. Over the weekend, Weeks had a letter hand-delivered to Postmaster General Arthur Summerfield, another Eisenhower appointee. By Monday, the fraud alert had been suspended.

What’s more, Weeks found that Astin was “not sufficiently objective” and lacked a “business point of view,” and so he asked for Astin’s resignation on 24 March 1953. Astin complied. Perhaps Weeks thought this would be a mundane dismissal, just one of the thousands of political appointments that change hands with every new administration. That was not the case.

More than 400 NBS scientists, over 10 percent of the bureau’s technical staff, threatened to resign in protest. The American Association for the Advancement of Science also backed Astin and NBS. In an editorial published in Science, the AAAS called the battery additive controversy itself “minor.” “The important issue is the fact that the independence of the scientist in his findings has been challenged, that a gross injustice has been done, and that scientific work in the government has been placed in jeopardy,” the editorial stated.

National Bureau of Standards director Edward Condon [left] resigned in 1951 because investigations into his political beliefs were impeding his ability to represent the bureau. Incoming director Allen V. Astin [right] inherited the AD-X2 controversy, which eventually led to Astin’s dismissal and then his reinstatement after a large-scale protest by NBS researchers and others. National Institute of Standards and Technology Digital Collections


Clearly, AD-X2’s effectiveness was no longer the central issue. The controversy was a stand-in for a larger debate concerning the role of government in supporting small business, the use of science in making policy decisions, and the independence of researchers. Over the previous few years, highly respected scientists, including Edward Condon and J. Robert Oppenheimer, had been repeatedly investigated for their political beliefs. The request for Astin’s resignation was yet another government incursion into scientific freedom.

Weeks, realizing his mistake, temporarily reinstated Astin on 17 April 1953, the day the resignation was supposed to take effect. He also asked the National Academy of Sciences to test AD-X2 in both the lab and the field. By the time the academy’s report came out in October 1953, Weeks had permanently reinstated Astin. The report, unsurprisingly, concluded that NBS was correct: AD-X2 had no merit. Science had won.

NIST makes a movie

On 9 December 2023, NIST released the 20-minute docudrama The AD-X2 Controversy. The film won the Best True Story Narrative and Best of Festival awards at the 2023 NewsFest Film Festival. I recommend taking the time to watch it.


Many of the actors are NIST staff and scientists, and they really get into their roles. Much of the dialogue comes verbatim from primary sources, including congressional hearings and contemporary newspaper accounts.

Despite being an in-house production, NIST’s film has a Hollywood connection. The film features brief interviews with actors John Astin and Sean Astin (the latter of The Lord of the Rings and Stranger Things fame), NBS director Astin’s son and grandson.

The AD-X2 controversy is just as relevant today as it was 70 years ago. Scientific research, business interests, and politics remain deeply entangled. If the public is to have faith in science, it must have faith in the integrity of scientists and the scientific method. I have no objection to science being challenged—that’s how science moves forward—but we have to make sure that neither profit nor politics is tipping the scales.

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the August 2024 print issue as “The AD-X2 Affair.”

References

I first heard about AD-X2 after my IEEE Spectrum editor sent me a notice about NIST’s short docudrama The AD-X2 Controversy, which you can, and should, stream online. NIST held a colloquium on 31 July 2018 with John Astin and his brother Alexander (Sandy), where they recalled what it was like to be college students when their father’s reputation was on the line. The agency has also compiled a wonderful list of resources, including many of the primary source government documents.

The AD-X2 controversy played out in the popular media, and I read dozens of articles following the almost daily twists and turns in the case in the New York Times, Washington Post, and Science.

I found Elio Passaglia’s A Unique Institution: The National Bureau of Standards 1950-1969 to be particularly helpful. The AD-X2 controversy is covered in detail in Chapter 2: Testing Can Be Troublesome.

A number of graduate theses have been written about AD-X2. One I consulted was Samuel Lawrence’s 1958 thesis “The Battery AD-X2 Controversy: A Study of Federal Regulation of Deceptive Business Practices.” Lawrence also published the 1962 book The Battery Additive Controversy.