MIT spinout Arnasi begins applying LiquiGlide no-stick technology to help patients

The no-stick technology invented by Professor Kripa Varanasi and David Smith SM ’11, initially commercialized as LiquiGlide in 2012, went viral for its uncanny ability to make materials that stick to their containers — think ketchup, cosmetics, and toothpaste — slide out with ease.

Now, the company that brought you Colgate no-stick toothpaste is moving into the medical space, and the applications could improve millions of lives. The company, which recently rebranded as the Arnasi Group, has developed an ambitious plan to launch three new biomedical products over the next four years.

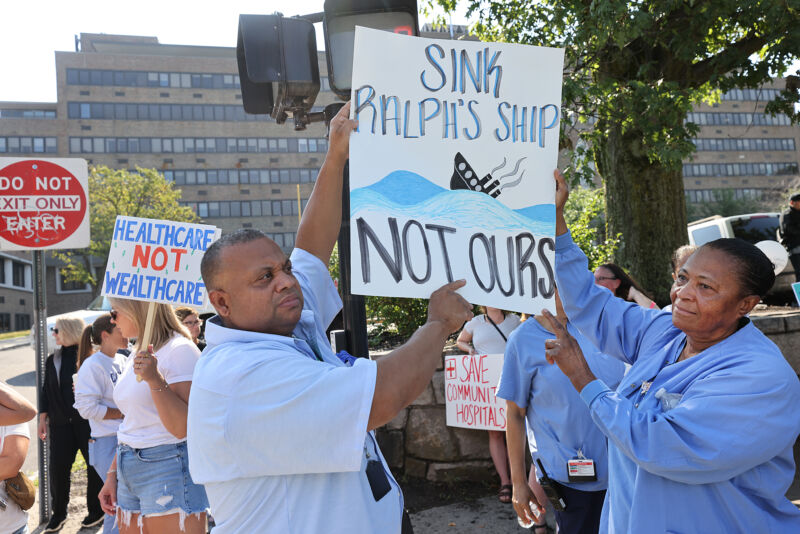

The first of those products, called Revel, is a deodorizing lubricant designed for ostomy pouches, which are used by individuals to collect bodily waste after digestive system surgeries. Up to 1 million people rely on such pouches in the United States. Ostomy pouches must be emptied multiple times per day, and issues resulting from sticking or clogging can cause embarrassing, time-consuming situations for the people relying on them.

Arnasi’s deodorizing lubricant can prevent clogging and simplify the ostomy pouch cleaning process. Unlike other options available, one application of its lubricant works for the entire day, the Arnasi team says, and they designed a single unit dose that fits in your pocket for added convenience.

An ostomy pouch “significantly impacts a person’s lifestyle,” Varanasi says. “They need to keep it clean, and they need to use it at all times. We are solving a very important problem while helping people by giving their dignity and lifestyles back.”

Revel, Arnasi’s FDA-registered product, officially launched this month, and it has already received promising feedback from nurses and patients.

Margaret is a nurse who relies on an ostomy pouch herself and cares for patients who need them after receiving colostomies and ileostomies. She received samples of Revel at a recent conference and says it could dramatically improve both her and her patients’ lives.

“These pouches need to be emptied frequently, and sometimes that’s very difficult to do,” she says. “This particular product makes everything slide out without any problems at all, and it’s a wonderful improvement. It also lasts long enough to empty the pouch three to four times, which is great because you don’t have to carry a bunch of this stuff around.”

Margaret’s experience echoes feedback Arnasi’s team has heard from many others.

“When we showed it to the nurses, they were blown away with the product,” says Arnasi CEO Dan Salain. “They asked us to get this product out to the market as fast as we could, and so that’s what we’re doing.”

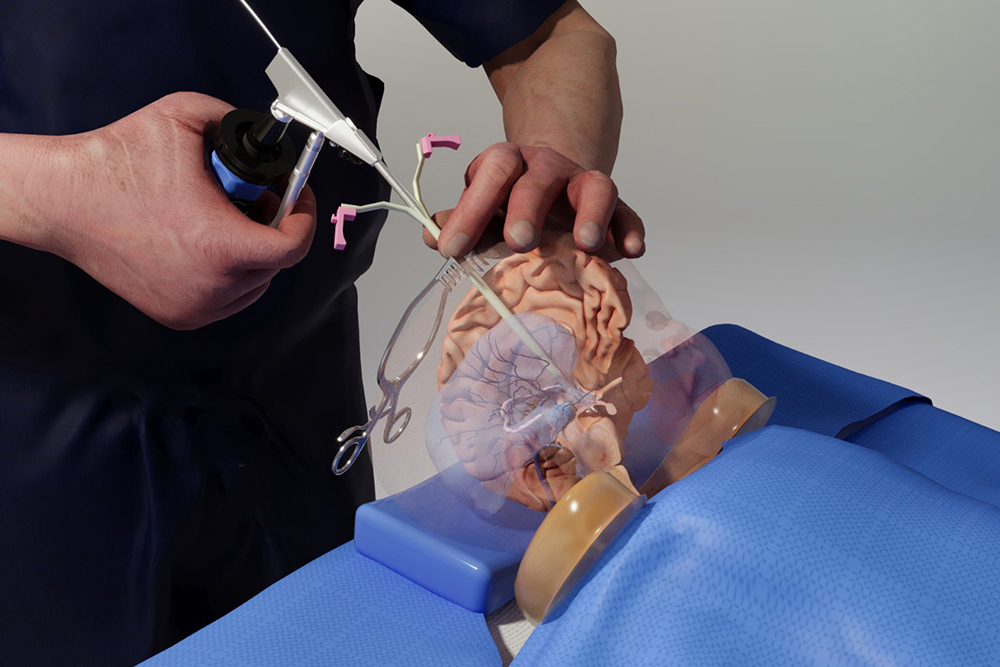

Arnasi’s next medical products will be used to prevent biofilm formation and bacterial infections associated with implants and catheters, and will also help people with cystic fibrosis.

“We want to create products that really help people,” Salain says. “Anything that’s implantable in the body, whether it’s a catheter, a hip, knee, or joint replacement, a breast implant, a bladder sling — those things lend themselves to our technology.”

From packages to patients

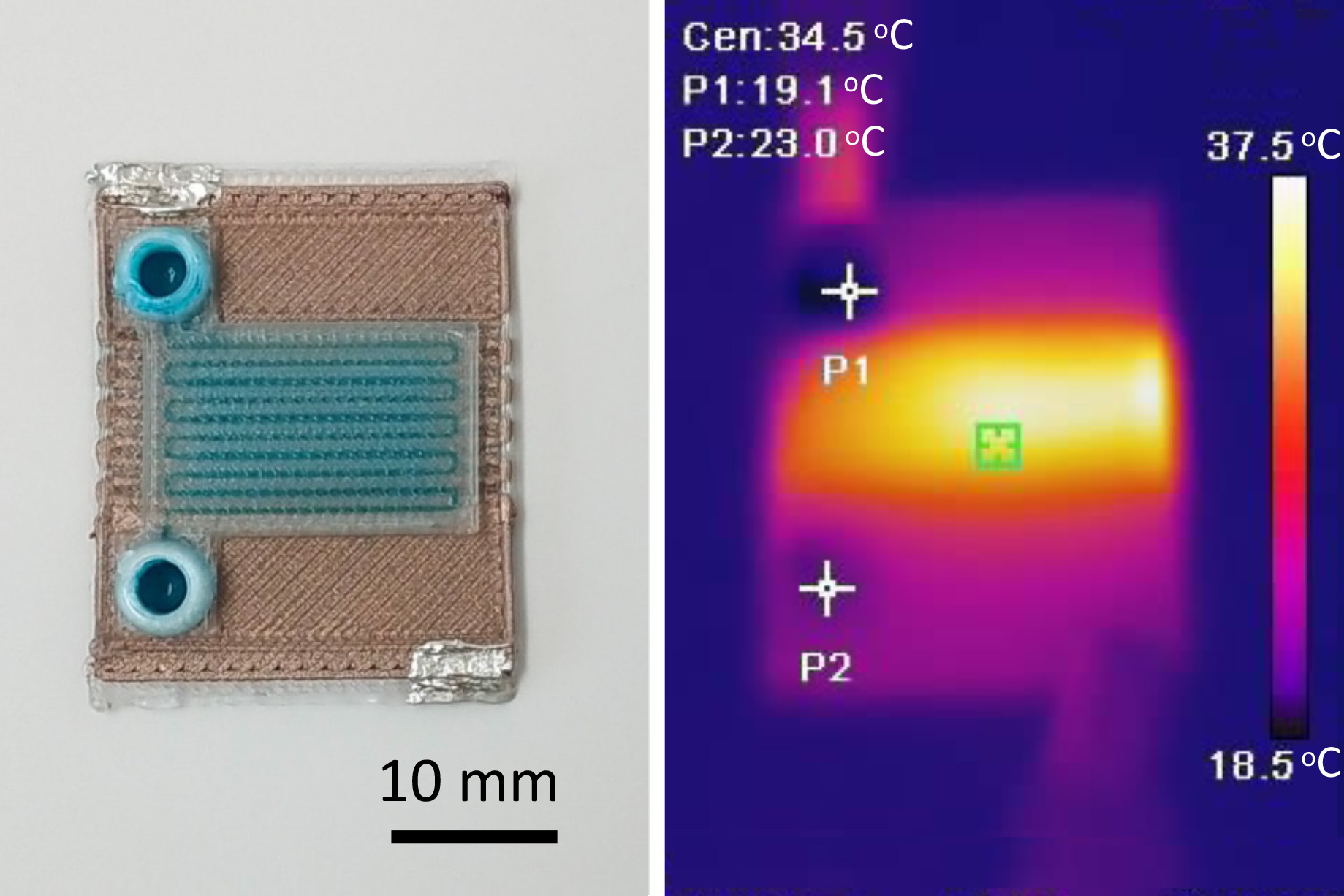

Varanasi first developed Arnasi’s liquid-impregnated surface technology with Smith, Arnasi’s co-founder and current CTO, when Smith was a graduate student in Varanasi’s lab. The research was initially funded by the MIT Energy Initiative and the MIT Deshpande Center to study solid-liquid interfaces with broad applications in energy, water, and more.

“There’s this fundamental friction constraint called the no-slip boundary condition between a liquid and a solid, so by creating a new surface in which we can infuse a liquid that is less viscous, we can now get the product to easily slide on surfaces,” Varanasi explains. “That aha moment meant we could get around a fundamental constraint in fluid dynamics.”

Still, sticky surfaces are everywhere, and the scientific co-founders had to decide where to apply their technology first. Shortly after the invention, Varanasi was at home trying to decide on the best application when he saw his wife across the kitchen table trying to get honey out of a bottle. It was another aha moment.

Soon after, Varanasi’s team entered the MIT $100K Entrepreneurship Competition. The competition, along with the accompanying videos of ketchup and other materials sliding out of their bottles with ease, created a media storm and a frenzy of attention.

“The press exploded,” Varanasi says. “For three months, my phone didn’t stop ringing. My group website crashed. There was a lot of market pull and in response, we founded the company.”

Arnasi, still operating as LiquiGlide, licensed the intellectual property from MIT’s Technology Licensing Office and eventually signed large deals with some of the world’s biggest consumer packaged goods companies, which used it to create products like fully recyclable toothpaste.

“There is so much waste just because we can’t get all of the product, be it food, cosmetics, or medical products, out of containers,” Varanasi says. “Fifty billion-plus packages are sold every year, and 5 to 10 percent of product is left behind on average. So, you can imagine the CO2 footprint of the wasted product. And even though a lot of this is in recyclable packaging, they can’t be recycled because you need to wash out all the product. The water footprint of this is huge, not to mention the wasted product.”

While all of that was going on, Arnasi’s team was also looking into the biomedical space. For instance, Varanasi’s lab previously showed the technology could be used to prevent occlusion from blood clots and thrombosis and reduce biofilm formation, among other applications.

After studying the industry and speaking with patients and nurses, Arnasi realized a better lubricant for ostomy pouches could improve millions of people’s lives.

“Stool accumulates in these pouches outside of people’s bodies, and they need to empty it up to eight times a day,” explains Brienne Engel, Arnasi’s director of business development. “That process has a lot of challenges associated with it: It can be difficult to drain, leaving a lot of mass behind, it takes a long time to drain, so you can spend a long time in a restroom trying to clear out your pouch, and then there’s something called pancaking that can push the pouch off the [surgical opening], introducing issues like leakage, odor, and failure of the ostomy pouching system.”

Ostomy and beyond

Arnasi’s ostomy lubricant, Revel, is the first non-water-based solution on the market, and as-yet-unpublished third-party testing has shown it allows for faster, more complete pouch drainage, along with other benefits.

“A lot of the existing brands treat their consumers like patients, but what we’ve found is they want to be treated like people and have a consumer experience,” Salain says. “The magic we saw with our toothpaste product was people got this amazing consumer experience out of it, and we wanted to create the same thing with Revel.”

Now Arnasi is planning to use its technology in medical products for skin infections and cystic fibrosis, as well as in implantable catheters and joint replacements. Arnasi’s team believes those last two use cases could prevent millions of deadly infections.

“When people are getting hemodialysis catheters, they have a 33 percent risk of developing infections, and those that do get those infections have a 25 percent chance of dying from them,” Engel says. “Taking our underlying technology and applying it to catheters, for example, imparts anti-biofilm properties and also prevents things like thrombosis, or blood clotting on the outside of these catheters, which is a problem in and of itself but also provides a space for bacteria to seed.”

Ultimately, Varanasi’s team is balancing progress on its biomedical applications with exploring other avenues for its technology, including energy, manufacturing, and agriculture, to maximize its impact on the world.

“We think of this as a company with many companies within it because of all the different areas that it can impact. Liquid-solid interfaces are ubiquitous, viscous products are everywhere, and deploying this technology to solve difficult problems has been a dream,” Varanasi says. “It’s a great example of how MIT technology can be used for the benefit of humankind.”

Image: Courtesy of LiquiGlide