Microsoft breaks some Linux dual-boots in a recent Windows update

Read the full article on GamingOnLinux.

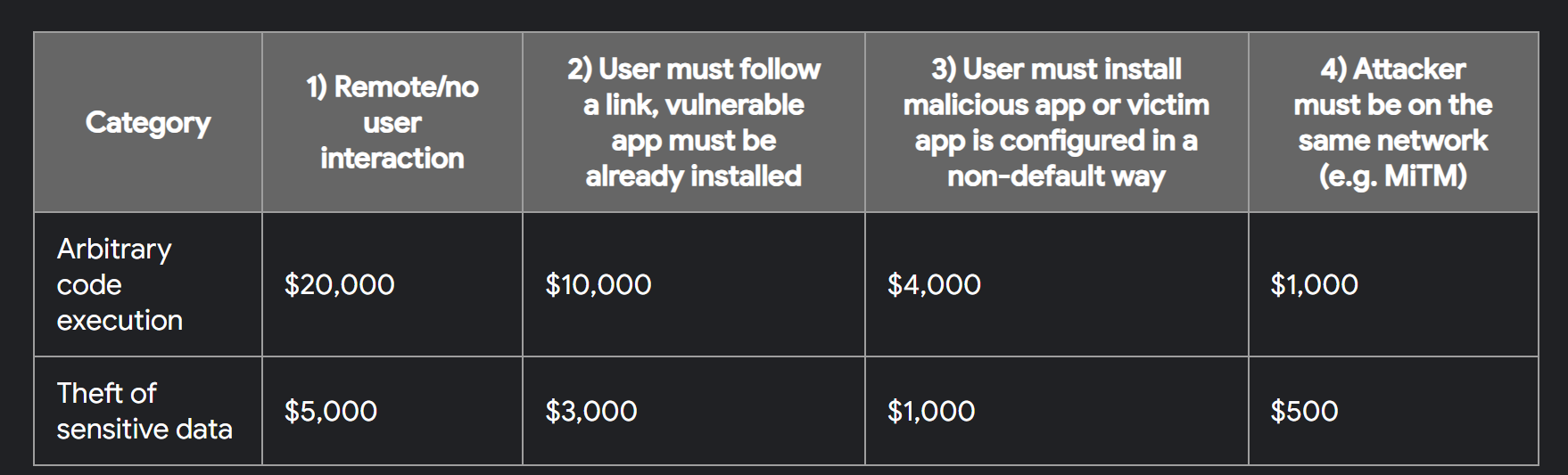

Security vulnerabilities are lurking in most of the apps you use on a day-to-day basis; there’s just no way for most companies to preemptively fix every possible security issue because of human error, deadlines, lack of resources, and a multitude of other factors. That’s why many organizations run bug bounty programs to get external help with fixing these issues. The Google Play Security Reward Program (GPSRP) is an example of a bug bounty program that paid security researchers to find vulnerabilities in popular Android apps, but it’s being shut down later this month.

Google announced the Google Play Security Reward Program back in October 2017 as a way to incentivize security researchers to find and, most importantly, responsibly disclose vulnerabilities in popular Android apps distributed through the Google Play Store.

When the GPSRP first launched, it was limited to a select number of researchers who were only allowed to submit eligible vulnerabilities that affected applications from a small number of participating developers. Eligible vulnerabilities included those that lead to remote code execution or theft of insecure private data, with payouts initially reaching a maximum of $5,000 for vulnerabilities of the former type and $1,000 for the latter type.

Over the years, the scope of the Google Play Security Reward Program expanded to cover developers of some of the biggest Android apps such as Airbnb, Alibaba, Amazon, Dropbox, Facebook, Grammarly, Instacart, Line, Lyft, Opera, PayPal, Pinterest, Shopify, Snapchat, Spotify, Telegram, Tesla, TikTok, Tinder, VLC, and Zomato, among many others.

In August 2019, Google opened up the GPSRP to cover all apps in Google Play with at least 100 million installations, even if they didn’t have their own vulnerability disclosure or bug bounty program. In July 2019, the rewards were increased to a maximum of $20,000 for remote code execution bugs and $3,000 for bugs that led to the theft of insecure private data or access to protected app components.

The purpose of the Google Play Security Reward Program was simple: Google wanted to make the Play Store a more secure destination for Android apps. According to the company, vulnerability data they collected from the program was used to help create automated checks that scanned all apps available in Google Play for similar vulnerabilities. In 2019, Google said these automated checks helped more than 300,000 developers fix more than 1,000,000 apps on Google Play. Thus, the downstream effect of the GPSRP is that fewer vulnerable apps are distributed to Android users.

However, Google has now decided to wind down the Google Play Security Reward Program. In an email to participating developers, such as Sean Pesce, the company announced that the GPSRP will end on August 31st.

The reason Google gave is that the program has seen a decrease in the number of actionable vulnerabilities reported. The company credits this success to the “overall increase in the Android OS security posture and feature hardening efforts.”

The full email sent to developers is reproduced below:

“Dear Researchers,

I hope this email finds you well. I am writing to express my sincere gratitude to all of you who have submitted bugs to the Google Play Security Reward Program over the past few years. Your contributions have been invaluable in helping us to improve the security of Android and Google Play.

As a result of the overall increase in the Android OS security posture and feature hardening efforts, we’ve seen fewer actionable vulnerabilities reported by the research community. Due to this decrease in actionable vulnerabilities reported, we are winding down the GPSRP program. The GPSRP program will end on August 31st. Any reports submitted before then will be triaged by September 15th. Final reward decisions will be made before September 30th when the program is officially discontinued. Final payments may take a few weeks to process.

I want to assure you that all of your reports will be reviewed and addressed before the program ends. We greatly value your input and want to make sure that any issues you have identified are resolved.

Thank you again for your support of the GPSRP program. We hope that you will continue working with us, on programs like the Android and Google Devices Security Reward Program.

Best regards,

Tony

On behalf of the Android Security Team”

In September of 2018, nearly a year after the GPSRP was announced, Google said that researchers had reported over 30 vulnerabilities through the program, earning a combined bounty of over $100k. Approximately a year later, in August of 2019, Google said that the program had paid out over $265k in bounties.

As far as we know, the company hasn’t disclosed how much they’ve paid out to security researchers since then, but we’d be surprised if the number isn’t notably higher than $265k given how long it’s been since the last disclosure and the number of popular apps in the crosshairs of security researchers.

Google shutting down this program is a mixed bag for users. On one hand, it means that popular apps have largely gotten their act together, but on the other hand, it means that some security researchers won’t have the incentive to disclose any future vulnerabilities responsibly, especially if those vulnerabilities impact an app made by a developer who doesn’t run their own bug bounty program.

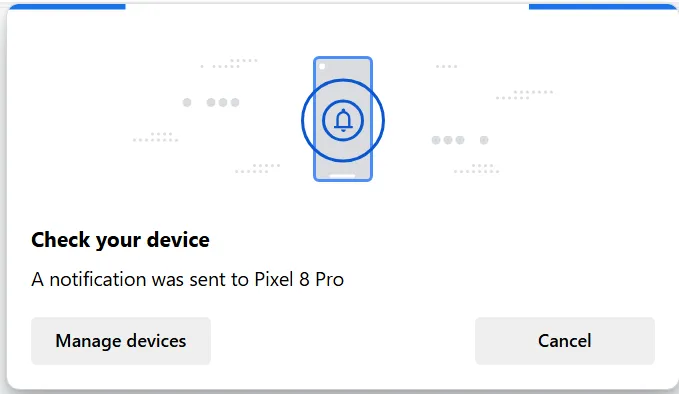

Google’s web-based Home implementation evidently now requires passkeys. Passkeys are yet another hurdle to prevent hackers from gaining entry to your stuff and involve a second factor of authentication, in this case my Pixel 8 Pro and a thumbprint.

Something happened when I attempted to use my phone as a passkey, and that is it failed. Failed hard, claiming it was not near the computer I was using, and I had to repeat the steps and pay attention.

The passkey request on your phone says that something has requested access. You have no options but to tap the box, and then it asks for your thumbprint or other unlock. At no point on the passkey screen I was presented with was there an option of “hell no, this isn’t me.”

I attempted to recreate the steps because, well, I wanted either a screenshot or a picture of what was happening, but the computer I am posting this on now is unable to send a passkey request using Edge (Chrome has its 24-hour token, so that’s not happening again).

The inability to trigger a request for a passkey unlock is concerning enough, but there being no clear label for what is requesting it is more so. As a note, there is a site name listed (google.com). I managed to force a passkey unlock using a QR code, as it would otherwise never actually do anything.

There’s just a standard looking fingerprint unlock with small text saying something wants to identify me. No method visible to not validate and move on.

Pretty sure this lack of text to let people know their account is going to be accessed somewhere else is going to come back and bite someone on the butt.

“What? oh ignore that just unlock your phone and tell me what you see…” $15,000 later…

Would really love to see a more verbose implementation that includes “you’re unlocking your account to a different device, do you really want to do this?” message – or something similar.

Are passkeys really any good? by Paul E King first appeared on Pocketables.

A technical paper titled “HYPERPILL: Fuzzing for Hypervisor-bugs by Leveraging the Hardware Virtualization Interface” was presented at the August 2024 USENIX Security Symposium by researchers at EPFL, Boston University, and Zhejiang University.

“The security guarantees of cloud computing depend on the isolation guarantees of the underlying hypervisors. Prior works have presented effective methods for automatically identifying vulnerabilities in hypervisors. However, these approaches are limited in scope. For instance, their implementation is typically hypervisor-specific and limited by requirements for detailed grammars, access to source-code, and assumptions about hypervisor behaviors. In practice, complex closed-source and recent open-source hypervisors are often not suitable for off-the-shelf fuzzing techniques.

HYPERPILL introduces a generic approach for fuzzing arbitrary hypervisors. HYPERPILL leverages the insight that although hypervisor implementations are diverse, all hypervisors rely on the identical underlying hardware-virtualization interface to manage virtual-machines. To take advantage of the hardware-virtualization interface, HYPERPILL makes a snapshot of the hypervisor, inspects the snapshotted hardware state to enumerate the hypervisor’s input-spaces, and leverages feedback-guided snapshot-fuzzing within an emulated environment to identify vulnerabilities in arbitrary hypervisors. In our evaluation, we found that beyond being the first hypervisor-fuzzer capable of identifying vulnerabilities in arbitrary hypervisors across all major attack-surfaces (i.e., PIO/MMIO/Hypercalls/DMA), HYPERPILL also outperforms state-of-the-art approaches that rely on access to source-code, due to the granularity of feedback provided by HYPERPILL’s emulation-based approach. In terms of coverage, HYPERPILL outperformed past fuzzers for 10/12 QEMU devices, without the API hooking or source-code instrumentation techniques required by prior works. HYPERPILL identified 26 new bugs in recent versions of QEMU, Hyper-V, and macOS Virtualization Framework across four device-categories.”

Find the technical paper here. Published August 2024. Distinguished Paper Award Winner.

Bulekov, Alexander, Qiang Liu, Manuel Egele, and Mathias Payer. “HYPERPILL: Fuzzing for Hypervisor-bugs by Leveraging the Hardware Virtualization Interface.” In 33rd USENIX Security Symposium (USENIX Security 24). 2024.

Further Reading

SRAM Security Concerns Grow

Volatile memory threat increases as chips are disaggregated into chiplets, making it easier to isolate memory and slow data degradation.

The post A Generic Approach For Fuzzing Arbitrary Hypervisors appeared first on Semiconductor Engineering.

“We propose, FrameFlip, a novel attack for depleting DNN model inference with runtime code fault injections. Notably, Frameflip operates independently of the DNN models deployed and succeeds with only a single bit-flip injection. This fundamentally distinguishes it from the existing DNN inference depletion paradigm that requires injecting tens of deterministic faults concurrently. Since our attack performs at the universal code or library level, the mandatory code snippet can be perversely called by all mainstream machine learning frameworks, such as PyTorch and TensorFlow, dependent on the library code. Using DRAM Rowhammer to facilitate end-to-end fault injection, we implement Frameflip across diverse model architectures (LeNet, VGG-16, ResNet-34 and ResNet-50) with different datasets (FMNIST, CIFAR-10, GTSRB, and ImageNet). With a single bit fault injection, Frameflip achieves high depletion efficacy that consistently renders the model inference utility as no better than guessing. We also experimentally verify that identified vulnerable bits are almost equally effective at depleting different deployed models. In contrast, transferability is unattainable for all existing state-of-the-art model inference depletion attacks. Frameflip is shown to be evasive against all known defenses, generally due to the nature of current defenses operating at the model level (which is model-dependent) in lieu of the underlying code level.”

Find the technical paper here. Published August 2024. Distinguished Paper Award Winner.

Li, Shaofeng, Xinyu Wang, Minhui Xue, Haojin Zhu, Zhi Zhang, Yansong Gao, Wen Wu, and Xuemin Sherman Shen. “Yes, One-Bit-Flip Matters! Universal DNN Model Inference Depletion with Runtime Code Fault Injection.” In Proceedings of the 33rd USENIX Security Symposium. 2024.

Related Reading

Why It’s So Hard To Secure AI Chips

Much of the hardware is the same, but AI systems have unique vulnerabilities that require novel defense strategies.

The post A Novel Attack For Depleting DNN Model Inference With Runtime Code Fault Injections appeared first on Semiconductor Engineering.

A technical paper titled “InSpectre Gadget: Inspecting the Residual Attack Surface of Cross-privilege Spectre v2” was presented at the August 2024 USENIX Security Symposium by researchers at Vrije Universiteit Amsterdam.

“Spectre v2 is one of the most severe transient execution vulnerabilities, as it allows an unprivileged attacker to lure a privileged (e.g., kernel) victim into speculatively jumping to a chosen gadget, which then leaks data back to the attacker. Spectre v2 is hard to eradicate. Even on last-generation Intel CPUs, security hinges on the unavailability of exploitable gadgets. Nonetheless, with (i) deployed mitigations—eIBRS, no-eBPF, (Fine)IBT—all aimed at hindering many usable gadgets, (ii) existing exploits relying on now-privileged features (eBPF), and (iii) recent Linux kernel gadget analysis studies reporting no exploitable gadgets, the common belief is that there is no residual attack surface of practical concern.

In this paper, we challenge this belief and uncover a significant residual attack surface for cross-privilege Spectre-v2 attacks. To this end, we present InSpectre Gadget, a new gadget analysis tool for in-depth inspection of Spectre gadgets. Unlike existing tools, ours performs generic constraint analysis and models knowledge of advanced exploitation techniques to accurately reason over gadget exploitability in an automated fashion. We show that our tool can not only uncover new (unconventionally) exploitable gadgets in the Linux kernel, but that those gadgets are sufficient to bypass all deployed Intel mitigations. As a demonstration, we present the first native Spectre-v2 exploit against the Linux kernel on last-generation Intel CPUs, based on the recent BHI variant and able to leak arbitrary kernel memory at 3.5 kB/sec. We also present a number of gadgets and exploitation techniques to bypass the recent FineIBT mitigation, along with a case study on a 13th Gen Intel CPU that can leak kernel memory at 18 bytes/sec.”

Find the technical paper here. Published August 2024. Distinguished Paper Award Winner. Find additional information here on VU Amsterdam’s site.

Wiebing, Sander, Alvise de Faveri Tron, Herbert Bos, and Cristiano Giuffrida. “InSpectre Gadget: Inspecting the residual attack surface of cross-privilege Spectre v2.” In USENIX Security. 2024.

Further Reading

Defining Chip Threat Models To Identify Security Risks

Not every device has the same requirements, and even the best security needs to adapt.

The post Uncovering A Significant Residual Attack Surface For Cross-Privilege Spectre-V2 Attacks appeared first on Semiconductor Engineering.

A technical paper titled “GoFetch: Breaking Constant-Time Cryptographic Implementations Using Data Memory-Dependent Prefetchers” was presented at the August 2024 USENIX Security Symposium by researchers at University of Illinois Urbana-Champaign, University of Texas at Austin, Georgia Institute of Technology, University of California Berkeley, University of Washington, and Carnegie Mellon University.

“Microarchitectural side-channel attacks have shaken the foundations of modern processor design. The cornerstone defense against these attacks has been to ensure that security-critical programs do not use secret-dependent data as addresses. Put simply: do not pass secrets as addresses to, e.g., data memory instructions. Yet, the discovery of data memory-dependent prefetchers (DMPs)—which turn program data into addresses directly from within the memory system—calls into question whether this approach will continue to remain secure.

This paper shows that the security threat from DMPs is significantly worse than previously thought and demonstrates the first end-to-end attacks on security-critical software using the Apple m-series DMP. Undergirding our attacks is a new understanding of how DMPs behave which shows, among other things, that the Apple DMP will activate on behalf of any victim program and attempt to “leak” any cached data that resembles a pointer. From this understanding, we design a new type of chosen-input attack that uses the DMP to perform end-to-end key extraction on popular constant-time implementations of classical (OpenSSL Diffie-Hellman Key Exchange, Go RSA decryption) and post-quantum cryptography (CRYSTALS-Kyber and CRYSTALS-Dilithium).”

Find the technical paper here. Published August 2024.

Chen, Boru, Yingchen Wang, Pradyumna Shome, Christopher W. Fletcher, David Kohlbrenner, Riccardo Paccagnella, and Daniel Genkin. “GoFetch: Breaking constant-time cryptographic implementations using data memory-dependent prefetchers.” In Proc. USENIX Secur. Symp, pp. 1-21. 2024.

Further Reading

Chip Security Now Depends On Widening Supply Chain

How tighter HW-SW integration and increasing government involvement are changing the security landscape for chips and systems.

The post Data Memory-Dependent Prefetchers Pose SW Security Threat By Breaking Cryptographic Implementations appeared first on Semiconductor Engineering.

A technical paper titled “ABACuS: All-Bank Activation Counters for Scalable and Low Overhead RowHammer Mitigation” was presented at the August 2024 USENIX Security Symposium by researchers at ETH Zurich.

“We introduce ABACuS, a new low-cost hardware-counter-based RowHammer mitigation technique that performance-, energy-, and area-efficiently scales with worsening RowHammer vulnerability. We observe that both benign workloads and RowHammer attacks tend to access DRAM rows with the same row address in multiple DRAM banks at around the same time. Based on this observation, ABACuS’s key idea is to use a single shared row activation counter to track activations to the rows with the same row address in all DRAM banks. Unlike state-of-the-art RowHammer mitigation mechanisms that implement a separate row activation counter for each DRAM bank, ABACuS implements fewer counters (e.g., only one) to track an equal number of aggressor rows.

Our comprehensive evaluations show that ABACuS securely prevents RowHammer bitflips at low performance/energy overhead and low area cost. We compare ABACuS to four state-of-the-art mitigation mechanisms. At a near-future RowHammer threshold of 1000, ABACuS incurs only 0.58% (0.77%) performance and 1.66% (2.12%) DRAM energy overheads, averaged across 62 single-core (8-core) workloads, requiring only 9.47 KiB of storage per DRAM rank. At the RowHammer threshold of 1000, the best prior low-area-cost mitigation mechanism incurs 1.80% higher average performance overhead than ABACuS, while ABACuS requires 2.50× smaller chip area to implement. At a future RowHammer threshold of 125, ABACuS performs very similarly to (within 0.38% of the performance of) the best prior performance- and energy-efficient RowHammer mitigation mechanism while requiring 22.72× smaller chip area. We show that ABACuS’s performance scales well with the number of DRAM banks. At the RowHammer threshold of 125, ABACuS incurs 1.58%, 1.50%, and 2.60% performance overheads for 16-, 32-, and 64-bank systems across all single-core workloads, respectively. ABACuS is freely and openly available at https://github.com/CMU-SAFARI/ABACuS.”
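The key idea in the abstract, one shared counter per row address instead of one counter per bank, can be sketched roughly as follows. This is a simplified illustration, not the paper's mechanism: the class names, the threshold handling, and the workload are assumptions, and the shared version here simply counts every activation, so it can over-count a given row but never under-count it.

```python
from collections import defaultdict


class PerBankCounters:
    """Baseline: a separate activation counter for every (bank, row) pair."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.counts = defaultdict(int)

    def activate(self, bank, row):
        """Record an activation; return True when the threshold is reached."""
        self.counts[(bank, row)] += 1
        if self.counts[(bank, row)] >= self.threshold:
            self.counts[(bank, row)] = 0
            return True
        return False


class SharedRowCounters:
    """Shared scheme: one counter per row address, used by all banks."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.counts = defaultdict(int)

    def activate(self, bank, row):
        """Record an activation in any bank against the shared row counter."""
        self.counts[row] += 1  # the bank is ignored: all banks share one counter
        if self.counts[row] >= self.threshold:
            self.counts[row] = 0
            return True
        return False


# Hypothetical workload: the same row address is touched in four banks in turn.
per_bank = PerBankCounters(threshold=1000)
shared = SharedRowCounters(threshold=1000)
for step in range(400):
    bank = step % 4
    per_bank.activate(bank, row=7)
    shared.activate(bank, row=7)

print(len(per_bank.counts), "counters used by the per-bank scheme")
print(len(shared.counts), "counter used by the shared scheme")
```

The storage saving comes from the access pattern the authors describe: when the same row address is hot in many banks at once, a single counter stands in for all of them.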

Find the technical paper here.

Olgun, Ataberk, Yahya Can Tugrul, Nisa Bostanci, Ismail Emir Yuksel, Haocong Luo, Steve Rhyner, Abdullah Giray Yaglikci, Geraldo F. Oliveira, and Onur Mutlu. “Abacus: All-bank activation counters for scalable and low overhead rowhammer mitigation.” In USENIX Security. 2024.

Further Reading

Securing DRAM Against Evolving Rowhammer Threats

A multi-layered, system-level approach is crucial to DRAM protection.

The post A New Low-Cost HW-Counterbased RowHammer Mitigation Technique appeared first on Semiconductor Engineering.

Today, almost all data on the Internet, including bank transactions, medical records, and secure chats, is protected with an encryption scheme called RSA (named after its creators Rivest, Shamir, and Adleman). This scheme is based on a simple fact—it is virtually impossible to calculate the prime factors of a large number in a reasonable amount of time, even on the world’s most powerful supercomputer. Unfortunately, large quantum computers, if and when they are built, would find this task a breeze, thus undermining the security of the entire Internet.

Luckily, quantum computers are only better than classical ones at a select class of problems, and there are plenty of encryption schemes where quantum computers don’t offer any advantage. Today, the U.S. National Institute of Standards and Technology (NIST) announced the standardization of three post-quantum cryptography encryption schemes. With these standards in hand, NIST is encouraging computer system administrators to begin transitioning to post-quantum security as soon as possible.

“Now our task is to replace the protocol in every device, which is not an easy task.” —Lily Chen, NIST

These standards are likely to be a big element of the Internet’s future. NIST’s previous cryptography standards, developed in the 1970s, are used in almost all devices, including Internet routers, phones, and laptops, says Lily Chen, head of the cryptography group at NIST, who led the standardization process. But adoption will not happen overnight.

“Today, public key cryptography is used everywhere in every device,” Chen says. “Now our task is to replace the protocol in every device, which is not an easy task.”

Most experts believe large-scale quantum computers won’t be built for at least another decade. So why is NIST worried about this now? There are two main reasons.

First, many devices that use RSA security, like cars and some IoT devices, are expected to remain in use for at least a decade. So they need to be equipped with quantum-safe cryptography before they are released into the field.

“For us, it’s not an option to just wait and see what happens. We want to be ready and implement solutions as soon as possible.” —Richard Marty, LGT Financial Services

Second, a nefarious individual could potentially download and store encrypted data today, and decrypt it once a large enough quantum computer comes online. This concept is called “harvest now, decrypt later” and by its nature, it poses a threat to sensitive data now, even if that data can only be cracked in the future.

Security experts in various industries are starting to take the threat of quantum computers seriously, says Joost Renes, principal security architect and cryptographer at NXP Semiconductors. “Back in 2017, 2018, people would ask ‘What’s a quantum computer?’” Renes says. “Now, they’re asking ‘When will the PQC standards come out and which one should we implement?’”

Richard Marty, chief technology officer at LGT Financial Services, agrees. “For us, it’s not an option to just wait and see what happens. We want to be ready and implement solutions as soon as possible, to avoid harvest now and decrypt later.”

NIST announced a public competition for the best PQC algorithm back in 2016. They received a whopping 82 submissions from teams in 25 different countries. Since then, NIST has gone through 4 elimination rounds, finally whittling the pool down to four algorithms in 2022.

This lengthy process was a community-wide effort, with NIST taking input from the cryptographic research community, industry, and government stakeholders. “Industry has provided very valuable feedback,” says NIST’s Chen.

These four winning algorithms had intense-sounding names: CRYSTALS-Kyber, CRYSTALS-Dilithium, SPHINCS+, and FALCON. Sadly, the names did not survive standardization: The algorithms are now known as Federal Information Processing Standard (FIPS) 203 through 206. FIPS 203, 204, and 205 are the focus of today’s announcement from NIST. FIPS 206, the algorithm previously known as FALCON, is expected to be standardized in late 2024.

The algorithms fall into two categories: general encryption, used to protect information transferred via a public network, and digital signature, used to authenticate individuals. Digital signatures are essential for preventing malware attacks, says Chen.

Every cryptography protocol is based on a math problem that’s hard to solve but easy to check once you have the correct answer. For RSA, it’s factoring large numbers into two primes—it’s hard to figure out what those two primes are (for a classical computer), but once you have one it’s straightforward to divide and get the other.
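The asymmetry described above can be illustrated with a toy sketch. The two primes are tiny, hypothetical stand-ins chosen for illustration (real RSA moduli are far larger), and trial division is simply the most basic factoring method, not what an attacker would actually use.

```python
def factor_by_trial_division(n):
    """Hard direction: search for a prime factor of n, counting the attempts."""
    attempts = 0
    candidate = 2
    while candidate * candidate <= n:
        attempts += 1
        if n % candidate == 0:
            return candidate, attempts
        candidate += 1
    return n, attempts  # no divisor found, so n itself is prime


def other_factor(n, known_prime):
    """Easy direction: given one prime factor, one division yields the other."""
    quotient, remainder = divmod(n, known_prime)
    if remainder != 0:
        raise ValueError("known_prime does not divide n")
    return quotient


# Toy values for illustration only.
p, q = 10007, 10009
n = p * q

found, attempts = factor_by_trial_division(n)
print(f"searching took {attempts} attempts to find {found}")
print(f"one division recovers the other prime: {other_factor(n, found)}")
```

Even at this toy size the search takes thousands of attempts while the check is a single division, and the gap widens rapidly as the primes grow.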

“We have a few instances of [PQC], but for a full transition, I couldn’t give you a number, but there’s a lot to do.” —Richard Marty, LGT Financial Services

Two out of the three schemes already standardized by NIST, FIPS 203 and FIPS 204 (as well as the upcoming FIPS 206), are based on another hard problem, called lattice cryptography. Lattice cryptography rests on the tricky problem of finding the least common multiple among a set of numbers. Usually, this is implemented in many dimensions, or on a lattice, where the least common multiple is a vector.

The third standardized scheme, FIPS 205, is based on hash functions, that is, on converting a message to an encrypted string that’s difficult to reverse.
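As a rough illustration of the one-way property, here is a minimal sketch using SHA-256 from Python's standard library. SHA-256 is used only because it is a familiar hash function; the sample messages are made up, and the way FIPS 205 builds signatures out of hash functions is considerably more involved and is not shown here.

```python
import hashlib


def digest(message: str) -> str:
    """Return the SHA-256 digest of a message as 64 hexadecimal characters."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


# Hypothetical messages that differ by a single character.
original = digest("transfer 100 to Alice")
tampered = digest("transfer 900 to Alice")

print(original)
print(tampered)
# Computing a digest is cheap and repeatable, but the output has a fixed
# length and offers no practical way to read the message back out of it.
```

Strictly speaking the output is a fixed-length digest rather than encrypted text, since there is no key that turns it back into the message.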

The standards include the encryption algorithms’ computer code, instructions for how to implement it, and intended uses. There are three levels of security for each protocol, designed to future-proof the standards in case some weaknesses or vulnerabilities are found in the algorithms.

Earlier this year, a pre-print published to the arXiv alarmed the PQC community. The paper, authored by Yilei Chen of Tsinghua University in Beijing, claimed to show that lattice-based cryptography, the basis of two out of the three NIST protocols, was not, in fact, immune to quantum attacks. On further inspection, Yilei Chen’s argument turned out to have a flaw—and lattice cryptography is still believed to be secure against quantum attacks.

On the one hand, this incident highlights the central problem at the heart of all cryptography schemes: There is no proof that any of the math problems the schemes are based on are actually “hard.” The only proof, even for the standard RSA algorithms, is that people have been trying to break the encryption for a long time, and have all failed. Since post-quantum cryptography standards, including lattice cryptography, are newer, there is less certainty that no one will find a way to break them.

That said, the failure of this latest attempt only builds on the algorithm’s credibility. The flaw in the paper’s argument was discovered within a week, signaling that there is an active community of experts working on this problem. “The result of that paper is not valid, that means the pedigree of the lattice-based cryptography is still secure,” says NIST’s Lily Chen (no relation to Tsinghua University’s Yilei Chen). “People have tried hard to break this algorithm. A lot of people are trying, they try very hard, and this actually gives us confidence.”

NIST’s announcement is exciting, but the work of transitioning all devices to the new standards has only just begun. It is going to take time, and money, to fully protect the world from the threat of future quantum computers.

“We’ve spent 18 months on the transition and spent about half a million dollars on it,” says Marty of LGT Financial Services. “We have a few instances of [PQC], but for a full transition, I couldn’t give you a number, but there’s a lot to do.”

Last Tuesday, loads of Linux users, many running packages released as recently as this year, started reporting their devices were failing to boot. Instead, they received a cryptic error message that included the phrase: “Something has gone seriously wrong.”

The cause: an update Microsoft issued as part of its monthly patch release. It was intended to close a 2-year-old vulnerability in GRUB, an open source boot loader used to start up many Linux devices. The vulnerability, with a severity rating of 8.6 out of 10, made it possible for hackers to bypass secure boot, the industry standard for ensuring that devices running Windows or other operating systems don’t load malicious firmware or software during the bootup process. CVE-2022-2601 was discovered in 2022, but for unclear reasons, Microsoft patched it only last Tuesday.

Tuesday’s update left dual-boot devices—meaning those configured to run both Windows and Linux—no longer able to boot into the latter when Secure Boot was enforced. When users tried to load Linux, they received the message: “Verifying shim SBAT data failed: Security Policy Violation. Something has gone seriously wrong: SBAT self-check failed: Security Policy Violation.” Almost immediately support and discussion forums lit up with reports of the failure.
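The error message refers to SBAT (Secure Boot Advanced Targeting), which lets boot components be rejected when their declared generation number is older than a required minimum. A minimal sketch of that kind of comparison follows, assuming a simplified `component,generation` format and made-up generation numbers; the function names are hypothetical, and the real data carries more fields than shown here.

```python
def parse_levels(text):
    """Parse lines of 'component,generation[,...]' into {component: generation}."""
    levels = {}
    for line in text.strip().splitlines():
        fields = line.split(",")
        levels[fields[0]] = int(fields[1])
    return levels


def find_violations(binary_levels, minimum_levels):
    """Return the components whose generation is below the required minimum."""
    return sorted(
        name
        for name, generation in binary_levels.items()
        if generation < minimum_levels.get(name, 0)
    )


# Hypothetical values: an older boot loader build versus an updated minimum.
old_bootloader = parse_levels("sbat,1\ngrub,1")
revocation_list = parse_levels("sbat,1\ngrub,3")

violations = find_violations(old_bootloader, revocation_list)
if violations:
    print("Security Policy Violation:", ", ".join(violations))
else:
    print("SBAT check passed")
```

Under this model, raising the minimum generation is enough to block every older build of a component at once, which is consistent with previously working Linux installs suddenly refusing to boot after the update.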

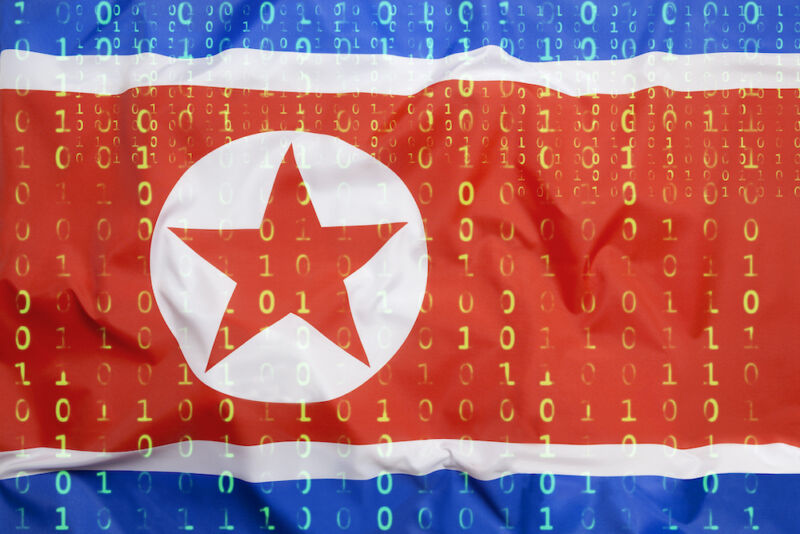

A Windows zero-day vulnerability recently patched by Microsoft was exploited by hackers working on behalf of the North Korean government so they could install custom malware that’s exceptionally stealthy and advanced, researchers reported Monday.

The vulnerability, tracked as CVE-2024-38193, was one of six zero-days—meaning vulnerabilities known or actively exploited before the vendor has a patch—fixed in Microsoft’s monthly update release last Tuesday. Microsoft said the vulnerability—in a class known as a "use after free"—was located in AFD.sys, the binary file for what’s known as the ancillary function driver and the kernel entry point for the Winsock API. Microsoft warned that the zero-day could be exploited to give attackers system privileges, the maximum system rights available in Windows and a required status for executing untrusted code.

Microsoft warned at the time that the vulnerability was being actively exploited but provided no details about who was behind the attacks or what their ultimate objective was. On Monday, researchers with Gen—the security firm that discovered the attacks and reported them privately to Microsoft—said the threat actors were part of Lazarus, the name researchers use to track a hacking outfit backed by the North Korean government.

Update, August 2, 2024 (04:10 PM ET): Google has reached out to us with a message of reassurance:

Android users are automatically protected against known versions of this malware by Google Play Protect, which is on by default on Android devices with Google Play Services.

Of course, the key word there is “known” versions, and as the team at Cleafy reported, BingoMod is still evolving and working on new tricks to evade detection. Play Protect isn’t going to rest on its laurels, either, so expect this cat-and-mouse game to continue. And for your own part, keep using best practices when it comes to sourcing your apps.

Original article, August 2, 2024 (11:44 AM ET): Getting malware on your smartphone is just a recipe for a bad day, but even within that misery there’s a spectrum of how awful things will be. Some malware may be interested in exploiting its position on your device to send spam texts or mine crypto. But the really dangerous stuff just wants to straight-up steal from you, and the example we’re checking out today has a particularly nasty going-away present for your phone when it’s done.

A remote access trojan (RAT) dubbed BingoMod was first spotted back in May by the researchers at Cleafy (via BleepingComputer). The software is largely spread via SMS-based phishing, where it masquerades as a security tool — one of the icons the app dresses itself up with is that from AVG antivirus. Once on your phone, it requests access to Android Accessibility Services, which it uses to get its hooks in for remotely controlling your device.

Once established, the malware’s goal is setting up money transfers. It steals login data with a keylogger, and confirmation codes by intercepting SMS. And then when it has the credentials and access it needs, the threat actor controlling the malware can start transferring all your savings away. With language support for English, Romanian, and Italian, the app seems targeted at European users, and circumstantial evidence suggests Romanian devs may be behind it.

All this sounds bad, but not that different from plenty of malware, right? Well, BingoMod, it seems, is a little paranoid about being found out. Besides the numerous tricks it uses to evade automatic detection, it’s got a doomsday weapon it’s ready to deploy after achieving its goals and wiping your accounts clean: it wipes your phone.

While BingoMod supports a built-in command for wiping data, that’s limited to external storage, which isn’t going to get it very far. Instead, Cleafy’s team suspects that the people controlling the malware remotely are manually executing these wipes when they’re done stealing from you, just like you’d do yourself before getting rid of an old phone. Presumably, that’s in the goal of destroying evidence of the hack — losing your personal data is just collateral damage.

That’s a fresh kind of awful that we would be very happy never having to deal with. The good news is that you really don’t have to. Get your apps from official sources, don’t install software from sketchy text messages, and you’ll be well on your way to not losing all your data in a malware attack.

A while back on Vine I’d picked up a tablet with a remarkable number of minor problems, one of them being that the USB port only worked with the most antiquated chargers I had. I had never investigated setting it up for ADB, as this was for my kiddos on managed accounts and hidden behind a password. Secure enough for a kid’s tablet.

The device developed a little crack from a drop, which was no big deal, it was still usable. I attempted to instill the understanding of the need for a password as I assume (erring on the side of caution) managed accounts have some of my information. Even if they don’t I want my kids to have a lock on their phones so if they lose them someone else can’t make calls to <pick country that would cost a lot to call> or be used to call in fake emergency services calls. Simple security.

The tablet’s main user decides what she’s going to use as her password. Yay! Then she figures out how to set the wallpaper on the lock screen so it shows her her password in case she forgets it. Boo!

And then someone steps on the tablet.

We were left with a tablet whose touch screen was completely unresponsive. I attempted to get into the bootloader, nope, attempted adb, nope (the computer didn’t even recognize it), and even looked up the part for the screen, which cost more than the tablet was worth.

Exhausting all methods I knew from my ROM flashing days I resigned myself and went into the Google Family Link program and chose to wipe the tablet as there was no saving this beast.

And nothing happened.

Nothing.

The tablet was connected to our Wi-Fi, powered on, I could lock and unlock it from Family Link, but the wipe and erase function straight up did nothing. We waited hours. I repeatedly wiped and erased remotely. I made the unit beep for its location and to verify that it was getting commands. But it never wiped the tablet.

I spent about two weeks attempting everything I could think of, bearing in mind my rooting days were about 3 phones behind me, and I couldn’t even see that anything was connected when I plugged the tablet into my computer. There was only power drain. Never anything.

Then again, there might not be anything to see if ADB was never turned on… but I couldn’t turn it on. The only thing I didn’t try was a USB keyboard and mouse, and for that I would have needed a USB-A to C adapter, all of which have gone off somewhere to hide for a decade.

Deciding this was a gaping glaring security risk it was time to take the mini sledgehammer to it. I whacked it in a bag hard enough to pop the screen off the back, gently removed the battery as I didn’t want to let the magic smoke out, and went about destroying the storage.

That accomplished I felt it was now safe to recycle the rest.

I may have been a bit paranoid thinking her managed account could somehow come back to bite me, but let’s put it simply that I don’t want to find yet another zero day in which a compromised child account manages to gain access to an adult’s OAUTH2 tokens or some such.

After the destruction we went over security measures once again and that making the lock screen wallpaper be your password was a bad idea.

The stepping on the tablet, it’s an accident and why they got an inexpensive device. Next one I will make sure to add some sort of remote access app for times when this happens as evidently I can’t trust Family Link to do it.

I thought about taking some pictures, but I didn’t really like the destruction. I didn’t want to do this, I just wanted to recycle the thing, but it’s somehow tied to my Google account, however minutely, and I just watched my accountant’s digital life go down in flames due to a scam app, so not really willing to risk it.

I had to physically destroy a cheap tablet this weekend after all data destruction methods including Google Family Link remote wipe failed by Paul E King first appeared on Pocketables.

Had an interesting thing happen earlier in the day and that is that every time I opened Chrome my camera notification came on.

I’ll save you the long and unnecessary SEO-improving pages of why you should be scared of the camera kicking on…

I killed all my Chrome tabs, killed Chrome, and every time I came back in the camera notification would kick back on.

Earlier in the day I had accessed a web page sent by car insurance people that had me line my car up and take pictures of some damage, and I highly suspect that was where this started as I had to answer yes to allowing Chrome to access the camera to take photos.

Why Chrome kept accessing the camera even with all the tabs closed, I don’t know. It has no need for it other than to report body damage to my car.

There seemed to be no extensions running, no weirdness, but no matter what I did the camera active icon popped on when opening Chrome. To calm my paranoia down, I went into app permissions and revoked the camera permission I had granted it earlier in the day, and now things are back to normal.

Or my phone’s been hacked because T-Mobile wouldn’t get me the critical firmware update until yesterday.

Whatever the case, the fix appears to be to manually revoke permissions.

Chrome triggering camera? How it happened to me / fix by Paul E King first appeared on Pocketables.

In the relentless pursuit of performance and miniaturization, the semiconductor industry has increasingly turned to 3D integrated circuits (3D-ICs) as a cutting-edge solution. Stacking dies in a 3D assembly offers numerous benefits, including enhanced performance, reduced power consumption, and more efficient use of space. However, this advanced technology also introduces significant thermal dissipation challenges that can impact the electrical behavior, reliability, performance, and lifespan of the chips (figure 1). For automotive applications, where safety and reliability are paramount, managing these thermal effects is of utmost importance.

Fig. 1: Illustration of a 3D-IC with heat dissipation.

3D-ICs have become particularly attractive for safety-critical devices like automotive sensors. Advanced driver-assistance systems (ADAS) and autonomous vehicles (AVs) rely on these compact, high-performance chips to process vast amounts of sensor data in real time. Effective thermal management in these devices is a top priority to ensure that they function reliably under various operating conditions.

The stacked configuration of 3D-ICs inherently leads to complex thermal dynamics. In traditional 2D designs, heat dissipation occurs across a single plane, making it relatively straightforward to manage. However, in 3D-ICs, multiple active layers generate heat, creating significant thermal gradients and hotspots. These thermal issues can adversely affect device performance and reliability, which is particularly critical in automotive applications where components must operate reliably under extreme temperatures and harsh conditions.

These thermal effects in automotive 3D-ICs can impact the electrical behavior of the circuits, causing timing errors, increased leakage currents, and potential device failure. Therefore, accurate and comprehensive thermal analysis throughout the design flow is essential to ensure the reliability and performance of automotive ICs.

Traditionally, thermal analysis has been performed at the package and system levels, often as a separate process from IC design. However, with the advent of 3D-ICs, this approach is no longer sufficient.

To address the thermal challenges of 3D-ICs for automotive applications, it is crucial to incorporate die-level thermal analysis early in the design process and continue it throughout the design flow (figure 2). Early-stage thermal analysis can help identify potential hotspots and thermal bottlenecks before they become critical issues, enabling designers to make informed decisions about chiplet placement, power distribution, and cooling strategies. These early decisions reduce the risks of thermal-induced failures, improving the reliability of 3D automotive ICs.

Fig. 2: Die-level detailed thermal analysis using accurate package and boundary conditions should be fully integrated into the ASIC design flow to allow for fast thermal exploration.

During the initial package design and floorplanning stage, designers can use high-level power estimates and simplified models to perform thermal feasibility studies. These early analyses help identify configurations that are likely to cause thermal problems, allowing designers to rule out problematic designs before investing significant time and resources in detailed implementation.

Fig. 3: Thermal analysis as part of the package design, floorplanning and implementation flows.

For example, thermal analysis can reveal issues such as overlapping heat sources in stacked dies or insufficient cooling paths. By identifying these problems early, designers can explore alternative floorplans and adjust power distribution to mitigate thermal risks. This proactive approach reduces the likelihood of encountering critical thermal issues late in the design process, thereby shortening the overall design cycle.
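The kind of early feasibility check described above can be approximated with a one-dimensional thermal resistance stack. The sketch below is an illustration only, not how any particular tool works: it assumes all heat leaves through a single heat sink held at a fixed temperature, that die 0 sits nearest the sink, and that each layer is summarized by one lumped resistance. The function name, parameters, and numbers are hypothetical.

```python
def stack_temperatures(powers_w, resistances_k_per_w, sink_temp_c):
    """Steady-state die temperatures for a 1-D stack (simplified model).

    powers_w[i]            -- power dissipated by die i, die 0 nearest the sink
    resistances_k_per_w[i] -- lumped thermal resistance between die i and the
                              node before it (the sink for die 0)
    sink_temp_c            -- assumed fixed heat-sink temperature
    """
    if len(powers_w) != len(resistances_k_per_w):
        raise ValueError("need one resistance per die")
    temps = []
    node_temp = sink_temp_c
    for i, resistance in enumerate(resistances_k_per_w):
        # Heat from die i and every die farther from the sink crosses this layer.
        heat_through_layer = sum(powers_w[i:])
        node_temp += resistance * heat_through_layer
        temps.append(node_temp)
    return temps


def dies_over_limit(temps_c, limit_c):
    """Return the indices of dies whose temperature exceeds the limit."""
    return [i for i, t in enumerate(temps_c) if t > limit_c]


# Hypothetical two-die stack: 2 W die next to the sink, 1 W die on top of it.
temps = stack_temperatures([2.0, 1.0], [5.0, 2.0], sink_temp_c=25.0)
print(temps, dies_over_limit(temps, limit_c=41.0))
```

Even a model this coarse shows why the die farthest from the sink runs hottest, which is the sort of result that can rule out a floorplan before detailed implementation. It says nothing about lateral hotspots, which need the die-level analysis the article describes.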

As the design progresses and more detailed information becomes available, thermal analysis should be performed iteratively to refine the thermal model and verify that the design remains within acceptable thermal limits. At each stage of design refinement, additional details such as power maps, layout geometries and their material properties can be incorporated into the thermal model to improve accuracy.

This iterative approach lets designers continuously monitor and address thermal issues, ensuring that the design evolves in a thermally aware manner. By integrating thermal analysis with other design verification tasks, such as timing and power analysis, designers can achieve a holistic view of the design’s performance and reliability.

A robust thermal analysis tool should support various stages of the design process, providing value from initial concept to final signoff:

To effectively manage thermal challenges in automotive ICs, designers need advanced tools and techniques that can provide accurate and fast thermal analysis throughout the design flow. Modern thermal analysis tools are equipped with capabilities to handle the complexity of 3D-IC designs, from early feasibility studies to final signoff.

Accurate thermal analysis requires high-fidelity thermal models that capture the intricate details of the 3D-IC assembly. These models should account for non-uniform material properties, fine-grained power distributions, and the thermal impact of through-silicon vias (TSVs) and other 3D features. Advanced tools can generate detailed thermal models based on the actual design geometries, providing a realistic representation of heat flow and temperature distribution.

For instance, tools like Calibre 3DThermal embed an optimized custom 3D solver from Simcenter Flotherm to perform precise thermal analysis down to the nanometer scale. By leveraging detailed layer information and accurate boundary conditions, these tools can produce reliable thermal models that reflect the true thermal behavior of the design.

Automation is a key feature of modern thermal analysis tools, enabling designers to perform complex analyses without requiring deep expertise in thermal engineering. An effective thermal analysis tool must offer advanced automation to facilitate use by non-experts. Key automation features include:

Fig. 4: Ways to view thermal analysis results.

Automated thermal analysis tools can also integrate seamlessly with other design verification and implementation tools, providing a unified environment for managing thermal, electrical, and mechanical constraints. This integration ensures that thermal considerations are consistently addressed throughout the design flow, from initial feasibility analysis to final tape-out and even connecting with package-level analysis tools.

The practical benefits of integrated thermal analysis solutions are evident in real-world applications. For instance, a leading research organization, CEA, utilized an advanced thermal analysis tool from Siemens EDA to study the thermal performance of their 3DNoC demonstrator. The high-fidelity thermal model they developed showed a worst-case difference of just 3.75% and an average difference within 2% between simulation and measured data, demonstrating the accuracy and reliability of the tool (figure 5).

Fig. 5: Correlation of simulation versus measured results.

As the automotive industry continues to embrace advanced technologies, the importance of accurate thermal analysis throughout the design flow of 3D-ICs cannot be overstated. By incorporating thermal analysis early in the design process and iteratively refining thermal models, designers can mitigate thermal risks, reduce design time, and enhance chip reliability.

Advanced thermal analysis tools that integrate seamlessly with the broader design environment are essential for achieving these goals. These tools enable designers to perform high-fidelity thermal analysis, automate complex tasks, and ensure that thermal considerations are addressed consistently from package design, through implementation to signoff.

By embracing these practices, designers can unlock the full potential of 3D-IC technology, delivering innovative, high-performance devices that meet the demands of today’s increasingly complex automotive applications.

For more information about die-level 3D-IC thermal analysis, read Conquer 3DIC thermal impacts with Calibre 3DThermal.

The post Ensure Reliability In Automotive ICs By Reducing Thermal Effects appeared first on Semiconductor Engineering.

A new technical paper titled “Harnessing Heterogeneity for Targeted Attacks on 3-D ICs” was published by Drexel University.

Abstract

“As 3-D integrated circuits (ICs) increasingly pervade the microelectronics industry, the integration of heterogeneous components presents a unique challenge from a security perspective. To this end, an attack on a victim die of a multi-tiered heterogeneous 3-D IC is proposed and evaluated. By utilizing on-chip inductive circuits and transistors with low voltage threshold (LVT), a die based on CMOS technology is proposed that includes a sensor to monitor the electromagnetic (EM) emissions from the normal function of a victim die, without requiring physical probing. The adversarial circuit is self-powered through the use of thermocouples that supply the generated current to circuits that sense EM emissions. Therefore, the integration of disparate technologies in a single 3-D circuit allows for a stealthy, wireless, and non-invasive side-channel attack. A thin-film thermo-electric generator (TEG) is developed that produces a 115 mV voltage source, which is amplified 5 × through a voltage booster to provide power to the adversarial circuit. An on-chip inductor is also developed as a component of a sensing array, which detects changes to the magnetic field induced by the computational activity of the victim die. In addition, the challenges associated with detecting and mitigating such attacks are discussed, highlighting the limitations of existing security mechanisms in addressing the multifaceted nature of vulnerabilities due to the heterogeneity of 3-D ICs.”

Find the technical paper here. Published June 2024.

Alec Aversa and Ioannis Savidis. 2024. Harnessing Heterogeneity for Targeted Attacks on 3-D ICs. In Proceedings of the Great Lakes Symposium on VLSI 2024 (GLSVLSI ’24). Association for Computing Machinery, New York, NY, USA, 246–251. https://doi.org/10.1145/3649476.3660385.

The post Heterogeneity Of 3DICs As A Security Vulnerability appeared first on Semiconductor Engineering.

Hackers delivered malware to Windows and Mac users by compromising their Internet service provider and then tampering with software updates delivered over unsecure connections, researchers said.

The attack, researchers from security firm Volexity said, worked by hacking routers or similar types of device infrastructure of an unnamed ISP. The attackers then used their control of the devices to poison domain name system responses for legitimate hostnames providing updates for at least six different apps written for Windows or macOS. The apps affected were the 5KPlayer, Quick Heal, Rainmeter, Partition Wizard, and those from Corel and Sogou.

Because the update mechanisms didn’t use TLS or cryptographic signatures to authenticate the connections or downloaded software, the threat actors were able to use their control of the ISP infrastructure to successfully perform machine-in-the-middle (MitM) attacks that directed targeted users to hostile servers rather than the ones operated by the affected software makers. These redirections worked even when users employed non-encrypted public DNS services such as Google’s 8.8.8.8 or Cloudflare’s 1.1.1.1 rather than the authoritative DNS server provided by the ISP.
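To make the missing check concrete, here is a minimal sketch of an updater refusing a payload whose SHA-256 digest does not match a pinned value. It is not taken from any of the affected apps. The function name is hypothetical, and the sketch assumes the expected digest reaches the client over a channel the attacker cannot tamper with (for example, shipped inside the already-installed app or fetched over TLS). A real updater would more likely verify a public-key signature, which Python's standard library does not provide.

```python
import hashlib
import hmac


def verify_update(payload: bytes, expected_sha256_hex: str) -> bool:
    """Return True only if the payload matches the pinned SHA-256 digest."""
    actual = hashlib.sha256(payload).hexdigest()
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual, expected_sha256_hex.lower())


# Hypothetical usage: the digest is pinned ahead of time, not downloaded
# alongside the update over the same unauthenticated connection.
genuine = b"installer bytes from the vendor"
pinned = hashlib.sha256(genuine).hexdigest()

print(verify_update(genuine, pinned))                          # genuine payload
print(verify_update(b"installer swapped in transit", pinned))  # tampered payload
```

With a check like this, a poisoned DNS response could still redirect the download, but the substituted file would be rejected before installation.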

A new technical paper titled “MINT: Securely Mitigating Rowhammer with a Minimalist In-DRAM Tracker” was published by researchers at Georgia Tech, Google, and Nvidia.

Abstract

“This paper investigates secure low-cost in-DRAM trackers for mitigating Rowhammer (RH). In-DRAM solutions have the advantage that they can solve the RH problem within the DRAM chip, without relying on other parts of the system. However, in-DRAM mitigation suffers from two key challenges: First, the mitigations are synchronized with refresh, which means we cannot mitigate at arbitrary times. Second, the SRAM area available for aggressor tracking is severely limited, to only a few bytes. Existing low-cost in-DRAM trackers (such as TRR) have been broken by well-crafted access patterns, whereas prior counter-based schemes require impractical overheads of hundreds or thousands of entries per bank. The goal of our paper is to develop an ultra low-cost secure in-DRAM tracker.

Our solution is based on a simple observation: if only one row can be mitigated at refresh, then we should ideally need to track only one row. We propose a Minimalist In-DRAM Tracker (MINT), which provides secure mitigation with just a single entry. At each refresh, MINT probabilistically decides which activation in the upcoming interval will be selected for mitigation at the next refresh. MINT provides guaranteed protection against classic single and double-sided attacks. We also derive the minimum RH threshold (MinTRH) tolerated by MINT across all patterns. MINT has a MinTRH of 1482 which can be lowered to 356 with RFM. The MinTRH of MINT is lower than a prior counter-based design with 677 entries per bank, and is within 2x of the MinTRH of an idealized design that stores one-counter-per-row. We also analyze the impact of refresh postponement on the MinTRH of low-cost in-DRAM trackers, and propose an efficient solution to make such trackers compatible with refresh postponement.”
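The single-entry idea in the abstract can be sketched in a few lines. This is a simplified illustration based only on the abstract, not the authors' design: the class and method names are hypothetical, the uniform choice of activation slot is an assumption, and refresh postponement and RFM are ignored.

```python
import random


class SingleEntryTracker:
    """Toy model of a one-entry in-DRAM tracker (names are hypothetical)."""

    def __init__(self, max_acts_per_interval, rng=None):
        self.max_acts = max_acts_per_interval
        self.rng = rng or random.Random()
        self.selected_row = None
        self._start_interval()

    def _start_interval(self):
        # Decide up front which activation in the coming interval to capture.
        # Uniform selection is an assumption made for this sketch.
        self.act_count = 0
        self.selected_slot = self.rng.randint(1, self.max_acts)

    def on_activate(self, row):
        """Called for every row activation between two refreshes."""
        self.act_count += 1
        if self.act_count == self.selected_slot:
            self.selected_row = row  # the single tracked entry

    def on_refresh(self):
        """Return the row to mitigate at this refresh, or None."""
        row_to_mitigate = self.selected_row
        self.selected_row = None
        self._start_interval()
        return row_to_mitigate


tracker = SingleEntryTracker(max_acts_per_interval=4)
for row in (7, 7, 7, 7):  # an attacker hammering row 7 for the whole interval
    tracker.on_activate(row)
print(tracker.on_refresh())
```

Because the slot is chosen before the interval starts and is hidden from the attacker, a row that is activated often is proportionally more likely to land in the chosen slot, which is the intuition behind tracking only one row.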

Find the technical paper here. Preprint published July 2024.

Qureshi, Moinuddin, Salman Qazi, and Aamer Jaleel. “MINT: Securely Mitigating Rowhammer with a Minimalist In-DRAM Tracker.” arXiv preprint arXiv:2407.16038 (2024).

The post Secure Low-Cost In-DRAM Trackers For Mitigating Rowhammer (Georgia Tech, Google, Nvidia) appeared first on Semiconductor Engineering.

In today’s technology-driven landscape in which reducing TCO is top of mind, robust data protection is not merely an option but a necessity. As data, both personal and business-specific, is continuously exchanged, stored, and moved across various platforms and devices, the demand for a secure means of data aggregation and trust enhancement is escalating. Traditional data protection strategies of protecting data at rest and data in motion need to be complemented with protection of data in use. This is where the role of inline memory encryption (IME) becomes critical. It acts as a shield for data in use, thus underpinning confidential computing and ensuring data remains encrypted even when in use. This blog post will guide you through a practical approach to inline memory encryption and confidential computing for enhanced data security.

Inline memory encryption offers a smart answer to data security concerns, encrypting data for storage and decoding only during computation. This is the core concept of confidential computing. As modern applications on personal devices increasingly leverage cloud systems and services, data privacy and security become critical. Confidential computing and zero trust are suggested as solutions, providing data in use protection through hardware-based trusted execution environments. This strategy reduces trust reliance within any compute environment and shrinks hackers’ attack surface.

The security model of confidential computing demands data in use protection, in addition to traditional data at rest and data in motion protection. This usually pertains to data stored in off-chip memory such as DDR memory, regardless of it being volatile or non-volatile memory. Memory encryption suggests data should be encrypted before storage into memory, in either inline or look aside form. The performance demands of modern-day memories for encryption to align with the memory path have given rise to the term inline memory encryption.

There are multiple off-chip memory technologies, and modern NVMe and DDR memory performance requirements make inline encryption the most sensible solution. The XTS algorithm using AES or SM4 ciphers and the GCM algorithm are the commonly used cryptographic algorithms for memory encryption. While the XTS algorithm encrypts data solely for confidentiality, the GCM algorithm provides data encryption and data authentication, but requires extra memory space for metadata storage.
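To give a feel for the extra metadata space that GCM needs, here is a rough sketch of the arithmetic. The 64-byte protection granule and 16-byte authentication tag are assumptions chosen for illustration, not figures from the article, and the function names are hypothetical. XTS stores no per-granule metadata, so its overhead in this model is zero.

```python
def gcm_metadata_overhead(granule_bytes=64, tag_bytes=16):
    """Tag bytes stored per data byte (assumed granule and tag sizes)."""
    return tag_bytes / granule_bytes


def protected_capacity(total_bytes, granule_bytes=64, tag_bytes=16):
    """Data bytes that fit when each granule's tag lives in the same memory."""
    granules = total_bytes // (granule_bytes + tag_bytes)
    return granules * granule_bytes


overhead = gcm_metadata_overhead()   # 0.25 under the assumed sizes
usable = protected_capacity(160)     # two 64-byte granules plus their tags
```

Under these assumed sizes, a quarter of a byte of tag storage accompanies every byte of protected data, which is the kind of cost a designer weighs against the authentication that XTS alone does not provide.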

Inline memory encryption is used in many systems. When evaluating inline cipher performance for DDR, the bandwidth required by each memory technology must be considered. For instance, LPDDR5 typically requires a data path bandwidth of 25 gigabytes per second. An AES operation involves 10 to 14 encryption rounds, depending on key size, implying that up to 14 rounds must operate at the memory’s required bandwidth. This is achievable with correct pipelining in the crypto engine. Other considerations include minimizing read path latency, support for narrow bursts, memory-specific features such as the number of outstanding transactions, data-hazard protection between READ and WRITE paths, and so on. Furthermore, side channel attack protections and data path integrity, a critical factor for robustness in advanced technology nodes, are additional concerns to be taken care of without prohibitive PPA overhead.
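The bandwidth requirement can be made concrete with a back-of-the-envelope sketch. It assumes 25 gigabytes per second means 25 × 10^9 bytes per second, a fully pipelined engine with one AES round per pipeline stage that accepts one 16-byte block per clock cycle, and a hypothetical 1 GHz engine clock. None of these implementation details come from the article.

```python
import math

AES_BLOCK_BYTES = 16  # AES always operates on 128-bit blocks


def blocks_per_second(bandwidth_bytes_per_s):
    """AES blocks that must be processed each second to keep up with memory."""
    return bandwidth_bytes_per_s / AES_BLOCK_BYTES


def required_lanes(bandwidth_bytes_per_s, clock_hz):
    """Parallel pipelined AES cores needed, each taking one block per cycle."""
    return math.ceil(blocks_per_second(bandwidth_bytes_per_s) / clock_hz)


def pipeline_latency_ns(rounds, clock_hz):
    """Time for one block to traverse the pipeline, one round per stage."""
    return rounds * 1e9 / clock_hz


bandwidth = 25_000_000_000            # assumed: 25 GB/s in decimal bytes
clock = 1_000_000_000                 # assumed: 1 GHz engine clock
rate = blocks_per_second(bandwidth)   # 1.5625e9 blocks per second
lanes = required_lanes(bandwidth, clock)
latency = pipeline_latency_ns(14, clock)
```

Under these assumptions a single one-block-per-cycle pipeline at 1 GHz falls short, so two parallel lanes would be needed, while the 14 rounds add latency to each access rather than reducing throughput. That distinction is why pipelining satisfies the bandwidth target while read path latency remains a separate concern.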

The importance of data security is not limited to traditional computing areas but also extends to AI inference and training, which heavily rely on user data. Given the privacy issues and regulatory demands tied to user data, it’s essential to guarantee the encryption of this data, preventing unauthorized access. This necessitates the application of a trusted execution environment and data encryption whenever it’s transported outside this environment. With the advent of new algorithms that call for data sharing and model refinement among multiple parties, it’s crucial to maintain data privacy by implementing appropriate encryption algorithms.

Equally important is the rapidly evolving field of computational storage. The advent of new applications and increasing demands are pushing the boundaries of conventional storage architecture. Solutions such as flexible and composable storage, software-defined storage, and memory duplication and compression algorithms are being introduced to tackle these challenges. Yet, these solutions introduce security vulnerabilities as storage devices operate on raw disk data. To counter this, storage accelerators must be equipped with encryption and decryption capabilities and should manage operations at the storage nodes.

As our computing landscape continues to evolve, we need to address the escalating demand for robust data protection. Inline memory encryption emerges as a key solution, offering data in use protection for confidential computing, securing both personal and business data.

Rambus Inline Memory Encryption IP provides scalable, versatile, and high-performance inline memory encryption solutions that cater to a wide range of application requirements. The ICE-IP-338 is a FIPS certified inline cipher engine supporting AES and SM4, as well as XTS and GCM modes of operation. Building on the ICE-IP-338 IP, the ICE-IP-339 provides essential AXI4 operation support, simplifying system integration for XTS operation and delivering confidentiality protection. The IME-IP-340 IP extends basic AXI4 support to narrow data access granularity, as well as AES GCM operations, delivering confidentiality and authentication. Finally, the most recent offering, the Rambus IME-IP-341, guarantees memory encryption with AES-XTS while supporting the Arm v9 architecture specifications.

For more information, check out my recent IME webinar now available to watch on-demand.

The post A Practical Approach To Inline Memory Encryption And Confidential Computing For Enhanced Data Security appeared first on Semiconductor Engineering.

In the automotive industry, AI-enabled automotive devices and systems are dramatically transforming the way SoCs are designed, making high-quality and reliable die-to-die and chip-to-chip connectivity non-negotiable. This article explains how interface IP for die-to-die connectivity, display, and storage can support new developments in automotive SoCs for the most advanced innovations such as centralized zonal architecture and integrated ADAS and IVI applications.

The automotive industry is adopting a new electronic/electric (EE) architecture where a centralized compute module executes multiple applications such as ADAS and in-vehicle infotainment (IVI). With the advent of EVs and more advanced features in the car, the new centralized zonal architecture will help minimize complexity, maximize scalability, and facilitate faster decision-making time. This new architecture is demanding a new set of SoCs on advanced process technologies with very high performance. More traditional monolithic SoCs for single functions like ADAS are giving way to multi-die designs where various dies are connected in a single package and placed in a system to perform a function in the car. While such multi-die designs are gaining adoption, semiconductor companies must remain cost-conscious as these ADAS SoCs will be manufactured at high volumes for a myriad of safety levels. One example is the automated driving central compute system. The system can include modules for the sensor interface, safety management, memory control and interfaces, and dies for CPU, GPU, and AI accelerator, which are then connected via a die-to-die interface such as the Universal Chiplet Interconnect Express (UCIe). Figure 1 illustrates how semiconductor companies can develop SoCs for such systems using multi-die designs. For a base ADAS or IVI SoC, the requirement might just be the CPU die for a level 2 functional safety. A GPU die can be added to the base CPU die for a base ADAS or premium IVI function at a level 2+ driving automation. To allow more compute power for AI workloads, an NPU die can be added to the base CPU or the base CPU and GPU dies for level 3/3+ functional safety. None of these scalable scenarios are possible without a solution for die-to-die connectivity.

Fig. 1: A simplified view of automotive systems using multi-die designs.

The industry has come together to define, develop, and deploy the UCIe standard, a universal interconnect at the package level. In a recent news release, the UCIe Consortium announced “updates to the standard with additional enhancements for automotive usages – such as predictive failure analysis and health monitoring – and enabling lower-cost packaging implementations.” Figure 2 shows three use cases for UCIe. The first use case is for low latency and coherency, where two Networks on a Chip (NoCs) are connected via UCIe. This use case is mainly for applications requiring ADAS computing power. The second automotive use case is when memory and IO are split into two separate dies and are then connected to the compute die via CXL and UCIe streaming protocols. The third automotive use case is very similar to what is seen in HPC applications, where a companion AI accelerator die is connected to the main CPU die via UCIe.

Fig. 2: Examples of common and new use cases for UCIe in automotive applications.

To enable such automotive use cases, UCIe offers several advantages, all of which are supported by the Synopsys UCIe IP:

Synopsys UCIe IP is integrated with Synopsys 3DIC Compiler, a unified exploration-to-signoff platform. The combination eases package design and provides a complete set of IP deliverables, automated UCIe routing for better quality of results, and reference interposer design for faster integration.

Fig. 3: Synopsys 3DIC Compiler.

OEMs are attracting consumers by providing the utmost in cockpit experience with high-resolution, 4K, pillar-to-pillar displays. Multi-Stream Transport (MST) enables a daisy-chained display topology using a single port, which consists of a single GPU, one DP TX controller, and PHY, to display images on multiple screens in the car. This daisy-chained setup simplifies the display wiring in the car. Figure 4 illustrates how connectivity in the SoC can enable multi-display environments in the car. Row 1: Multiple image sources from the application processor are fed into the daisy-chained display setup via the DisplayPort (DP) MST interface. Row 2: Multiple image sources from the application processor are fed to the daisy-chained display setup but also to the left or right mirrors, all via the DP MST interface. Row 3: The same setup as in row 2 can be executed via the MIPI DSI or embedded DP MST interfaces, depending on display size and power requirements.

An alternate use case is USB/DP. A single USB port can be used for silicon lifecycle management, sentry mode, test, debug, and firmware download. USB can be used to avoid the need for very large numbers of test pins, speed up test by exceeding GPIO test pin data rates, repeat manufacturing test in-system and in-field, access PVT monitors, and debug.

Fig. 4: Examples of display connectivity in software-defined vehicles.

ISO/SAE 21434 Automotive Cybersecurity is being adopted by industry leaders as mandated by the UNECE R155 regulation. Starting in July 2024, automotive OEMs must comply with the UNECE R155 automotive cybersecurity regulation for all new vehicles in Europe, Japan, and Korea.

Automotive suppliers must develop processes that meet the automotive cybersecurity requirements of ISO/SAE 21434, addressing the cybersecurity perspective in the engineering of electrical and electronic (E/E) systems. Adopting this methodology involves embracing a cybersecurity culture which includes developing security plans, setting security goals, conducting engineering reviews and implementing mitigation strategies.

The industry is expected to move towards enabling cybersecurity risk-managed products to mitigate the risks associated with advancement in connectivity for software-defined vehicles. As a result, automotive IP needs to be ready to support these advancements.

Synopsys ARC HS4xFS Processor IP has achieved ISO/SAE 21434 cybersecurity certification by SGS-TÜV Saar, meeting stringent automotive regulatory requirements designed to protect connected vehicles from malicious cyberattacks. In addition, Synopsys has achieved certification of its IP development process to the ISO/SAE 21434 standard to help ensure its IP products are developed with a security-first mindset through every phase of the product development lifecycle.

The transformation to software-defined vehicles marks a significant shift in the automotive industry, bringing together highly integrated systems and AI to create safer and more efficient vehicles while addressing sophisticated user needs and vendor serviceability. New trends in the automotive industry are presenting opportunities for innovations in ADAS and IVI SoC designs. Centralized zonal architecture, multi-die design, daisy-chained displays, and integration of ADAS/IVI functions in a single SoC are among the key trends that the automotive industry is tracking. Synopsys is at the forefront of automotive SoC innovations with a portfolio of silicon-proven automotive IP for the highest levels of functional safety, security, quality, and reliability. The IP portfolio is developed and assessed specifically for ISO 26262 random hardware faults and ASIL D systematic. To minimize cybersecurity risks, Synopsys is developing IP products as per the ISO/SAE 21434 standard to provide automotive SoC developers a safe, reliable, and future-proof solution.

The post Keeping Up With New ADAS And IVI SoC Trends appeared first on Semiconductor Engineering.

Less than a month after the attempted assassination of former President Donald Trump, the agency that failed to protect him from harm may get a bigger budget.

On July 13, when Thomas Matthew Crooks shot and wounded Trump during a campaign rally in Pennsylvania, Secret Service agents sprang into action, heroically shielding him from further harm and escorting him from the stage.

But subsequent reporting revealed that the incident was entirely preventable: Rally attendees alerted law enforcement to the presence of a suspicious person more than an hour before he started shooting, and they later saw him climbing on top of a building with a gun. "Trump was on stage for around 10 minutes between the moment Crooks was spotted on the roof with a gun and the moment he fired his first shot," the BBC reported.

After a particularly disastrous appearance before the House Oversight Committee, Secret Service Director Kimberly Cheatle resigned. Appearing before two Senate committees this week, acting Director Ronald Rowe Jr. testified that he was "ashamed" of the agency's failure.

Almost immediately after the shooting, a narrative emerged that the lapse owed to a lack of resources.

At a July 15 White House press briefing, a reporter asked Secretary Alejandro Mayorkas of the Department of Homeland Security—which oversees the Secret Service—"Is the Secret Service stretched too thin?"