Attack of the Fanboy

A mug meant for lattes has become a viral test of gratitude, honesty, and red flags

A TikTok video has ignited a debate after a woman’s visible disappointment over a Christmas gift from her fiancé turned a simple latte mug into a viral discussion about gratitude and relationship expectations. As highlighted by the Daily Dot, the clip quickly gained traction, drawing millions of views and intense scrutiny online.

The video centers on Cloe, who posts under the username @223in2023, and her reaction to opening a Christmas gift she had specifically requested. She had asked for a mug designed for lattes, but her response suggested the item she received did not match her expectations. The reaction online echoed the kind of outsized social media pile-on seen when a restaurant owner erupted over a mild three-star review.

As the clip spread, viewers debated whether Cloe’s reaction was unnecessarily rude or an example of honest communication between partners. As with other viral shopping mishaps, such as the couple who ordered expensive sofas from Temu and instantly regretted it, the focus quickly shifted away from the object itself and onto the people involved.

The reaction mattered more than the mug itself

Cloe explained in the video that she had asked her fiancé for a very specific type of latte mug, describing it as a bowl-shaped mug with a wide, rounded form. She even demonstrated the shape with her hands to help clarify what she wanted. Despite the explanation, the gift did not align with her vision.

@223in2023 I fear I’m the cuff link guy, but side note if anyone knows what I’m talking about and has links, send them my way. Also I promise he’s not upset about this, he knew it was a shot in the dark because I was being so confusing, also I’m very grateful that he really tried to see my vision

♬ original sound – Cloe

When she filmed herself opening the present, her reaction immediately became the focal point. After she asked her fiancé if he was nervous, her expression dropped upon seeing the mug. She remarked that it was not what she wanted and summed up the moment with a dismissive “whomp, whomp,” before trying to soften her response by saying she liked it. Her fiancé responded by pointing out that she clearly did not.

@nthegate The bad husband posts where the woman doesn’t want to hear it is a cultural thing. She really did not expect to be told it was bad behavior and that she should leave. For most of these women that’s probably the first time they are ever hearing something like that.

♬ original sound – Jane Fox

Following the backlash, Cloe addressed the situation in her caption and later comments. She acknowledged that her instructions may have been confusing and compared herself to the “cuff link guy,” a reference to receiving an unwanted but well-intended gift. She emphasized that her fiancé was not upset and that she appreciated his effort, noting that he knew the request was a “shot in the dark.”

Despite her clarification, the discussion continued. Many commenters criticized her reaction as disrespectful, with some framing it as a warning sign for the relationship. Others defended her honesty, arguing that being open about disappointment over a small gift is healthier than pretending.

-

‘I know exactly what you’re doing’: Waiter refuses customer’s to-go cup request after realizing what he planned to do with it

A waiter was serving a couple during happy hour at a Tennessee bar. She offered to refill the man’s discounted Bud Light before the deal ended. But she quickly figured out he was trying to break a rule that could cost her job.

TikTok user Bennett (@bennett_dev) shared the story in a video that got over 14,300 views. She explained that the couple came in for happy hour, which runs from 3 to 6 p.m. The man ordered a tall Bud Light.

It was 15 minutes before 6 p.m. when Bennett asked if he wanted another beer at the happy hour price. He said yes, so she brought him a fresh one, according to BroBible. After they finished their meal, the man asked for a to-go box and a drink cup, revealing that he planned to take the discounted beer with him. The bar explicitly doesn’t allow this, and going along with it could have gotten Bennett in trouble with management.

The customer tried pulling off a scheme that could cost the bar its liquor license

Bennett looked at the table and saw his full beer sitting there untouched. She only saw the woman’s empty water glass, the new tall beer, and a bit left from his first one. “All I could see was her empty glass of water, your tall beer, and the little bit left of [the first one],” she says. “I know exactly what you’re doing, sir.”

Bars and restaurants usually have an on-premise alcohol license. This means they can only sell drinks that customers finish inside the building. To let customers take drinks home, they need a different license called an off-premise license.

If Bennett let the customer take alcohol in a to-go cup, the bar could lose its liquor license completely. Customers can face legal trouble too, since many states ban open alcohol containers in public or in cars. Bennett’s work requires staying alert to these situations, much like people who need to stay aware despite noisy distractions.

Bennett gave the man his to-go box but no cup. When he asked again, she questioned what he needed it for. He said “uh, uh, a water” and winked at her. She told him absolutely not, explaining she was on camera and would lose her job. The text on her video read: “Your $5 beer isn’t worth losing my job, sir.” She also stated in another video, “I’m not breaking the law just for a good tip.”

One person shared their similar experience in the comment section. “I had a old man try to do the same with his wine, when he asked for a to go cup I said no you can’t take that wine with you,” they commented.

“He said it’s for his wife’s iced tea which was empty. I gave him a go to cup full of ice tea and watched him DUMP IT AND POUR THE WINE IN THERE. I literally ripped the cup out of his hands and said “ it’s for her iced tea huh?” And I never felt more satisfied,” they continued.

Other servers commented on how they would have handled it. One suggested getting a fresh water cup and saying they got him a new one. Bennett replied she didn’t trust him not to pour the beer into it when she wasn’t watching, as she had other tables to serve. Her commitment to following workplace rules shows the kind of dedication that TikTok users are setting as goals for the new year.

-

A Michelin-trained chef just exposed the ‘number one’ dish you eat that gives you food-borne illness, and the reason is disgusting

If you’re worried about getting sick from restaurant food, you probably shouldn’t be focusing on that seafood special; a veteran chef just warned that the number one dish giving people food-borne illnesses is actually the humble salad. As per BroBible, Chef Solomon Ince of Tableaux Eats recently offered a glimpse into back-of-house reality on TikTok, explaining that unless he’s eating somewhere truly exceptional, he refuses to order greens because of how often cooks skip the crucial washing step.

In a follow-up video, Ince explained that if the lettuce isn’t washed and prepared correctly, you’re eating a bunch of bacteria like E. coli. “Salad is the number one thing you’re going to get a food-borne illness from,” he stated. Ince noted that even at some nice places he’s worked, people “throw a fit over washing some damn Romaine.”

Ince is a veteran of some of America’s best kitchens, having spent time at chef Daniel Boulud’s two-Michelin-starred flagship establishment, Restaurant Daniel, where a meal runs nearly $200 per person. While the salad risk is shocking, Ince also backed up a classic piece of restaurant advice that many diners have heard before: Stay away from the fish specials.

That’s some great insight before your next restaurant visit

He explained that a special is often just a way to get rid of stock. If a chef has too much of something they need to move quickly, they’ll rapidly invent a dish to sell it before it goes bad. “A special is something you’re trying to get rid of,” he confirmed. “If you don’t know that, it’s the truth.”

Interestingly, many viewers immediately referenced the late, great Anthony Bourdain, whose tell-all book, Kitchen Confidential, offered similar warnings about seafood specials years ago. Ince is definitely a fan, saying of Bourdain’s work, “Anyone who enjoys the grittiness of the industry will love this book. It was the first book I read that made me want to become a chef.”

@chefsolomonince Replying to @regular|exorcise #michelin #industry #boh #chef #linecook

♬ original sound – Solomon Ince

Now, not everyone agrees with the “specials are bad” rule, especially when talking about high-end dining. One viewer who worked in expensive restaurants pushed back, saying their specials were always fresh and they always ordered them. Another commenter agreed, noting their high-end restaurant used to order fresh fish every Friday specifically for weekend specials. That same commenter did add one important calendar-based caveat, though: “Now on a Monday or Tuesday I may agree.”

So, how can you spot a special that’s actually suspicious? One commenter noted that if the special is a mixed dish, like a seafood stew or a medley of some sort, it’s usually built from leftover seafood they couldn’t sell but desperately need to move, such as old fish, clams, or shrimp.

Ince himself offered a great rule of thumb for judging the overall quality of the establishment. He said that specials aren’t all going to be bad, but you absolutely have to know what type of restaurant you’re at. If the menu has forty or more items and serves both Italian and Latin American cuisine, you should probably steer clear of the special board.

Conversely, if you visit a well-thought-out restaurant with only about eight items on the menu, and it’s obvious that a lot of care went into the customer experience, he suggests you’re probably in for a treat with any special menu item. That attention to detail usually translates to fresh ingredients.

-

These ‘cult’ earplugs promise sleep next to a snoring partner, but here’s what actually happens

As reported by UNILAD, Loop Dream earplugs are quickly gaining attention among light sleepers looking for relief from a snoring partner. Marketed as a dedicated bedtime solution, the earplugs have developed a near “cult” following online, similar to how everyday tech tools have quietly stepped in to fix problems people didn’t realize they had, such as when Gmail introduced built-in help for poorly written work emails.

Rather than resorting to sleeping in separate rooms or enduring sleepless nights, many people are turning to Loop’s range of reusable earplugs. While the brand already offers options for concerts and daily noise reduction, the Loop Dream model is specifically designed for overnight use, tapping into the same growing demand for tech-driven solutions now extending even into children’s toys with AI features.

Loop claims that 91% of users reported improved sleep quality when using the Dream model. The earplugs provide up to 27 dB (SNR) of noise reduction, which is intended to soften disruptive background noise without fully blocking out important sounds.
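For readers unfamiliar with decibels, a quick conversion helps put the 27 dB figure in context. This is a rough illustration, not a claim from Loop: it assumes the standard definition that an attenuation of d dB cuts sound intensity by a factor of 10^(d/10), plus a common rule of thumb (an assumption here) that every 10 dB of reduction sounds about half as loud. An SNR is a single-number rating measured under test conditions, so real-world reduction depends on fit.

```python
def intensity_reduction_factor(db: float) -> float:
    """Factor by which sound intensity drops for an attenuation of `db` decibels."""
    return 10 ** (db / 10)


def perceived_loudness_factor(db: float) -> float:
    """Rule-of-thumb estimate (assumption): each 10 dB of reduction sounds roughly half as loud."""
    return 2 ** (db / 10)


snr_db = 27  # Loop's stated rating for the Dream model
print(f"Sound intensity cut by a factor of about {intensity_reduction_factor(snr_db):.0f}")
print(f"Perceived loudness roughly {perceived_loudness_factor(snr_db):.1f}x lower")
```

Raw sound energy drops by a factor of about 500, while perceived loudness falls far less dramatically, which is consistent with the earplugs softening noise rather than silencing it.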

Comfort appears to be the deciding factor for sleepers

One of the most common issues with sleep earplugs is discomfort, particularly for side sleepers. Traditional foam plugs can cause pressure and irritation over time, but Loop addresses this by combining memory foam with soft silicone and shaping the earplugs specifically for lying down.

The Loop Dream uses oval Foam-Silicone Tips designed to follow the ear’s natural shape. The package also includes Loop Dream Double Tips, offering additional sizing options to help users achieve a secure and comfortable fit throughout the night.

@loopearplugs A little Tuesday ASMR. #loopearplugs #asmr

♬ original sound – LoopEarplugs

Customer feedback frequently highlights their effectiveness against snoring. Several users report that the earplugs significantly reduce disruptive noise while remaining comfortable for all-night wear. Reviews also note that finding the correct size is key, with some users discovering that larger tips provide the best seal.

Another commonly mentioned benefit is that the earplugs do not block out all sound. Users report being able to hear alarms or voices while still reducing constant background noise, which many consider an important safety feature.

Travelers have also responded positively, with some using the Loop Dream earplugs on flights and noting that they stay securely in place. Loop includes multiple tip sizes with each set, and once the correct fit is selected, users are advised to insert the earplugs and hold them in place for several seconds to ensure a proper seal.

The earplugs are available in several colors, including black and pastel options like blue, lilac, and peach, allowing users to choose a style that suits their preference.

-

He agreed to switch plane seats for a stranger, then instantly realized his mistake

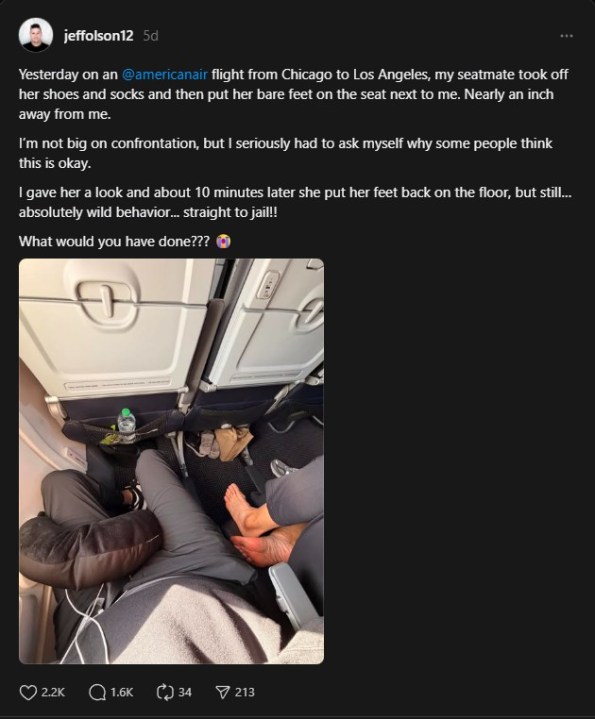

An American Airlines passenger learned the hard way that agreeing to swap seats can sometimes lead to an unpleasant surprise. As Daily Dot highlighted, Jeff Olson said his decision to move seats before takeoff resulted in him sitting next to a fellow traveler whose behavior quickly made the flight uncomfortable.

Olson was flying from Chicago to Los Angeles when another passenger asked to switch seats. He assumed the request was to help a family sit together, a situation many travelers are familiar with. Instead, his new seat placed him next to a woman who treated the cabin as if it were her personal living room.

The situation escalated shortly after takeoff, when Olson realized the seat change had put him inches away from behavior he never expected to deal with mid-flight. He took to Threads to document what happened during the flight.

Sometimes being polite on a flight backfires

Olson shared a photo showing a pair of bare feet positioned next to his legs after the woman beside him removed her shoes and socks. According to Olson, she propped her feet up on the armrest, placing them barely an inch away from his thigh.

The angle of the photo made it appear as though her foot could have been touching him, though Olson later clarified he pulled his leg inward just enough to avoid contact. Even so, the proximity was enough to make the situation uncomfortable.

Olson said he is not confrontational by nature, but the behavior was so off-putting that he gave the woman a look. After about ten minutes, she put her feet back on the floor. While the issue did not last the entire flight, Olson said the experience left a lasting impression.

The post quickly sparked debate online, with many commenters asking what they should have done in his place. Several suggested confronting the woman directly or calling over a flight attendant, echoing reactions seen in other recent airline disputes where passengers felt mistreated despite doing everything right, such as when a $5,000 United ticket still wasn’t enough to guarantee smooth boarding.

Olson later explained why he chose not to escalate the situation. The flight was full, and he still had to sit next to the woman for more than four hours. He said calling a flight attendant would likely have caused an awkward scene or delayed the flight, especially since the issue resolved itself within minutes.

He also responded to users who defended the barefoot passenger, joking that they should go “straight to jail”. Others shared similar experiences in the comments, posting photos of bare feet crossing seat barriers in economy, emergency exit rows, and even first-class cabins. Those stories joined a growing collection of unsettling travel moments, including recent reactions from passengers after something disturbing appeared on an airport baggage carousel.

Ditch the bingo card, TikTok has a cuter goal hack for 2026

If you’re still using oversized goal bingo cards, TikTok says you’re behind the trend. As highlighted by Daily Dot, creators are declaring 2026 the year of the punch card, a smaller, more tactile way to track New Year’s resolutions. The format is being embraced as a simpler and more engaging alternative to last year’s sprawling goal charts.

The concept borrows from old-school loyalty punch cards used at coffee shops and sandwich counters. Instead of earning a free drink, users punch a hole each time they complete a personal milestone. The physical interaction turns abstract goals into something visible and satisfying.

TikTok creators say the appeal comes from how achievable the system feels. Punch cards make progress easy to see, helping resolutions feel less overwhelming and more like a game with clear rewards, similar to how the platform often turns everyday moments into viral talking points.

Turning progress into something you can actually see

Getting started requires little more than index cards, markers, and a hole punch. Users create individual cards for goals like paying down debt, going to the gym consistently, or spending more time outside. Each punch represents progress, creating a simple visual record that updates with every completed task. It’s the kind of everyday idea that tends to go viral on the platform, alongside moments like the Chicago airport restaurant dispute that exploded on TikTok.

@meerdanyou So cutee! Can’t wait to use them :) #fyp#cute#diy#2026#newyears

♬ Happiness – Piero Piccioni

Creators emphasize that rewards are a major part of the appeal. Many write incentives directly onto their cards, promising themselves a favorite coffee or small treat after reaching a set number of punches. This kind of built-in motivation mirrors the way TikTok users rally around shared experiences, including viral reactions like mocking wealthy tourists stranded in St. Barths during a crisis.

Customization is another reason the trend has taken off. Users decide how many punches a goal requires, whether each punch represents one activity, a full month of consistency, or a dollar amount toward a financial target. The structure adapts easily to different types of goals.

@christine.schauer Such a fun way to keep track of some of your goals throughout the year!! #punchcards #2026punchcards #newyearsresolution #newyear

♬ original sound – MZ.OLDIEZ

One creator, Christine, shared how she uses separate cards for monthly gym visits, car loan payments, and trying new recipes. Others have added drawings, borders, or themed designs to make the cards more visually engaging, though simple designs work just as well.

Making the cards is intentionally quick and uncomplicated. After choosing attainable goals, users label their cards, draw punch spots, and add rewards if desired. The cards can be tied together with ribbon, twine, or a key ring for easy storage.

Because they’re compact, punch cards are easy to carry. Users can mark progress immediately after finishing a workout or making a payment instead of waiting to log it later.

-

TikTok user calls out Michaels for ‘scamming’ customers on Black Friday, and what the craft retailer did with pricing only fuels the claims

A TikTok video calling out craft retailer Michaels for what the user believes is a major Black Friday pricing scam has gone completely viral, racking up over 6.1 million views. Crafter AJ, known as @isitgay on the platform, claimed Michaels was doubling the original price of items promoted for the Black Friday sale before applying the supposed 50% discount.

This practice effectively meant customers were buying items “on sale” for their original full price, or sometimes even more. AJ admitted, “We all hear about items being marked up on Black Friday.” However, she pointed out that the item she was trying to purchase would actually cost more with the 50% off than it would have the week before at the regular price. This kind of deceptive pricing is absolutely awful for consumers hoping to score real deals.

To back up her claims, AJ held up a small wooden house she wanted to purchase. The price tag on the item read $12.99. AJ said she knew the regular price of that specific wooden house was typically $6.49. “I know the price,” she stated. “It is $6.49… Now these are 50% off today. So I thought I was gonna come in here and get it for $3.20. No, $12.99.” She noted that the item cost more that day than it usually does.

We need laws about deceptive pricing, and they need to be enforced as well

This means the 50% off discount on the $12.99 price still resulted in a $6.49 price tag, which was the item’s standard cost anyway. As one TikTok user, @tootalon, cleverly wrote, “So the 50% off is actually 100% on.” AJ also noted that she saw regular price tags removed from promotional items. Even worse, she showed that the in-store price check terminals had been strategically removed, making it really difficult for shoppers to verify the original cost.
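The arithmetic behind the “50% off is actually 100% on” joke is easy to check. This minimal sketch works in whole cents to avoid floating-point rounding, and it assumes fractional cents are rounded down, a hypothetical convention since the store’s actual rounding rule isn’t stated. The function name `discounted_cents` is illustrative.

```python
def discounted_cents(price_cents: int, percent_off: int) -> int:
    """Apply a percentage discount to a price in cents.

    Assumption: fractional cents are rounded down (floor division).
    """
    return price_cents * (100 - percent_off) // 100


tag_price = 1299        # $12.99 shown on the tag
remembered_price = 649  # $6.49, the price AJ said the house usually cost

sale_price = discounted_cents(tag_price, 50)
print(f"50% off the tag: ${sale_price / 100:.2f}")
print(f"Saving vs. tag: ${(tag_price - sale_price) / 100:.2f}")
print(f"Saving vs. remembered price: ${(remembered_price - sale_price) / 100:.2f}")
```

Measured against the tag, the deal looks like $6.50 off; measured against the price AJ remembered, it saves nothing. Which baseline counts as the “regular” price is exactly the point Michaels went on to dispute.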

Michaels, however, has strongly pushed back against the accusation. A representative for the store issued a statement to the Daily Dot addressing the claims. The representative insisted, “At Michaels, we take pricing integrity seriously and are not removing original price tags from any of our merchandise.” The company stated that their standard practice is listing prices on the shelf and individually tagging seasonal items.

@isitgay The ability to price check being removed was so strategic #crafter #artist #blackfriday #blackfridaydeals #michaelscraftstore

♬ original sound – AJ

Regarding the specific wooden house AJ showed, Michaels offered a different perspective. They clarified that the regular price for the DIY Villages Bakery has always been $12.99. The $6.49 price AJ saw previously was actually a sale price at that time. This is a crucial detail, but prices that swing between sale and regular levels still make the process incredibly confusing for shoppers who don’t track every fluctuation.

Even after the store’s response, AJ did not remove her viral video. While she admitted in a follow-up post that the “scam might have not been what I thought,” she still felt strongly that the store was deceiving the average consumer. She wrote in onscreen text, “I DO BELIEVE they are still scamming & deceiving the average consumer.”

This unofficial “MichaelsGate” resonated deeply with shoppers who are fed up with deceptive pricing across the retail landscape. Many commenters connected Michaels’ practices to other mega retailers like Amazon and Walmart, which came under scrutiny for other dodgy shenanigans. They blamed the rise of AI-driven “dynamic pricing” for maximizing corporate profit at the expense of the consumer. Speaking of AI, this Black Friday’s new wave of AI-powered toys also drew criticism from actor Joseph Gordon-Levitt.

-

A private chef noticed a subtle two-word change on the Reese’s label, what she found means you’re not actually eating what you think you are

Private chef Lee (@o_g_deez) created a viral two-part PSA on TikTok explaining why the two-word swap from “milk chocolate” to “chocolate candy” on some Reese’s products should make consumers pause. Her videos quickly racked up more than 900,000 views, a sign that consumers are noticing a difference in their favorite peanut butter cups.

This isn’t just semantics. Under U.S. law, “milk chocolate” has an official standard of identity from the FDA. That standard requires specific amounts of chocolate liquor, milkfat, and total milk solids. “Chocolate candy,” however, does not have an equivalent federal definition. That means products like the Unwrapped Minis can use this wording and fall under much broader candy guidance rather than a strict chocolate definition.

Lee points out that the coating on the Minis now reads more like “chocolate, vanilla-flavored oil” than traditional milk chocolate. She even joked that the ingredient list, which starts with sugar and includes palm, shea, sunflower, and palm kernel oils, sounds like one for a “hair care product.”

She explains why some people find that Reese’s products taste chemical-like

Lee’s deep dive came after many viewers tagged her in videos complaining that Reese’s Peanut Butter Cups taste nasty or waxy. She noticed that while the ingredients list hasn’t drastically changed, the overall nutritional profile has shifted dramatically over time. She explains that in the early 2000s, a package of Reese’s had about 250 calories and 14 grams of fat. Now, the current label shows just 210 calories and 12 grams of fat. This calorie drop is key to her theories about flavor changes.

First, she believes they are substituting expensive cocoa butter with an additive called PGPR (polyglycerol polyricinoleate). She says this additive, which she describes as a sweetener made from castor oil, gives the chocolate the waxy mouthfeel people are complaining about. (PGPR is indeed derived from castor oil, but it is used as an emulsifier rather than a sweetener.) She argued that a higher level of this additive could be “the reason why” people are saying the product tastes like chemicals or even vomit.

@o_g_deez Keep your eyes out for “chocolate candy”

♬ original sound – The Food Hacker

The king size and twin pack also contain different amounts of calories and fat, further confirming a smaller, if not different product. #chocolate #reeses #peanutbutter #candy #reesespeanutbuttercups

Second, Lee noted that the protein content stayed flat while the fiber doubled. She thinks this suggests they might be using a cheaper peanut variety because “everything is about the bottom dollar.” Not all varieties of peanuts contain the same amount of fiber, so this is a major clue. Finally, she suggests the product now uses less milk fat and more skim milk, which makes the final product “less creamy.” She explained that this is similar to what happened with Kit Kat products.

Lee also touched on the size debate, though she doesn’t think it’s the main culprit for the taste shift. While the twin packs saw a small change in weight years ago, she found a difference in the king-size packs. The king size consists of four cups, but instead of weighing the expected 3 ounces, it comes in at 2.8 ounces. That means you’re definitely getting a different product than just two standard twin packs.
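The weight comparison Lee describes is simple arithmetic. In this sketch, the 1.5-ounce figure for a standard two-cup pack is inferred from the article’s statement that four cups should weigh 3 ounces; it is an assumption, not a number read from the label.

```python
TWIN_PACK_OZ = 1.5   # assumed weight of a standard two-cup pack (3 oz / 2)
KING_SIZE_OZ = 2.8   # four-cup king size, per Lee's reading of the label

expected_oz = 2 * TWIN_PACK_OZ          # two twin packs = four cups
shortfall_oz = expected_oz - KING_SIZE_OZ
shortfall_pct = shortfall_oz / expected_oz * 100

print(f"Expected {expected_oz:.1f} oz, label says {KING_SIZE_OZ:.1f} oz")
print(f"Shortfall: {shortfall_oz:.1f} oz (about {shortfall_pct:.1f}%)")
```

Per cup, that works out to 0.7 ounces instead of 0.75.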

This is happening right as the Trump administration is proposing to roll back parts of the food safety and labeling framework. We have seen similar cases with Breyers frozen treats exploiting an FDA loophole and Pillsbury biscuit dough containing aluminum. Experts warn that proposals to revoke long-standing “standards of identity” for dozens of foods could seriously weaken quality and transparency for consumers.

-

‘It doesn’t have a crossfire in its program’: Waymo robotaxi makes bizarre decision during LAPD standoff that leaves witnesses laughing nervously

A video went viral this week showing a self-driving Waymo car rolling right into the middle of an active police standoff in Los Angeles. The robotaxi surprised everyone watching, from people on the street to millions online.

According to Daily Dot, the video, recorded by someone nearby, shows the driverless car turning left and driving straight toward a line of armed officers. A suspect was lying face-down on the ground next to a truck during this tense moment. You can hear nervous laughter from people watching as the robotaxi drives through the scene like it wandered onto a movie set.

A police helicopter was flying overhead while officers shouted commands at the suspect. The Waymo car just kept moving through the chaos without stopping. Waymo quickly responded after the video spread on X and other platforms.

The self-driving car treated a dangerous standoff like regular traffic

A company spokesperson told the Daily Dot that the car was fully autonomous and carrying passengers in Los Angeles when this happened. The car found a street blocked by police vehicles, so its system turned into an area that wasn’t blocked, where other regular cars were also driving.

The spokesperson said safety is their top priority for riders and everyone on the streets. They confirmed the Waymo car was near the standoff for only 15 seconds before leaving the area. Waymo’s official response to the incident provided more details about how their system handled the situation.

This moment sparked a serious debate about how self-driving cars handle situations that can’t be fully programmed. During pure chaos, like an armed standoff, can the AI really make the best choice? The main problem is that while the car handled the blocked street well, it didn’t seem to recognize the high-danger situation around it.

Waymo with passengers just drove into a middle of standoff

It doesn’t have a crossfire in its program — it guessed the best option is to go under the fire

The RoboCop we deserve pic.twitter.com/X4KykjG7si

— RT (@RT_com) December 1, 2025

Social media users had mixed reactions to the video. Some made jokes about the robotaxi’s bold confidence, while others questioned how the system understands danger. One user pointed out how absurd it looked, saying even the suspect on the ground was looking up at the Waymo, wondering why it drove through. Another joked that the car simply saw someone who needed a ride.

Waymo Robotaxi Carrying Passengers Drives Directly Into Active Police Standoff. This seems just a bit dangerous. pic.twitter.com/iY3lKwW1vc

— Breaking911 (@Breaking911) December 2, 2025

An X profile named “RT” shared the video with the caption “Waymo with passengers just drove into a middle of standoff. It doesn’t have a crossfire in its program – it guessed the best option is to go under the fire. The RoboCop we deserve.”

Not everyone found it funny though. Some accounts posted dramatic takes, with one claiming the car thought going through was the best option. The fact that passengers were inside the car makes this incident more serious than if it were empty.

This raises concerns about how surveillance systems monitor public spaces and how technology interprets dangerous situations. One user wondered if the car was thinking to itself when it slowed down in the intersection.

-

Woman bites into Buc-ee’s brisket sandwich and notices something odd, so she washes the meat and what she finds underneath has her horrified

A Texas woman recently bought a brisket sandwich from Buc-ee’s and found something that made her stop eating right away. Her discovery is now making other customers worry about the quality of food at the popular travel center, especially since prices keep going up.

According to Bro Bible, the customer, known as @rrositaafresita on TikTok, posted a video that got over 54,400 views. She showed what happened after she bit into her sandwich and noticed the meat looked strange. She decided to rinse off all the BBQ sauce to get a better look, and what she found was shocking.

After washing away the sauce, the brisket looked terrible. The meat came in thick, uneven pieces with lots of fat and looked disturbingly pink in the middle. She wrote in the video, “Decided to rinse the BBQ off and this is what I saw.”

Good brisket should never look like this

Anyone who knows barbecue can tell this isn’t how properly cooked brisket should look. Real brisket needs to be cooked slowly for a long time – usually about an hour to an hour and a half for each pound. This long cooking process breaks down the tough parts of the meat and gives it a dark, even color with a soft texture. The meat in the video appeared to be missing this important step. Buc-ee’s might be using a different cut of beef, cooking it too fast, or using some quick method instead of traditional smoking.

This problem feels even worse when you think about how expensive fast food has become. Getting takeout used to be cheap and easy, but now it’s almost a luxury. Prices went up because of higher labor costs, pandemic disruptions, and rising supply chain costs. Fast-food prices at major chains jumped between 39% and 100% from 2014 to 2024. That’s much higher than the overall inflation rate of 31% during the same time. When people pay these high prices, they expect good quality food.

@rrositaafresita this is absolutely disgusting @buccees #fyp #buccees #texas #viral #food

♬ WTHELLY – Rob49

Many people commented on the TikTok video saying they weren’t surprised because they’ve had similar bad experiences with Buc-ee’s hot food. “The best thing at Buccees is free , the restrooms , the rest is tourist trap garbage,” one of them wrote. Another user offered an explanation for why the food quality at Buc-ee’s has been so poor.

“Nothing is cooked fresh at Bucees except the fudge and rolled nuts. They also demand vendors give them the lowest price on everything then mark it up to ridiculous prices to make a huge profit off its customers,” they wrote.

Food quality issues have been popping up across social media lately, with viral food content sparking major concerns.

Even more concerning, one person shared, “I don’t like bucees brisket sandwiches. I’ve had one twice and both times they had a chemical taste.” When customers report finding undercooked-looking meat and tasting chemicals while paying higher prices, there’s clearly a serious problem that needs to be fixed. This situation mirrors other shocking viral health claims on TikTok that have left people concerned about safety.

Australian couple picks restaurant that ‘looked cool on the outside’, but what happens when they sit down with their children is shocking

Australian couple Andrew and Laura got a huge surprise when they walked into a Twin Peaks restaurant on a Friday night after moving to Texas. The family sat down with their young children because the place “looked cool on the outside.” But they quickly realized they had made a big mistake.

According to Bro Bible, the couple shared their experience in a TikTok video that got over 560,000 views. They explained they were on a family walk and wanted to find a place to eat dinner on the way home. They had driven past Twin Peaks a few times and thought it looked like a nice American restaurant with a “Colorado/lodge style vibe.” They admitted they should have looked up the restaurant before going in.

Twin Peaks isn’t just a sports bar. It’s known as a “breastaurant” because the servers wear revealing tops that show cleavage. That’s already unusual for a family dinner, but at some locations Friday nights go even further, with staff wearing much more revealing outfits.

Twin Peaks turns into something completely different on Friday nights

Some Twin Peaks locations, like one in Fort Worth, have lingerie nights every Friday. On these nights, the servers wear black lingerie instead of their normal tops while serving discounted food and drinks. That’s a huge change in atmosphere.

The couple joked in their video that they tried hard not to look at the waitresses while waiting for their food. Andrew even switched seats with his wife so he faced the wall instead of the dining room. However, Laura later explained that Andrew actually moved because “my daughter wanted him to sit next to her,” not to avoid looking at the servers.

@itsandrewandlaura When you thought you were walking into a wholesome family restaurant in Texas… (Note to self, do your research first.) hahaha. We joke about the but in all seriousness, the waitresses were so sweet and beautiful. Definitely a night to remember. #TwinPeaks #TwinPeaksRestaurant #Funny #Husband #Texas

♬ original sound – itsandrewandlaura

Despite the awkward situation, the couple said the food was delicious, and the staff was friendly. Restaurant workers being put in uncomfortable situations isn’t uncommon, as some establishments force servers into embarrassing dance performances just to deliver expensive meals.

You might think they should have known better since Australia has Hooters. But the couple explained things are totally different back home. They said Hooters on the Gold Coast is “genuinely known as a family restaurant” and the waitresses don’t dress “very raunchy at all.” They admitted they were “NOT expecting a ‘breastraunt’ when we saw the Twin Peaks sign.” Speaking of Australian surprises, a small Australian brand recently won a major legal victory against Eminem.

Some people in the comments section joked that Andrew knew all along what he would see in a Twin Peaks restaurant. “Well played Andrew,” one wrote. “And the Oscar goes to…” another joked.

A TikTok creator is going viral for ‘feeding the homeless,’ But the contents of his bucket have left millions horrified

A TikTok creator known as “Wolfy” is currently racking up millions of views and massive condemnation after filming himself distributing miniature bottles of alcohol, cigarettes, and dangerous weapons to unhoused people in several major cities, according to the New York Post. This creator, Keith Castillo, frames these controversial stunts as “feeding the homeless,” but critics are rightly pointing out that he’s exploiting extremely vulnerable people just to generate viral content.

Castillo has published dozens of videos showing him handing out these highly inappropriate items, including 18-inch machetes and small bottles of liquor. It really seems like he’s mocking genuine efforts to help people survive or escape homelessness because every single post carries the same caption: “Keeping the homeless in the streets.”

In one video, Castillo declared, “let’s feed the homeless.” He then dumped a bucket full of mini bottles of Fireball whiskey and loose cigarettes, portioning them out on a table like they were actual meals. Considering how often addiction is associated with people experiencing homelessness, the implication here is pretty grim.

It looks like Castillo wants these struggling humans to dig even deeper into their poverty holes

Castillo told reporters that he expects the people receiving the weapons to use them for “tool purposes” rather than as actual weapons. If you look at the research, unhoused people are actually far more likely to be the victims of crime than the general population is, so this entire scenario is deeply disturbing.

Despite the highly visible nature of these videos and the millions of views they attract, police departments in the cities he visits have been completely silent. Authorities have declined to comment on the issue, which is shocking when you consider the recent policy of arresting pro-Palestinian protesters on felony charges of riot incitement in some of these same areas. Castillo remains free to continue his exploits, while people who are attempting to actually feed unhoused people sometimes risk arrest.

A TikToker is catching heavy backlash after handing out 18-inch machetes and alcohol to homeless people, saying it’s “good for the views.”

— You Mad Bro? (@youmadbroDGTL) December 2, 2025

Internet is furious pic.twitter.com/ZfFS3Z9WTk

Meanwhile, Castillo’s content is reaching massive audiences. His most viral video, the one that included a machete, had over 18 million views before it was finally deleted. The 29-year-old creator is traveling around to keep the content flowing. He explained his strategy, “I travel around, bulk record in one city and then for my safety go to another city, do the same thing there for like two weeks.” He plans to visit New York next, followed by Las Vegas and L.A.

It’s hard to justify exploiting people struggling with addiction and poverty by giving them substances and weapons just so you can get a few million views. This entire situation is a perfect example of how the chase for online virality can lead to truly exploitative and dangerous behavior.

‘What am I actually eating here?’: Breyers is facing absolute chaos after consumers discovered their beloved ice cream is something else entirely

Breyers is facing major scrutiny online after a viral TikTok video exposed that some of its popular frozen treats aren’t actually labeled as “ice cream” at all, but as “frozen dairy dessert.” If you’ve noticed that your usual pint doesn’t taste quite right or seems to melt differently, you’re definitely not alone.

The recent controversy was ignited by TikTok user Trisha Fenimore, who shared a video that quickly racked up over two million views. Fenimore showed two packages of Breyers frozen dessert and asked the question many shoppers have been wondering about: “Does anybody know what’s happened to Breyers Ice Cream? That it’s no longer ice cream?”

According to Fenimore, she and her husband were trying to figure out why the frozen treat she bought “tastes so terrible.” After checking the packaging carefully, the answer became clear. She noted that the word “ice cream” was missing entirely from the container.

If it’s not ice cream, then what is it really?

Fenimore described the flavor and texture as strange. She specifically called the taste “metallic-y” and claimed that eating the dessert left “a film all around my mouth and on my tongue.” That sounds genuinely unpleasant, and honestly, if my dessert left a film on my tongue, I’d be asking the same thing she did: “What am I actually eating here? Because it sure is not ice cream.”

So, what is the deal? Is Breyers trying to pull a fast one? The truth is that Breyers offers two distinct categories of products: classic ice cream and frozen dairy dessert.

@trishafenimore @Breyers Ice Cream what HAPPENED, guys??? #food #foodtiktok #foodie #fypシ @thejamesfenimore

♬ original sound – Trisha Fenimore

It all comes down to Food and Drug Administration (FDA) rules. The FDA has specific standards, including a minimum of 10% milkfat, that a product must meet to be legally called “ice cream.” Some of Breyers’ core flavors, like Homemade Vanilla, Chocolate, and Natural Strawberry, actually meet those standards. You can find those specific items labeled correctly as “ice cream” on store shelves.

However, the company also sells “frozen dairy desserts,” and this has been going on for at least a decade. Breyers, through its parent company Unilever, says these desserts are designed to be a smoother, lower-calorie, and lower-fat option for consumers.

A spokeswoman for Unilever explained that these products are different. She told The Dispatch, “Like ice cream, frozen dairy desserts are also made with fresh milk, cream and sugar but are not light enough to be called light ice cream nor have enough fat to be called ice cream.”

Unsurprisingly, many commenters online are not happy about the state of the American freezer aisle. One user declared that “Capitalism turned our ice cream into bioengineered chemical products.” Another commenter offered a strong suggestion to consumers, advising them to “Stop buying it, force these companies to go back to Real Food or Go out of business.”

Many feel that the quality of food has noticeably worsened in the last few years. That matches our recent reports on consumers who spotted aluminum in Pillsbury biscuits and an ammonia stench in ground beef.

The reality is that if you want true, FDA-defined ice cream, you just have to check the label carefully. You can’t assume the brand name means the product is what you think it is.

Mechanic’s daughter visits in her Honda Element, but what he discovers about her brake lights changes his mind on LED bulbs forever

Mechanic Eric, who goes by @ericthecarguy on TikTok, is now taking out all aftermarket LED bulbs from his cars after a scary experience with his daughter’s vehicle. He shared his story on TikTok, explaining why he’s going back to traditional filament bulbs. Eric says the LED upgrades have caused too many reliability problems.

According to Motor1, the problem became clear when Eric’s daughter came to visit him in her Honda Element. Right away, Eric noticed something dangerous: her brake lights weren’t working at all, because the aftermarket LED bulbs had failed.

Eric showed the failed LED bulbs in his TikTok video and didn’t hold back his thoughts. He said these LED parts were in his daughter’s Honda Element, and while regular filament bulbs do burn out sometimes, he’s frustrated by how often LED replacements fail. He now plans to remove LED bulbs from all his vehicles and switch back to regular bulbs.

The real danger of brake light failures

When brake lights don’t work, the risks are serious. Cars without working brake lights can easily get hit from behind. Around 264,000 car accidents happen each year in the U.S. because of brake-related problems. While this number includes mechanical failures, broken lights are a dangerous problem that can be avoided. Honda owners have faced costly repair quotes from mechanics for various vehicle issues.

The issue with LED bulbs goes deeper than just the bulbs burning out. When you replace a regular bulb with an LED in modern cars, it can cause electrical problems. Many newer cars use body control modules that check whether bulbs are working properly, typically by monitoring the current flowing through the old-style filament.

@ericthecarguy What are your thoughts on these LED bulbs? Are they great, or not so great? #led #ledbulb #taillight #opinion #ericthecarguy

♬ original sound – EricTheCarGuy

When you put in an LED bulb instead, the car’s system gets confused: an LED draws far less current than a filament bulb, so the module can mistake it for a burned-out one. This can lead to false warnings saying a bulb is out. Even worse, the confusion can cause bigger problems like the car not starting or a dead battery. Some companies add load resistors to fix this, but the resistor simply burns off the extra current as heat, which removes the energy-saving benefits that LEDs are supposed to provide.

Heat is another big problem for LED bulbs. While people expect LED bulbs to last up to 50,000 hours, they often fail much sooner. LED bulbs are sensitive electronic parts that can wear out and get dimmer over time instead of just burning out completely. Cheap aftermarket LEDs are especially prone to early failure because of poor-quality components and inadequate heat management.

People have mixed opinions about LED bulbs. Under Eric’s video, some commenters said quality matters, and that major bulb brands make good LED replacements. “I went through the same thing. Some last a long time, but many don’t. Not worth the headache. When back to standard filament bulbs for the consistency and reliability.

Would love to see someone come out with a quality LED replacement bulb lineup that isn’t absurdly expensive,” one user commented. “It all depend on the brand. I put some Auxito in my 2001 F150 with a LED relay. Haven’t had an issue. Your vehicles, your choices. Stay dirty,” another wrote.

Some people like LEDs for reverse lights but not for tail lights that stay on all the time. Similar to auto workers dealing with unexpected issues, mechanics often face tough decisions about recommending aftermarket parts.

A TikTok chef smelled something horrifying while cooking ground beef. The disturbing truth about US food safety has left them declaring ‘America is cooked’

A TikTok chef has absolutely blown up the debate over US food safety after discovering a powerful ammonia-like smell while cooking a package of ground beef, leading them to declare that “America is cooked.” The content creator, known as TheAudhdchef (@audhdchef), posted a video detailing the disturbing discovery, and it has already racked up over 190,000 views.

As reported by Daily Dot, the chef was browning a package of lean ground beef that was still days away from its expiration date. While the meat looked normal at first glance, the chef said it smelled strongly of ammonia and seemed much paler than usual. This is a huge red flag for anyone who buys meat regularly.

The chef immediately noted that this shouldn’t be happening with standard ground beef, explaining that processors sometimes use an ammonia method for scrap cuts of meat depending on the preparation. They emphasized that this was just a regular package of ground beef, not a scrap cut.

So, should you panic about ammonia in your meat?

It’s complicated because the smell can mean two different things. According to experts, some meat processors add small amounts of ammonia to the beef to help prevent the growth of dangerous pathogens like E. coli and Salmonella. Ammonia used in these tiny amounts poses no harm to humans. However, the smell can also be a warning sign: a strong ammonia odor is often a sign that meat is spoiling and may be dangerous to eat.

The disturbing experience has clearly shaken the chef. They lamented that they might have to quit eating beef and pork completely. The chef ended the viral video by referencing ammonia-use standards that have changed over the years, saying that “until regulations are back in place, it’s the only safe way to do it.” This really hits home if you’re concerned about what exactly is in your groceries.

“It smelled like ammonia”: Chef’s viral video reignites debate over U.S. beef processing https://t.co/dTGCACJND0 pic.twitter.com/rXsuXNYEUi

— The Daily Dot (@dailydot) November 22, 2025

This isn’t just random complaining, either. A professional chef of 20 years weighed in with a serious warning about the state of the industry. “I CANNOT believe this isn’t being talked about more! There are no regulations! There can’t be,” the chef stated. They added that produce often rots within 24 hours of purchase and that chicken packages are full of nasty blood and juice. They believe, quite bluntly, that “They’re literally trying to kill us.”

The community wasn’t without solutions, thankfully. Many commenters suggested that consumers need to take action by holding retailers accountable. One person urged others to “Return it. Put it in a bag and take it back to the store. If we don’t hold the stores accountable, they won’t hold their suppliers accountable.”

Others suggested ditching the big food chains entirely, and the chains haven’t exactly helped their own case: fast food chain Arby’s recently launched steak, which got rightfully grilled online. If you’re worried about quality, you should find a local butcher and buy only from them, as most still take pride in what they sell. One person who switched to buying meat from a farm noted that their beef is “beautiful and it smells good. The color is dark, like it should be.”

It seems like this single viral video has truly sparked a major discussion about consumer trust and how food is processed in the US. The current state here is not as bad as the recent food poisoning tragedy in Istanbul, but that should never be the benchmark anyway.

Mechanic quotes woman nearly $3,000 to fix Honda Accord suspension, but her $50 fix has TikTok absolutely furious

A TikTok user found a way to cut down a huge $2,800 car repair bill to just $50, all because she got some smart help from ChatGPT. The user @everything_bylaneise, who calls herself a young Martha Stewart, went viral right away after she showed how she avoided a massive quote for fixing her Honda Accord’s suspension by asking artificial intelligence for help.

According to Motor1, when a mechanic told her she needed to pay almost $3,000 to fix her suspension, she didn’t just say yes to the charge. Instead, she asked the AI tool for a better option. According to her, ChatGPT told her to use a junkyard part, which would only cost around $50. That’s a savings of $2,750, which is just crazy when you think about it.

“Mechanic told me $2,800 to fix my suspension,” she wrote on the screen in her viral video. “ChatGPT told me junk yard $50. Let’s go fix this mess.”

This proves DIY culture is winning against expensive repairs

While this was her first time doing this kind of car work, she wasn’t totally new to fixing things herself. She told viewers she already had the tools she needed because she works as an electrician and does home repairs. She recorded herself putting in the suspension and looked like she handled it without any real problems. This is a big win for people who take matters into their own hands.

Sadly, when you post a DIY video online, especially one about cars, you should expect some quick criticism. After her video got more than 571,300 views, a wave of negative comments from men filled the comment section. Many said she was doing the job wrong, but the main concern was safety. This isn’t the first time a TikTok user faced backlash over car content, as the platform often sparks heated debates.

However, @everything_bylaneise wasn’t going to let the criticism go unchecked. She quickly posted a follow-up video to prove a critic named Walt wrong and shut down the others. She showed viewers that she absolutely did have a jack stand in place and that there was a tire under the frame to keep the car steady.

She then spoke directly to the overly harsh criticism. “If you gonna try to ‘help’ maybe adjust your tone,” she replied. “Esp, when you don’t know what you’re talking about.” It’s clear her way of doing DIY upset some people, but she was following the right steps. With TikTok’s recent watermarking of billions of videos, her content reached an even wider audience than expected.

Despite the early wave of negativity, plenty of viewers cheered her on for her smart thinking. “Hell yeah get it sis. You just inspired me to show my repairs online I feel like we can show them women can do all these things as well..” one user wrote. “Proud of you,” another said.

You can learn anything online now, whether you’re fixing a computer or putting in a suspension part. @everything_bylaneise proves that sometimes, asking an AI for a second opinion can save you thousands of dollars, as long as you do the work safely and correctly.

Former world leader reveals what she’s most frustrated about after leaving office, and it has nothing to do with politics

Finland’s former Prime Minister Sanna Marin is really frustrated that her great four years in office are being overshadowed by a viral dance video. Two years after she left her position, Marin, who is 40 years old, says she’s still better known around the world for her “shimmy” than for her actual work in politics. You have to admit, that’s pretty annoying when you’ve guided a country through a global pandemic and a huge geopolitical change.

According to The Sun, Marin confirmed that even today, the dance moves are still what people remember her for. She told the media just how small that moment was compared to everything else, saying: “That night was, maybe, six hours of my life.”

It’s easy to forget just how much Marin accomplished while she was in charge, especially since the focus has been on her personal life. When she took the top job in 2019, she was only 34, making her the youngest sitting Prime Minister in the world. She successfully led Finland through the COVID-19 pandemic, keeping the death toll very low compared to many other countries. Even more importantly, she brought the country into NATO, securing a massive win for national security.

A viral moment shouldn’t define leadership

The media storm started just months after Finland announced its NATO membership bid. Videos of Marin dancing, singing, and drinking with friends in what looked like an apartment were posted online. The footage featured several public figures, including Finnish singer Alma, rapper Petri Nygard, and TV host Tinni Wikstrom, along with members of Marin’s own Social Democratic party.

The clips spread like wildfire and caused global speculation, especially after someone off-camera mentioned a “powder gang.” This was seen by some as hinting at cocaine, although other social media users suggested the term was more likely a reference to a popular Finnish alcoholic drink whose name sounds similar in Finnish.

Regardless, the rumors continued and caused global concern over whether or not she had taken drugs. To shut down the gossip and protect her reputation, Marin paid for a drug test, which thankfully came back negative. She consistently denied seeing any drugs at the party.

Marin is still angry about the uproar, arguing that the attention was driven by a “layer of misogyny.” She insists a male leader would never have faced the same level of scrutiny. That’s a powerful point, and I think she’s right about the double standard. Political figures across the spectrum have faced similar controversies, including Hunter Biden’s recent comments about Democrats.

She asked a key question that truly highlights the issue: “Nobody ever asked a male leader: ‘How can you come to work today and be that professional you, when you yesterday went to a pub with your guy friends?'” Now, Marin is trying to take back her public image by releasing a memoir. In the book, she argues that she fought to create a “world where you can, yes, dance freely when the day’s work is done.”

Marin has always been open about her love for going out with friends and has often been photographed at music festivals. The conversation around female political leaders continues to evolve, with figures like Marjorie Taylor Greene speaking about leadership challenges.

Woman orders Taco Bell and takes a bite, but what she discovers mid-chew leaves her with more questions than answers

A TikTok user from California named Kelsey (@kezleyyy) recently shared a shocking find after she bit into her Taco Bell Crunchwrap Supreme. She found a piece of knotted plastic covered in sauce stuck inside her meal. Talk about a nasty surprise! This is the kind of mistake that leaves you completely grossed out and wondering what really happens behind the counter at your favorite fast-food place.

According to Bro Bible, Kelsey posted a video about what happened, and it quickly got over 444,000 views. She was clearly very angry about the whole thing. She started her TikTok video by talking directly to the fast-food chain. She said, “Taco Bell, you better count your f***ing days, OK!” That’s a pretty strong way to start a complaint video, but it makes sense. She explained that she was right in the middle of taking a bite when she found the strange, sauce-covered object.

She held up the item for the camera, which was clearly a piece of plastic tied into a knot and covered in the messy filling from the Crunchwrap. She asked, “What the f*** is this? Why is there a plastic bag?” The really frustrating part is that Kelsey couldn’t get any answers from the location where she bought the food, which just made everything worse. If you’re a customer, you expect the restaurant to at least explain what foreign object you almost ate.

Finding plastic in your food raises serious concerns

Since nobody gave her an explanation, Kelsey started guessing about what was in the plastic bag, and she jumped straight to a really wild idea: she suggested that the bag “might have ‘rugs’ in it,” using the TikTok slang “rugs” for drugs. While this is a pretty extreme thought, finding a knotted bag in your food that shouldn’t be there definitely makes you think of all kinds of theories. It’s not the first time restaurant transparency has been called into question, especially after a host exposed OpenTable’s customer labeling system.

It’s easy to laugh about it, but this kind of secret drug selling has actually happened in real life. For example, police found out that a fast-food location was selling drugs after they realized ordering “fries, extra crispy” would get you a drug offer. This isn’t just a problem in the US, either. A German pizzeria was raided by police after they discovered that ordering a “number 40” meant the customer would get a bag of cocaine.

@kezleyyy @tacobell wtf. yall gotta do better!!!!!!!!!! #tacobell #rugs #psa

♬ Last Cup Swing – 華音

However, the likely answer to Kelsey’s mystery is much more boring, though still really gross. A commenter who said they used to work at Taco Bell explained that the plastic is the top of a carry-over bag.

This makes complete sense to anyone who knows how fast-food kitchens work. Taco Bell workers get the pre-cooked beef in a plastic bag. The top part of that bag is a tie-off point that has to be removed before the meat can be used and put on the steam table.

It seems very likely that someone removed this piece of plastic and, instead of throwing it out immediately, accidentally dropped it into Kelsey’s Crunchwrap Supreme while making it. While that explanation solves the mystery, it points to a serious problem with quality control. There should have been much better safety and cleanliness rules in place to stop it from ever happening. Taco Bell experiences aren’t always smooth, as one woman learned when her card got declined twice during a date.

Amanda Bynes finally addressed the teen pregnancy rumor, and the truth reveals who is really profiting from the chaos

Amanda Bynes is setting the record straight, confirming that the viral TikTok video claiming she was pregnant at age 13 with former Nickelodeon producer Dan Schneider’s child is totally fake. This is the latest in a string of ugly rumors the former Nickelodeon star has had to deal with, and she isn’t happy about how people are twisting her personal life for views.

Bynes told TMZ that the video currently making the rounds was absolutely not made by her. She’s calling the entire thing “completely bogus” and says it’s “altered and full of BS.” If you’ve seen the video, you know it’s designed to make it look like she’s leveling serious allegations against Schneider, but that couldn’t be further from the truth.

This whole situation is honestly awful for her. It’s deeply frustrating that people are willing to create and spread these kinds of lies just for profit. Bynes explained that whoever created the TikTok spliced together genuine clips from her social media to fabricate what she bluntly calls “lies for clickbait.” You’re watching someone’s personal content get lifted and twisted into a massive, harmful fabrication.

Bynes’ case is a stark reminder of social media’s harmful side

She detailed exactly how the manipulation happened, too. The very first clip in the viral TikTok was actually ripped from a recent Instagram Story she posted. In her original Story, she turns the camera to show the guy she’s dating. However, the edited TikTok cuts that moment out completely, jumping to a different clip before the camera can turn. That level of editing is incredibly deceptive, and it’s disappointing to see this kind of malicious content being shared widely.

This bogus video couldn’t come at a worse time, either, considering the legal battle Dan Schneider is currently waging. Schneider is suing the producers of the recent Quiet on Set documentary for defamation. He claims that the documentary falsely portrayed him as a child sexual abuser, which, he argues, has ruined his professional reputation. The lawsuit shows just how sensitive the atmosphere is right now, making the false rumor about Bynes particularly toxic and unfair to everyone involved.

Amanda Bynes Denies Viral TikTok Claiming Dan Schneider Got Her Pregnant at 13 https://t.co/561Sad1Uba pic.twitter.com/cepQz6n8jt

— TMZ (@TMZ) November 21, 2025

While the pregnancy claim is completely false, the allegations and conversations surrounding Schneider aren’t new. They’re part of a larger discussion about the environment on Nickelodeon sets years ago. Lori Beth Denberg, an All That alum, previously accused Schneider of acting inappropriately when she was a young adult working on the show. Schneider, however, responded to those specific accusations by saying that Denberg was exaggerating the situation.

It seems clear that whoever fabricated the Bynes pregnancy video was capitalizing on ongoing public interest in the Quiet on Set documentary and the discussions surrounding Schneider. It’s a sad reality that people will use genuine trauma and serious allegations as fodder for viral content, and it’s especially troubling when they target someone like Amanda Bynes, who has been working hard to protect her privacy and focus on her well-being.

You’ve got to be careful what you click on. Sometimes viral videos lead you to innocuous, feel-good Christmas decorations; other times they aren’t news at all, just clickbait trying to steal your attention.